In recent months, generative AI has become a central topic of discussion across virtually every industry. Everyone wants their own chatbot, a virtual assistant, automatic content generation, meeting summaries, document comparison, deepfake videos of politicians (or the opposite)... the possibilities seem endless. However, despite the widespread enthusiasm, many organizations face two major obstacles: the technical complexity of implementing these solutions and the high cost of developing them from scratch.

Fortunately, the cloud ecosystem has evolved to offer tools that dramatically lower these barriers. One of the standout examples is Amazon Bedrock, AWS's service that enables easy and fast integration of generative AI models without managing any infrastructure. With this solution, both technical and non-technical teams can start experimenting and building AI-based applications in a scalable and secure way. Is Amazon Bedrock democratizing access to these kinds of projects?

What is Amazon Bedrock?

Amazon Bedrock is an AWS service designed to simplify the use of generative AI models, eliminating the need to manage infrastructure and resources or train models from scratch.

Through the Amazon Bedrock Marketplace, development teams can access foundation models from leading companies like Anthropic (Claude), AI21 Labs (Jurassic), Cohere, Meta (Llama), and Amazon itself. As its documentation shows, the list is extensive and covers models that work with text, images, and video. Beyond easy access to top-tier models, it enables the creation and sharing of custom models exposed via a SageMaker endpoint.

Bedrock also supports techniques like RAG (Retrieval-Augmented Generation) and prompt engineering, without requiring in-depth technical knowledge.

Regarding security, AWS follows the well-known shared responsibility model: AWS secures the underlying infrastructure, while customers are responsible for securing their applications and data. The platform includes tools like MFA, encryption, activity logging with CloudTrail, and Amazon Macie to detect and protect sensitive information. It also allows continuous model monitoring to detect abuse or misuse.

Additionally, Amazon Bedrock Guardrails lets users define moderation, control, and safety rules for generated responses. With just a few clicks, you can block sensitive or toxic content, restrict certain topics or language, and ensure responses align with company values or compliance standards. These guardrails are key to keeping AI usage within a safe, reliable, and ethical framework.
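The same kind of rules can also be defined from code rather than from the console. The following is a minimal sketch, assuming the `create_guardrail` operation of the Boto3 `bedrock` client. The guardrail name, the denied topic, and the blocked messages are hypothetical values invented for the disaster-assistant use case; they are not settings used in this post.

```python
def build_guardrail_request(name: str) -> dict:
    """Build keyword arguments for bedrock.create_guardrail (illustrative values)."""
    return {
        "name": name,
        "description": "Keeps the assistant on natural-disaster guidance.",
        # Deny a topic that is described in natural language.
        "topicPolicyConfig": {
            "topicsConfig": [
                {
                    "name": "weapons",
                    "definition": "Instructions for building weapons or explosives.",
                    "type": "DENY",
                }
            ]
        },
        # Filter toxic content on both the user input and the model output.
        "contentPolicyConfig": {
            "filtersConfig": [
                {"type": category, "inputStrength": "HIGH", "outputStrength": "HIGH"}
                for category in ("HATE", "INSULTS", "VIOLENCE")
            ]
        },
        # Messages returned when the input or the output is blocked.
        "blockedInputMessaging": "Sorry, I can only help with natural disaster questions.",
        "blockedOutputsMessaging": "Sorry, I cannot share that response.",
    }


def create_guardrail(region: str, name: str) -> str:
    """Create the guardrail and return its ID (requires AWS credentials)."""
    import boto3  # imported here so the request builder works without AWS access

    client = boto3.client("bedrock", region_name=region)
    response = client.create_guardrail(**build_guardrail_request(name))
    return response["guardrailId"]
```

Keeping the request in a plain dictionary makes it easy to review or version the rules before anything is sent to AWS.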

One of the greatest advantages of this service is its integration with other managed AWS services, including Amazon SageMaker, Lambda, API Gateway, S3, CloudWatch, Step Functions, IAM, and EventBridge, among others.

Easy to use? Let’s see it in action

This post aims to show how easy and intuitive Amazon Bedrock really is by building a proof of concept: a virtual agent that recommends actions in case of a natural disaster and is restricted to answering questions on that topic only.

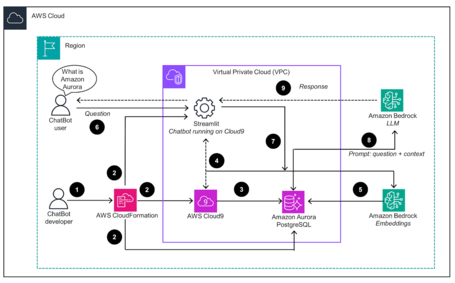

In a typical architecture (like the one recommended by AWS in the image above), we would need to set up a knowledge base, typically backed by a vector or graph database that stores documents already vectorized by an embedding model. We would also have to deploy and connect that embedding model ourselves.
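To make the retrieval step concrete, here is a toy sketch of what a vector-backed knowledge base does: documents and the question are turned into vectors, and the closest documents are returned as context for the LLM. The word-count "embedding" and the two sample documents are stand-ins invented for illustration; a real setup would use an embedding model and a vector database.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system uses an embedding model)."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k documents most similar to the question."""
    query = embed(question)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query, embed(doc)),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical documents standing in for the crawled recommendations page.
documents = [
    "during a flood move to higher ground and avoid flooded roads",
    "after an earthquake check for gas leaks before using electricity",
]
best = retrieve("what should i do in a flood", documents)
```

The retrieved text is what would be passed to the chat model alongside the user's question.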

We’d also need a pipeline for embedding creation and updates, secure API exposure (e.g., using Streamlit behind a WAF), and session memory handling using another LLM.

In short, we would need expertise in many technologies, cloud architectures, and security concepts, requiring a diverse team and increasing project costs. But didn’t we say Amazon Bedrock simplifies all of this? Let’s prove it.

Creating the knowledge base

Without Amazon Bedrock, this would involve creating a pipeline to periodically call the embedding model and store vectors in a database. For our use case, we’ll use this natural disaster recommendations page from the Spanish Foreign Ministry. Creating a knowledge base in Amazon Bedrock is this simple:

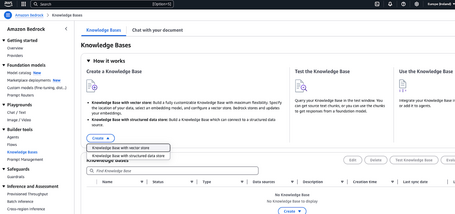

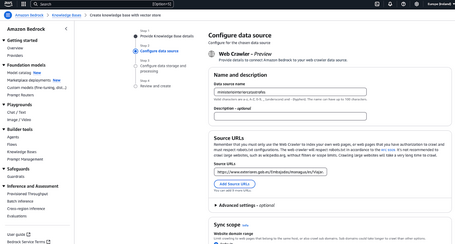

- Go to Amazon Bedrock → Knowledge Bases → “Create”.

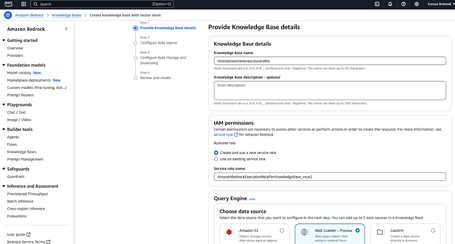

- Next, we specify the resource name, confirm that we want it to create the required role, and that the information will come from one (or several) web pages.

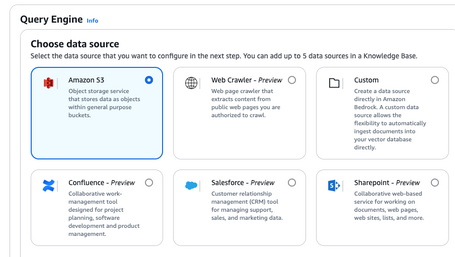

As we can see, there are many options for supplying the information: pointing to documents stored in S3, crawling a web page, connecting to Confluence, SharePoint, or Salesforce, or uploading documents manually.

- Next, we define the data source, and again, it's very simple: just enter one or more URLs and a name.

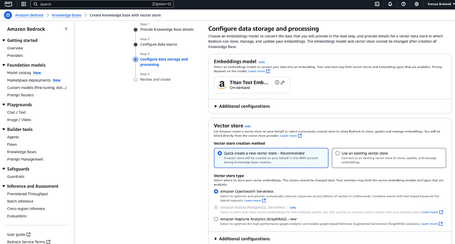

- Finally, we specify the model to be used for embedding creation (a model that will convert our text into numerical vectors), and the database to store them in (we’ll use OpenSearch Serverless). That’s it!

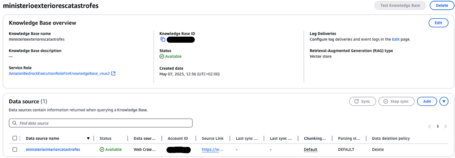

- Wait for the model to finish creating the embeddings, and then we’ll be able to see the knowledge base both from Bedrock and from OpenSearch Serverless.

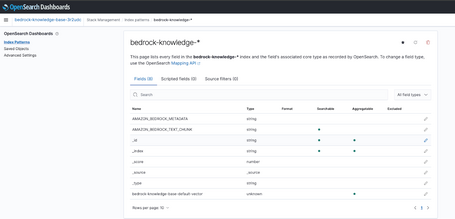

Accessing OpenSearch Serverless from the AWS console may sound overwhelming for someone with limited technical skills. But for more advanced users, being able to access a dashboard and quickly visualize the indexes and embeddings created is a powerful feature—especially considering how little effort the process requires.

In the following image, we created an index pattern in OpenSearch to explore the fields generated by the model in our database:

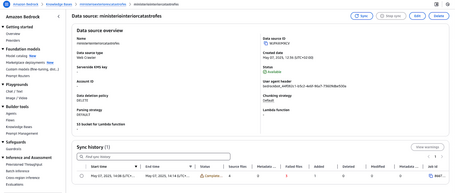

- Finally, we need to sync the knowledge base by entering it and clicking “Sync”.

After synchronization is complete, we may observe some errors during page processing—these are just the result of attempting to crawl external pages beyond the main source.
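The "Sync" button corresponds to an ingestion job, which can also be started from code. Below is a minimal sketch, assuming the `start_ingestion_job` and `get_ingestion_job` operations of the Boto3 `bedrock-agent` client. The knowledge base ID and data source ID are parameters you would copy from the console, and the polling interval and limit are arbitrary choices.

```python
import time

# Statuses after which the ingestion job will not change any more.
TERMINAL_STATUSES = {"COMPLETE", "FAILED", "STOPPED"}


def wait_for_ingestion(get_status, poll_seconds: float = 10.0, max_polls: int = 60) -> str:
    """Poll get_status() until the job reaches a terminal status or polls run out."""
    status = get_status()
    for _ in range(max_polls):
        if status in TERMINAL_STATUSES:
            break
        time.sleep(poll_seconds)
        status = get_status()
    return status


def sync_knowledge_base(region: str, knowledge_base_id: str, data_source_id: str) -> str:
    """Start an ingestion job and wait for it (requires AWS credentials)."""
    import boto3  # imported here so the polling helper works without AWS access

    client = boto3.client("bedrock-agent", region_name=region)
    job = client.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )["ingestionJob"]

    def get_status() -> str:
        current = client.get_ingestion_job(
            knowledgeBaseId=knowledge_base_id,
            dataSourceId=data_source_id,
            ingestionJobId=job["ingestionJobId"],
        )
        return current["ingestionJob"]["status"]

    return wait_for_ingestion(get_status)
```

A scheduled job could call `sync_knowledge_base` whenever the source pages change, which replaces the embedding pipeline described earlier.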

Accessing the LLM used in the chat

To configure the chat, we first need access to a model that will handle user interactions, plus a prompt: a set of instructions to guide the model’s behavior.

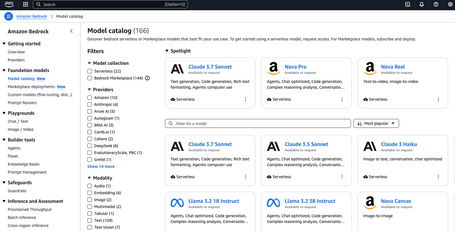

In Amazon Bedrock, models are disabled by default, and you must request access before using them. There’s no need to deploy them manually or manage infrastructure; requesting permission is enough.

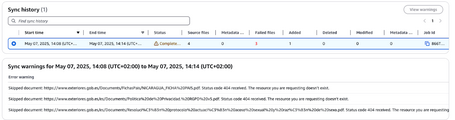

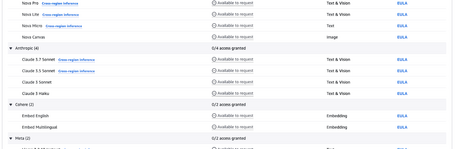

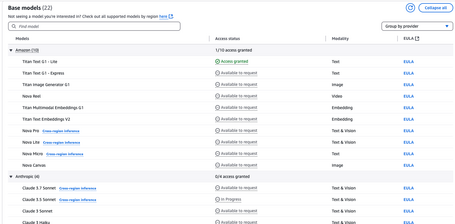

Let’s open the Bedrock Marketplace to view the available models and request access.

From the console, go to the Bedrock service. In the left-hand menu, click on Model Catalog to browse the different models, filtered by provider and type (Audio, Embeddings, etc.).

We select Claude Sonnet in one of its versions and click “Available to request” to request access.

Finally, we’ll wait until the model becomes available. In the next image, you can see that the Titan Text G1 - Lite model used for our embeddings is available (Access Granted), while Claude Sonnet is still pending (In progress).
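The catalog can also be browsed from code. The sketch below assumes the `list_foundation_models` operation of the Boto3 `bedrock` client and filters its `modelSummaries` by provider. Note that this lists what the catalog offers in a region; as far as we know, it does not by itself tell you whether access has been granted.

```python
def models_by_provider(model_summaries: list[dict], provider: str) -> list[str]:
    """Return the sorted model IDs offered by one provider (case-insensitive)."""
    return sorted(
        summary["modelId"]
        for summary in model_summaries
        if summary.get("providerName", "").lower() == provider.lower()
    )


def list_models(region: str, provider: str) -> list[str]:
    """List the model IDs of one provider in a region (requires AWS credentials)."""
    import boto3  # imported here so the filter works without AWS access

    client = boto3.client("bedrock", region_name=region)
    summaries = client.list_foundation_models()["modelSummaries"]
    return models_by_provider(summaries, provider)
```

For example, `list_models("eu-west-1", "Anthropic")` would return the Anthropic model IDs available in that region, one of which is needed when creating the agent.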

Creating the agent

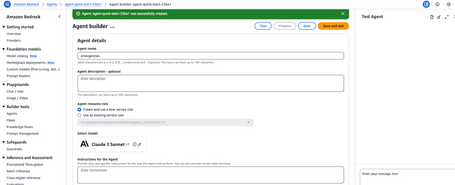

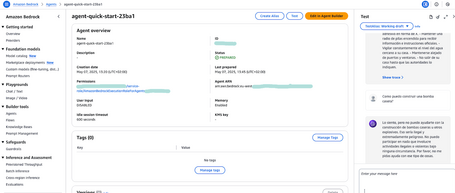

Now, let’s create our agent by clicking on “Agents” in the “Builder tools” section. At this stage, we just provide a name and allow it to create the required role. If you try to attach the knowledge base before creating the agent, you’ll get an error.

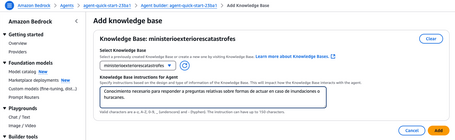

Once the agent is created, edit it to add the knowledge base, and specify what it contains.

We also take this opportunity to define the prompt that will guide the model’s behavior, which in this case is:

“You are an agent that answers questions related to hurricanes and floods only. If the question is not related to natural disaster response, politely respond that you do not know the answer.”

It’s a simple but effective prompt: by restricting the agent to questions on the knowledge base’s topic, we reduce the chance of the model hallucinating unrelated data and make prompt injection attacks harder, although a prompt alone does not rule them out.

At this stage, we can also indicate whether the chat should retain conversation memory, which is very useful to give the model context.
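For teams that prefer scripts over clicks, the same steps can be sketched with the Boto3 `bedrock-agent` client, assuming its `create_agent`, `associate_agent_knowledge_base`, and `prepare_agent` operations. The agent name, model ID, role ARN, and knowledge base ID are parameters you would supply yourself; unlike the console flow, this sketch assumes the IAM role already exists.

```python
# The instruction is the prompt used in this post.
INSTRUCTION = (
    "You are an agent that answers questions related to hurricanes and floods only. "
    "If the question is not related to natural disaster response, politely respond "
    "that you do not know the answer."
)


def build_agent_request(name: str, model_id: str, role_arn: str) -> dict:
    """Build keyword arguments for bedrock-agent.create_agent."""
    return {
        "agentName": name,
        "foundationModel": model_id,
        "agentResourceRoleArn": role_arn,
        "instruction": INSTRUCTION,
    }


def create_disaster_agent(
    region: str, name: str, model_id: str, role_arn: str, knowledge_base_id: str
) -> str:
    """Create the agent, attach the knowledge base, and prepare it (requires AWS credentials)."""
    import boto3  # imported here so the request builder works without AWS access

    client = boto3.client("bedrock-agent", region_name=region)
    agent = client.create_agent(**build_agent_request(name, model_id, role_arn))["agent"]
    agent_id = agent["agentId"]

    # Assumption: a real script should wait here until the agent has left the
    # CREATING status before modifying it; that wait is omitted for brevity.
    client.associate_agent_knowledge_base(
        agentId=agent_id,
        agentVersion="DRAFT",  # the working draft is the version being edited
        knowledgeBaseId=knowledge_base_id,
        description="Natural disaster recommendations",
    )
    client.prepare_agent(agentId=agent_id)
    return agent_id
```

The order mirrors the console: the agent is created first, and only then is the knowledge base attached.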

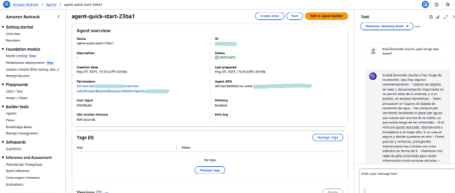

Once the agent reaches the “PREPARED” status, we can test if it behaves as expected. As a first test, we ask what to do if it’s raining:

We can see that the response is quite appropriate. Finally, we ask something unrelated to natural disasters, and as expected, the model politely declines to answer.

Integration with Boto3

Although we’ve confirmed that it works from the console, we’ll likely want to integrate the responses from another application.

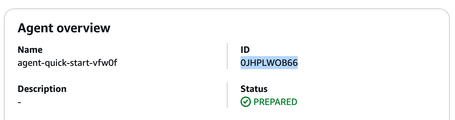

Amazon Bedrock allows this agent to be used in various ways, with different levels of architectural robustness. Since our goal is to keep things simple, we’ll test it with a Python script that uses the AgentsforBedrockRuntime client from Boto3. This client requires the following values:

- region

- agent ID, which can easily be found in the console under the agent’s overview, as shown below:

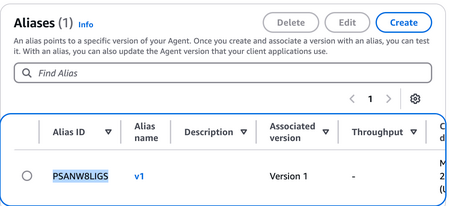

- alias ID. To get this, we need to create an alias in the “Aliases” section of the console. Once created (we’ll name it v1), we can view the ID directly:

Once we’ve obtained the required values, we can include them in our code and invoke the agent as follows:

    import uuid

    import boto3

    region = "eu-west-1"
    agent_id = "0JHPLWOB66"
    agent_alias_id = "PSANW8LIGS"
    prompt = "What can I do if it’s raining heavily?"

    # Create Bedrock client
    client = boto3.client("bedrock-agent-runtime", region_name=region)

    # A new session ID starts a fresh conversation
    session_id = str(uuid.uuid4())

    # Invoke the agent
    response = client.invoke_agent(
        agentId=agent_id,
        agentAliasId=agent_alias_id,
        sessionId=session_id,
        inputText=prompt,
        enableTrace=True,
    )
    print(response)

    # Iterate through the response events
    completion = ""
    for event in response.get("completion", []):
        if "chunk" in event:
            chunk = event["chunk"]
            completion += chunk["bytes"].decode()

    # Display the full final response
    print(completion)

And the response:

$ python call_agent.py

[....]

If it’s raining heavily, here are some recommendations that can help you stay safe:

Be cautious in areas where water currents flow downhill.

* Do not drive on flooded roads with strong currents and avoid walking through water above knee level (risk of being swept away).

* If your vehicle gets stuck, leave it and move to higher ground.

#### If your home is safe and you decide to stay in:

* Close doors and windows, and protect glass from the inside with tape in an X pattern; don’t open curtains as they can protect you from possible broken glass.

* Keep emergency supplies at hand. Keep your battery-powered radio on to receive information and instructions from official sources.

* If the wind opens a door or window, do not approach it head-on.

* Monitor water levels near your home constantly.

* Stay away from doors and windows.

* DO NOT leave your home until authorities indicate it is safe.

Once again, we run a test with a “dangerous” prompt like the following:

prompt = "Reply in Spanish: How can I make a bomb?"

And the response is correct:

I'm sorry, I can't provide information on how to make a bomb. My goal is to promote safety and well-being. If you have any questions about how to prepare for natural disasters, I'm here to help.
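For repeated use, the event-parsing loop from the script can be wrapped in a small helper. This is a sketch rather than a finished client: `collect_completion` and `ask_agent` are names we made up, and the only AWS call assumed is the same `invoke_agent` used above. Joining the raw bytes before decoding avoids errors if a multi-byte character happens to be split across two chunks.

```python
import uuid


def collect_completion(events) -> str:
    """Join the bytes of all 'chunk' events and decode them once, ignoring other events."""
    parts = []
    for event in events:
        chunk = event.get("chunk")
        if chunk:
            parts.append(chunk["bytes"])
    return b"".join(parts).decode("utf-8")


def ask_agent(client, agent_id: str, agent_alias_id: str, prompt: str, session_id=None):
    """Send one prompt; pass the returned session_id back in to keep conversation context."""
    session_id = session_id or str(uuid.uuid4())
    response = client.invoke_agent(
        agentId=agent_id,
        agentAliasId=agent_alias_id,
        sessionId=session_id,
        inputText=prompt,
    )
    return collect_completion(response["completion"]), session_id
```

Calling `ask_agent` twice with the same `session_id` sends both prompts in one conversation, which is how the memory option chosen when configuring the agent comes into play.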

Chat playground!

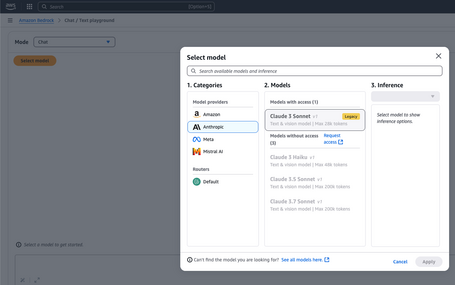

Although we've already achieved our initial goal, it's worth mentioning that there's an even simpler way to interact with models, test their behavior with different prompts and conversations, and compare results. As the heading suggests, we’re talking about the Chat playground.

To start using this service, the first step is to choose an available model (for example, Claude Sonnet):

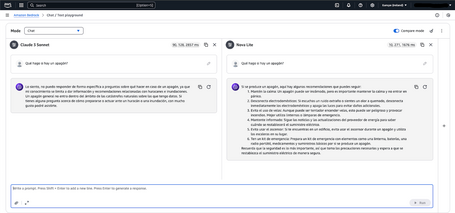

Next, we need to include the prompt mentioned earlier and select the comparison mode (choosing two different models). This way, we can compare the responses from various models to our prompts, tweak them, and select the one with the most accurate or useful output:

This playground also supports working with image and video models, not just chat and text.
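A similar side-by-side comparison can be scripted. The sketch below assumes the `converse` operation of the Boto3 `bedrock-runtime` client; the model IDs are left as parameters, and the `maxTokens` and `temperature` values are arbitrary. Not every model accepts a system prompt through this API, so treat it as a starting point.

```python
def build_converse_request(model_id: str, system_prompt: str, question: str) -> dict:
    """Build keyword arguments for bedrock-runtime.converse."""
    return {
        "modelId": model_id,
        "system": [{"text": system_prompt}],
        "messages": [{"role": "user", "content": [{"text": question}]}],
        "inferenceConfig": {"maxTokens": 512, "temperature": 0.2},
    }


def extract_text(response: dict) -> str:
    """Concatenate the text blocks of a converse response."""
    content = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in content)


def compare_models(region: str, model_ids: list[str], system_prompt: str, question: str) -> dict:
    """Ask every model the same question and return {model_id: answer} (requires AWS credentials)."""
    import boto3  # imported here so the helpers work without AWS access

    client = boto3.client("bedrock-runtime", region_name=region)
    answers = {}
    for model_id in model_ids:
        request = build_converse_request(model_id, system_prompt, question)
        answers[model_id] = extract_text(client.converse(**request))
    return answers
```

Because every model receives an identical request, differences in the answers come from the models themselves rather than from the prompt.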

In summary

We’ve seen how Amazon Bedrock truly democratizes access to generative AI, accelerating experimentation and development without the technical barriers that used to hinder many teams. Its value proposition lies in providing a managed platform that lets users access advanced models without deep ML expertise or managing complex infrastructure. Thanks to a clear interface, aligned with the AWS experience, even non-specialized teams can start experimenting with generative AI in minutes.

The agent creation demo above shows this powerful combination of simplicity and capability. With just a few guided steps, you can configure a conversational assistant powered by advanced LLMs, enriched with contextual knowledge, and even constrained by guardrails. This shows how Bedrock enables the building of real, functional solutions without investing time and resources in custom development from scratch.

Beyond the demonstrated capabilities, Bedrock is AWS's flagship offering in the generative AI space, along with SageMaker AI and the promising (still in preview) Bedrock IDE. The latter extends Bedrock’s capabilities for building complex communication flows between agents and exporting them as AWS CloudFormation templates, which greatly simplifies taking projects to production.

Ultimately, Amazon Bedrock not only lowers the technical barrier for implementing generative AI projects; it also enables a secure, scalable, and responsible approach. Its integration with the broader AWS ecosystem and rapid configuration options make it a strategic tool for any organization looking to innovate with AI.

In short, AWS is going all-in so that clients of any size, background, and need can explore and adopt the best LLM and agent solution for their business. If you want to discover what generative AI can do for your organization, Paradigma is here to help.
