Imagine that, as a software engineer with Python experience, you've just been assigned your first "agentic" project. The excitement is real. It all begins with a promising PoC (Proof of Concept) in a web playground where the language model seems to understand everything.

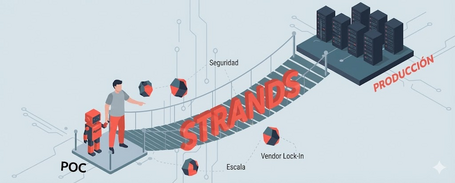

However, reality quickly sets in: the path from that PoC to a robust, scalable, secure, production-ready application is a minefield of architectural decisions.

You’re at a crossroads with questions that will shape your project’s future:

- Model provider (LLM). What will be the agent’s “brain”? Do we go with OpenAI, Anthropic (Claude), Google (Gemini), or open-source models like Mistral? And most importantly, how do we ensure that a new provider passes the compliance reviews and approval processes of a large enterprise?

- Hosting. Where will this intelligence live? In the cloud, leveraging our main provider (e.g. AWS)? Or do we want the option to run an LLM in our own data center for sensitive data that should never reach the cloud?

- Knowledge access. How will the agent learn about our business? Should we implement a Retrieval-Augmented Generation (RAG) system to query general internal documentation, or do we need segregated access to data based on the user interacting with the agent?

- Agentic framework. And the most critical decision: what will be the central nervous system connecting the model, tools, and data? Popular options include LangChain and alternatives like crewAI, but which one aligns best with our long-term vision?

Every decision implies a trade-off. Reducing vendor lock-in for each component is a strategic priority; we don’t want today’s choice to limit tomorrow’s innovation.

At the same time, we must enable our existing data and Python engineering team to become “agentic engineers” without an overwhelming learning curve. The framework we choose is the keystone of this puzzle, as it will dictate architecture, development speed, and future flexibility.

In this complex landscape, Strands emerges as a particularly compelling option. Although it's an AWS-led initiative, the project is open-source at its core and designed to directly address enterprise-grade concerns — especially for a new project that:

- Relies on AWS as the main cloud provider: Strands offers native integrations and a clear production path within the AWS ecosystem.

- Has capable data engineers familiar with Python and AWS: as a pure Python library, it has a low barrier to entry, enabling the current team to become productive quickly.

- Wants the flexibility to switch LLM providers: Strands is model-agnostic and provider-agnostic, letting teams experiment and adopt the latest LLM technology without being locked into a single ecosystem.

- Must meet enterprise standards: Strands is production-oriented, helping teams build infrastructure that fits into CI/CD pipelines and meets GDPR and data governance requirements.

Next, we’ll explain how Strands achieves this balance and why it might be the strategic choice for your first production-grade agentic application.

What is Strands? The anatomy of a “Model-Driven” agent

Strands’ philosophy materializes in a radically simple architecture. Instead of a complex web of abstractions, building an agent comes down to defining three core components in Python code — a structure reminiscent of the DNA strands that inspired the project's name: model and tools connected by the prompt.

The three pillars of a Strands agent

- Model

This is the agent’s reasoning engine — its brain — responsible for interpreting intent, planning actions, and deciding what to do next. By default, Strands uses a Claude 3.x Sonnet model via Amazon Bedrock, providing a great starting point with a solid cost-capability balance. However, this is just a default config, not a hard dependency.

- Tools

These are the agent’s capabilities to act: its “hands” for interacting with the outside world. A tool in Strands can be as simple as a Python function with an @tool decorator or as complex as an external service exposed through an MCP server or through Amazon Bedrock AgentCore, AWS’s managed platform for running and connecting agents. The agent doesn’t execute tools blindly; the model selects and uses them based on its understanding of the task at hand.

- Prompt

This is the “soul” of the agent: the natural language instruction set that defines its purpose and behavior. It’s essential to distinguish between the user prompt, which defines the immediate task (e.g., "summarize this document"), and the system_prompt, which defines the agent’s identity, rules, personality, and overall context (e.g., "You are a financial analyst. Be concise and only base your responses on the data provided by your tools.").

In practice, combining these three pillars is as simple as instantiating the Agent class and passing it a task.

from strands import Agent
from strands_tools import http_request

simple_agent = Agent(
    name="Research Assistant",
    # Bedrock model ID; the "eu." prefix selects an EU cross-region inference profile
    model="eu.amazon.nova-pro-v1:0",
    # Capabilities the model may decide to call
    tools=[http_request],
    system_prompt="You are a research assistant with analytical capabilities. Use tools when needed to provide accurate, data-driven responses.",
)

# The user prompt: the immediate task for this invocation
simple_agent("Compare the price of top 5 reasoning Bedrock models for text, from providers Anthropic and MistralAI")

License Verification and Commercial Use

A key factor in the adoption of any technology within the enterprise environment is its licensing model. The Strands SDK is released under the Apache 2.0 license, one of the most permissive and widely respected open-source licenses in the industry. In practical terms, this means any individual or organization can:

- Freely use the software for any purpose, including commercial and for-profit projects, without any licensing fees.

- Modify the source code to tailor it to specific needs.

- Distribute copies of the original or modified software.

This choice of license removes a critical adoption barrier and fosters an open ecosystem, ensuring companies can confidently build mission-critical solutions on top of Strands without legal uncertainty or fears of hidden costs or future vendor lock-in.

Agnostic Architecture: Freedom of Choice in an Open Ecosystem

One of the most powerful and strategic features of Strands is its agnostic design. Despite being initiated by AWS, the SDK does not impose any mandatory dependencies on its ecosystem. It is, at its core, a pure Python library that can run anywhere Python runs — on a laptop, an on-prem server, or any cloud provider.

Model and Provider Agnosticism

Strands' flexibility is most evident in its ability to interact with a wide variety of language models from different providers. Swapping out an agent’s "brain" is as simple as instantiating a different Model Provider, often with just a single line of code.

Here are examples of how to instantiate a Strands agent using several popular providers:

- Amazon Bedrock (default configuration):

from strands.models import BedrockModel

# Use the Claude 3.7 Sonnet model on Bedrock
bedrock_model = BedrockModel(model_id="us.anthropic.claude-3-7-sonnet-20250219-v1:0")

- Google Gemini:

from strands.models.gemini import GeminiModel

# The API key is handed to the underlying Gemini client through client_args
gemini_model = GeminiModel(
    client_args={"api_key": "YOUR_GEMINI_API_KEY"},
    model_id="gemini-1.5-pro-latest",
)

It’s important to highlight that while Strands promotes the use of alternative providers beyond AWS, such as Google, Ollama, or MistralAI, the implementations for these providers are not as up-to-date or robust as the Amazon Bedrock one, since AWS sponsors the project.

For instance, the Gemini model class is limited to the Gemini API endpoint v1beta, offering a small model selection and lacking support for Vertex AI credentials (i.e., Gemini models served through Google Cloud). Because the project is open-source, this can be addressed by forking the repo or by writing a custom Model class.
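
The provider-swap idea described in this section can be sketched without any SDK installed. The snippet below is a minimal, stdlib-only illustration, not Strands code: `ModelProvider`, `FakeBedrockModel`, `FakeGeminiModel`, and `MiniAgent` are hypothetical names, and the fake providers only echo the prompt instead of calling a real API.

```python
from dataclasses import dataclass
from typing import Protocol


class ModelProvider(Protocol):
    """Hypothetical minimal interface that every provider wrapper satisfies."""

    def generate(self, system_prompt: str, user_prompt: str) -> str: ...


@dataclass
class FakeBedrockModel:
    model_id: str

    def generate(self, system_prompt: str, user_prompt: str) -> str:
        # A real wrapper would call the provider's API here.
        return f"[bedrock:{self.model_id}] {user_prompt}"


@dataclass
class FakeGeminiModel:
    model_id: str

    def generate(self, system_prompt: str, user_prompt: str) -> str:
        return f"[gemini:{self.model_id}] {user_prompt}"


@dataclass
class MiniAgent:
    model: ModelProvider
    system_prompt: str

    def __call__(self, user_prompt: str) -> str:
        # The agent only depends on the interface, never on a concrete provider.
        return self.model.generate(self.system_prompt, user_prompt)


agent = MiniAgent(FakeBedrockModel("model-a"), "You are a research assistant.")
first = agent("hello")

# Swapping the provider is a one-line change; the agent code stays the same.
agent.model = FakeGeminiModel("model-b")
second = agent("hello")
```

The same shape is what makes a custom model class a viable workaround for provider gaps: anything that satisfies the interface can be plugged in.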

The Tooling Ecosystem: Native, Custom, and Cloud Capabilities

The same open philosophy applies to the tooling ecosystem, which can be categorized into four main types:

- Native tools: Strands is complemented by its sibling project, strands-agents-tools, which provides a set of ready-to-use tools. These include core functionalities like getting the current time (current_time), file operations (file_read, file_write), shell command execution (shell), HTTP requests (http_request), and even a web browser (browser). This enables an agent to interact with the outside world right out of the box.

- Custom tools: The most direct way to extend an agent’s capabilities is to convert any Python function into a custom tool using the @tool decorator. Strands inspects the function’s name, type annotations, and docstring to build a schema the LLM can understand. A well-written docstring is not just documentation for other developers — it becomes the API that the model uses to determine when and how to call the tool.

from strands import tool

@tool
def get_user_details(user_id: int) -> dict:
    """
    Retrieves user details from the database.

    Use this tool when you need to get information about a specific user by their ID.
    """
    # Logic to connect to the DB and fetch user details...
    return {"id": user_id, "name": "Jane Doe", "email": "jane.doe@example.com"}
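
To make the "docstring as API" point concrete, here is a rough stdlib-only sketch of how a function's name, type annotations, and docstring could be turned into a schema for the model. It is an illustration of the idea rather than Strands' actual implementation: `describe_tool`, the type mapping, and the schema layout are all assumptions.

```python
import inspect
from typing import Any, Callable, Dict, get_type_hints

# Hypothetical mapping from Python annotations to JSON-schema type names.
_JSON_TYPES = {int: "integer", float: "number", str: "string",
               bool: "boolean", dict: "object", list: "array"}


def describe_tool(func: Callable[..., Any]) -> Dict[str, Any]:
    """Build a schema-like description from a function's signature and docstring."""
    hints = get_type_hints(func)
    hints.pop("return", None)  # only the inputs belong in the input schema
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        # Unannotated parameters fall back to "string" in this sketch.
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "input_schema": {"type": "object", "properties": properties, "required": required},
    }


def get_user_details(user_id: int, include_email: bool = True) -> dict:
    """Retrieve user details by ID."""
    return {"id": user_id}


spec = describe_tool(get_user_details)
```

Everything the model knows about the tool comes from `spec`, which is why a vague docstring or a missing annotation directly degrades tool selection.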

- Server Tools with Model Context Protocol (MCP): To scale and standardize tool access, Strands natively integrates the Model Context Protocol (MCP). MCP is an open standard that enables services to expose their capabilities in a way that AI agents can discover and consume uniformly. Instead of implementing a custom API client for each service, agents can use Strands' MCPClient to connect to an MCP server and gain access to its full tool catalog.

- Bedrock AgentCore Tools: For teams operating within the AWS ecosystem, AgentCore provides simplified yet controlled access to major cloud services. For example, AgentCore Memory enables a smooth yet robust transition from short-term memory (conversation history) to long-term memory, handling persistence and retrieval across sessions transparently for the agent.
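
For the MCP item in the list above, it may help to see what "discover and consume uniformly" looks like on the wire. MCP messages are JSON-RPC 2.0, with `tools/list` for discovery and `tools/call` for invocation. The sketch below only builds those two request payloads with the standard library; the transport, the initialization handshake, and the response handling that a client such as Strands' MCPClient takes care of are omitted, and the helper `jsonrpc_request` and the tool name are hypothetical.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

_request_ids = count(1)  # JSON-RPC requests carry a unique id


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Discovery: ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invocation: call one tool by name with structured arguments.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "get_user_details", "arguments": {"user_id": 42}},
)

wire_payload = json.dumps(call_tool)
```

Because every server answers the same two methods, an agent needs one client rather than one custom API integration per service.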

AWS's strategy with Strands and its managed services is a brilliant example of how a company can foster an open ecosystem while creating a clear path toward its own value-added solutions.

By launching Strands as an open-source and agnostic SDK, AWS appeals to the developer community that values flexibility and is wary of vendor lock-in. A developer can start building an agent locally, using models from OpenAI, Google, Ollama… without touching AWS infrastructure.

Amazon Bedrock AgentCore

However, once that agent is ready for production, critical questions about scalability, security, observability, and management arise. This is where AWS introduces its complementary solution: managed services like Amazon Bedrock Agents and, more specifically, Amazon Bedrock AgentCore.

AgentCore is a serverless runtime environment designed specifically for agentic workloads, offering fast cold starts, session isolation, and extended runtimes up to 8 hours — capabilities that traditional serverless functions often lack.

Building Specialized Agent Teams: Collaboration Patterns

As tasks grow more complex, the idea of a single monolithic agent that knows and does everything becomes inefficient and hard to maintain. An overloaded prompt is more likely to contain contradictory instructions, resulting in unpredictable behavior.

A more powerful approach is to build a system of smaller, specialized agents. Each agent is designed with a clear purpose, defined by a specific system prompt, a toolset limited to its domain, and a focused contextual scope. This is a solution that’s easier to define and maintain over time.

Practical Example: A Virtual Project Team

Let’s imagine we want to build an AI system to help with software project management and operations. Instead of using one single agent, we’ll create a team of specialists:

- "Project Manager" Agent: acts as the main coordinator, ensuring the technical work aligns with the strategic business goals.

- "Operations Engineer" Agent: specializes in infrastructure and system stability.

Orchestration and Collaboration: How Do Agents Communicate?

With specialized agents defined, the next question is how to orchestrate their collaboration. Strands proposes a set of primitives for multi-agent interaction.

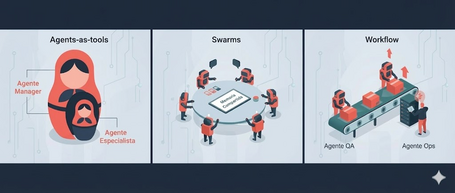

- Agents-as-Tools (Hierarchical Delegation): This is the simplest pattern. One agent (the “manager”) invokes another (the “specialist”) as if it were just another tool. In our example, the project_manager_agent can delegate a diagnostic task to the operations_agent via a direct call. Strands handles context passing and response.

- Swarms (Autonomous Collaboration): For complex, unstructured problems that benefit from brainstorming or parallel exploration, the Swarm pattern is ideal. A group of agents works together in a shared memory space. The workflow emerges from their collaborative decisions.

- Workflows (Defined Sequences): For predictable, structured processes, defined workflows are preferred. For example, a software deployment process may require a “QA” agent to run tests and give approval before the “Operations” agent proceeds with production. This pattern ensures steps follow a predefined order.
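
Two of the patterns above, Agents-as-Tools and Workflows, can be sketched with plain functions standing in for agents. This is a stdlib-only illustration under stated assumptions, not the Strands multi-agent API: `AgentFn`, `make_manager`, and `release_workflow` are hypothetical, and keyword checks replace the decisions a real LLM would make. Swarms are left out because their behavior depends on live model reasoning.

```python
from typing import Callable, Dict, List

AgentFn = Callable[[str], str]  # hypothetical: an agent maps a task to a reply


def operations_agent(task: str) -> str:
    return f"ops handled: {task}"


def qa_agent(task: str) -> str:
    # Stand-in rule: only changes marked as tested get approved.
    return "approved" if "tested" in task else "rejected"


def make_manager(specialists: Dict[str, AgentFn]) -> AgentFn:
    """Agents-as-tools: the manager calls specialists like ordinary tools."""

    def project_manager_agent(task: str) -> str:
        # A real manager's LLM would pick the specialist; a keyword stands in.
        chosen = "operations" if "outage" in task else "qa"
        return f"pm delegated to {chosen}: {specialists[chosen](task)}"

    return project_manager_agent


def release_workflow(task: str) -> List[str]:
    """Workflow: QA must approve before operations is allowed to run."""
    verdict = qa_agent(task)
    log = [f"qa: {verdict}"]
    if verdict == "approved":
        log.append(operations_agent(task))
    return log


manager = make_manager({"operations": operations_agent, "qa": qa_agent})
delegated = manager("outage in checkout")
released = release_workflow("tested build 1.4")
blocked = release_workflow("build 1.5")
```

In the first pattern the route is chosen at call time by the manager; in the second the order is fixed in code and the QA step acts as a gate.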

Strands in the Ecosystem: Comparative Analysis for Architects

Choosing a framework is a critical decision. To help architects position Strands, here’s a comparison with other popular alternatives, focusing on paradigm, control, and ideal use case.

Strands vs. LangChain / LangGraph

The fundamental difference is philosophical. LangChain and LangGraph follow a developer-driven paradigm, where the development team explicitly defines control flow as a chain or graph.

Strands is model-driven: the team defines the capabilities (tools) and the goal (prompt), and the LLM dynamically decides the execution path. LangGraph offers granular control ideal for auditable business processes, at the cost of increased complexity. Strands shines in autonomous and resilient scenarios, where the agent must adapt to unforeseen conditions.
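
The contrast between the two paradigms can be shown in a few lines. In the stdlib-only sketch below, `developer_driven` hard-codes the execution path, while `model_driven` loops and asks a model for the next tool until it decides to stop. Everything here is hypothetical: `stub_model` is a deterministic stand-in for an LLM, and the tools return canned strings.

```python
from typing import Callable, Dict, List, Optional


def check_logs(task: str) -> str:
    return "error rate is high"


def restart_service(task: str) -> str:
    return "service restarted"


TOOLS: Dict[str, Callable[[str], str]] = {
    "check_logs": check_logs,
    "restart_service": restart_service,
}


def developer_driven(task: str) -> List[str]:
    """The developer fixes the path in code: always logs, then restart."""
    return [check_logs(task), restart_service(task)]


def stub_model(task: str, observations: List[str]) -> Optional[str]:
    """Stand-in for an LLM: returns the next tool name, or None when done."""
    if not observations:
        return "check_logs"
    if "high" in observations[-1]:
        return "restart_service"
    return None


def model_driven(task: str, max_steps: int = 5) -> List[str]:
    """The loop only supplies tools; the model chooses the path at runtime."""
    observations: List[str] = []
    for _ in range(max_steps):
        tool_name = stub_model(task, observations)
        if tool_name is None:
            break
        observations.append(TOOLS[tool_name](task))
    return observations


fixed_path = developer_driven("checkout is slow")
chosen_path = model_driven("checkout is slow")
```

Both calls produce the same steps for this input, but only the second would change course if `check_logs` reported something different, which is the adaptability the model-driven approach trades against explicit, auditable control flow.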

Strands vs. n8n

This comparison pits a code-first SDK (Strands) against a visual automation platform (n8n).

While n8n excels at connecting services quickly through a vast library of prebuilt connectors and a graphical UI, there are two key differences for development teams:

First, n8n is distributed under a fair-code license: the source is available, but commercial use is restricted, so professional use often requires a paid plan. Second, n8n workflows run only on n8n servers (cloud or self-hosted), while Strands is a standard Python library that runs anywhere Python runs. Strands also fits directly into standard software engineering practices (version control, CI/CD, testing), making it a natural fit for development teams.

Strands vs. Amazon Bedrock Agents

This is a comparison between an open-source SDK and a fully managed PaaS.

Strands is a library the development team runs on their own infrastructure, giving full control over the environment, dependencies, and logic. Bedrock Agents, on the other hand, are a serverless service offering maximum convenience, with AWS managing all infrastructure at the cost of reduced flexibility.

Strands’ biggest advantage is flexibility — it can quickly integrate emerging standards like MCP and A2A, enabling future-ready architectures while still benefiting from AWS services when desired.

Strands as the Future Standard of Agentic Development

Strands represents a significant evolution in agentic AI development, marking a shift from rigid, preprogrammed workflows to true model-driven autonomy.

By focusing on model, tools, and prompt, it dramatically reduces complexity in building powerful, resilient agents. Offloading orchestration to the LLM’s reasoning engine accelerates the development cycle.

The SDK’s open and agnostic architecture is perhaps its most strategic asset. Free from the constraints of a single model provider or cloud platform, Strands positions itself as a universal language for agent development.

This flexibility, combined with a production-ready design — including native observability via OpenTelemetry and clear deployment patterns — makes it an appealing choice for both agile startups and large enterprises aiming to build robust, maintainable AI systems.

In the coming weeks, we’ll publish a hands-on guide to get started. Let us know your thoughts in the comments! 👇
