Lately we hear more and more about artificial intelligence, which is clearly here to stay.

We find it in our homes, close at hand, in televisions, automation, apps… Companies increasingly use it in fields such as customer care and the study of consumer behaviour.

Some are already talking about a new industrial revolution, but how did we reach this point? What is the history of artificial intelligence? Which events and technologies made it possible to finally arrive at the golden age of AI?

Origins

The origin of artificial intelligence as a discipline dates back to 1956, to a workshop held at Dartmouth College. For several weeks this meeting brought together some of the most prominent mathematicians and scientists of the time, such as Marvin Minsky and John McCarthy (inventor of the Lisp language), who shared their ideas under the banner of “Artificial Intelligence”, a term McCarthy had coined in the proposal for the event.

[caption id="" align="aligncenter" width="400"]

Some of the participants celebrating the 50th anniversary of the Dartmouth conference in 2006[/caption]

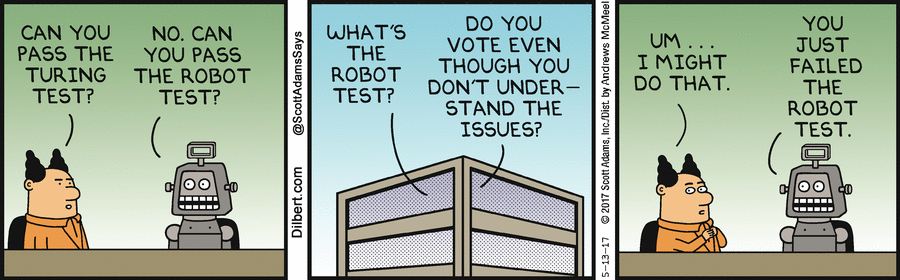

However, there are relevant precedents, such as the Turing test, proposed by Alan Turing in 1950. It examines the idea of thinking machines and whether a human and a machine could be told apart in a teleprinter conversation.

Winters and springs

Following the Dartmouth conference, interest in the study of artificial intelligence soared. Some of the early results were impressive, such as the advances in natural language processing and in problem-solving systems based on heuristics. Expectations were high and investment multiplied. At the time, agencies such as DARPA invested millions of dollars in the field.

However, the results fell short. Although great academic advances were made and the foundations were laid for what was to come, expectations had been set too high and the difficulty of the challenges ahead had been underestimated.

The technology was immature in many respects. So in the early 1970s a crisis known as “the first winter of artificial intelligence” arrived, with reduced investment and slower progress. Most artificial intelligence projects were no more than “toys” or proofs of concept.

[caption id="" align="aligncenter" width="590"]

Still from the film '2001: A Space Odyssey' (1968)[/caption]

In the 1980s the field of artificial intelligence revived, driven mainly by “expert systems” which, thanks to complex rules engines, tried to model the knowledge of an expert in a very specific area.

These systems were deployed in the business world, but their adoption was very limited and they did not have a great impact on people's lives. They were very far from the uses imagined by the cinema of the time in films such as “2001: A Space Odyssey” or “Blade Runner”.

Thus came the “second winter”, marked by widespread scepticism about whether artificial intelligence could have real applications.

In the 1990s progress was modest and found little use in real products or services. One of the most remarkable events was the victory of IBM's Deep Blue over Garry Kasparov, the world chess champion, in 1997.

Nevertheless, something was changing. It was the beginning of the internet era, and the birth of large companies such as Google and Amazon, together with new free technologies such as GNU/Linux, would make the difference and change the world.

The boom of Big Data

Finally, around 2010, a series of events had a radical impact on the history of artificial intelligence.

The first was the definitive take-off of cloud technologies, which became established as the new paradigm for building digital products. Within a few years, enormous computing and storage power became available at a very affordable price and with minimal effort.

In addition, a whole new ecosystem of Big Data technologies emerged: new tools which, building on this storage and computing power, were able to ingest and process large volumes of data, at times unstructured, at great speed.

The pervasiveness of mobile devices, IoT and social networks has given us access to new and massive sources of data, and thanks to Big Data we have the ability to exploit them.

Finally, the Internet has made it possible to work in a much more agile fashion, and to collaborate and share knowledge. Thanks to open source and open data we have access to algorithms that not long ago seemed like science fiction.

These major disruptions have caused research and progress in artificial intelligence to soar in the last few years, specifically in the areas of Machine Learning and Deep Learning, where algorithms are able to learn from data and carry out intelligent tasks such as classifying, predicting and recognising patterns.

Big technology companies such as Google, Amazon, Facebook and Microsoft are betting heavily on this area, and in the past few years we have witnessed real progress in everyday products and services, for example intelligent voice assistants such as Siri or Alexa, or autonomous and assisted vehicles and drones such as Google's or Tesla's.

Conclusions and future

It looks as though artificial intelligence has finally reached maturity. Every day fascinating new applications appear, but so do new fears and uncertainties. How far will machine intelligence go? Will work be carried out by intelligent machines, or will new jobs for people emerge thanks to artificial intelligence? Could artificial intelligence be a danger to humans?

Literature and cinema speculated a great deal about this in the 20th century, but the difference nowadays is that the debate is no longer science fiction; it is out in the street. A good example is the clash of views on social media between Mark Zuckerberg, founder of Facebook, and Elon Musk, CEO of Tesla and SpaceX.

Other prominent figures such as Bill Gates and Stephen Hawking have joined this public debate. Undoubtedly there are ethical aspects to be considered and risks that cannot be ignored.
