The problem: why do we need resilience?

In distributed systems, applications depend on multiple external services (APIs, databases, etc.). A failure in one of these services can cause a cascading failure, where the slowness or outage of a single component blocks resources and brings down the entire application.

Common problems

- Cascading failures: a slow service (e.g., a payment API) consumes all the application threads, leaving it unable to respond to other requests.

- Infinite retries: a poorly designed retry strategy can further overload an already failing service and eventually cause a complete system outage.

- No plan B (Fallback): if a non-critical service (e.g., a suggestions or recommendations service) fails, the application returns a 500 error to the user instead of offering a more graceful alternative.

- Wasted resources: calls continue to be made to a service that is known to be down, unnecessarily waiting for timeouts.

The goal of Resilience4j is to equip applications with the ability to withstand, adapt to, and recover from these failures without collapsing, improving user experience and overall system stability.

Evolution of Resilience4j within Spring Boot

Resilience4j has evolved significantly since its creation in 2015. The library moved from being an experimental project to becoming the de facto standard for fault tolerance in Spring Boot applications. The most important milestone was version 2.0.0 (2022), which raised the minimum requirement to Java 17 and added a dedicated Spring Boot 3 module (resilience4j-spring-boot3) alongside the existing Spring Boot 2 module (resilience4j-spring-boot2).

Its current version, 2.3.0 (2025), offers native integration with Spring Boot 3.2+, full support for reactive programming, and enhanced observability with Actuator and Micrometer. It has become a mature and stable solution for implementing resilience patterns (Circuit Breaker, Retry, Rate Limiter, Bulkhead, Time Limiter) in modern microservice architectures.

Core concepts of Resilience4j

Resilience4j implements several resilience patterns. It’s important to note that this library and its strategies can be applied to systems with external dependencies regardless of their design (monoliths, microservices, etc.).

That said, it is an especially good fit for microservices, which depend on many network calls to other services. The most important patterns are:

Circuit Breaker

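A circuit breaker stops calling a service that keeps failing, so requests fail fast instead of piling up while waiting for timeouts. It moves between three states: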

- CLOSED: normal state. Requests pass through to the service. If the failure rate reaches or exceeds a threshold (e.g., 50% of the last 100 calls), the circuit opens.

- OPEN: requests fail immediately without calling the service, protecting resources. After a wait period (e.g., 30s), it transitions to HALF_OPEN.

- HALF_OPEN: allows a limited number of trial requests. If their failure rate stays below the threshold, the circuit closes; otherwise, it opens again.

Key configuration (application.properties):

```properties
# Circuit Breaker - Default settings
resilience4j.circuitbreaker.configs.default.slidingWindowSize=100
resilience4j.circuitbreaker.configs.default.minimumNumberOfCalls=10
resilience4j.circuitbreaker.configs.default.failureRateThreshold=50
resilience4j.circuitbreaker.configs.default.waitDurationInOpenState=60000
resilience4j.circuitbreaker.configs.default.permittedNumberOfCallsInHalfOpenState=10
resilience4j.circuitbreaker.configs.default.automaticTransitionFromOpenToHalfOpenEnabled=true
resilience4j.circuitbreaker.configs.default.registerHealthIndicator=true

# Circuit Breaker - Email Service (SendGrid)
resilience4j.circuitbreaker.instances.emailService.baseConfig=default
resilience4j.circuitbreaker.instances.emailService.failureRateThreshold=60
resilience4j.circuitbreaker.instances.emailService.waitDurationInOpenState=120000
```
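To make the state machine above concrete, here is a simplified, JDK-only sketch of a count-based circuit breaker. It is not Resilience4j's implementation: the class `SimpleCircuitBreaker` and its constructor are hypothetical, the parameter names simply mirror the properties above, and OPEN moves to HALF_OPEN lazily on the next call (as if `automaticTransitionFromOpenToHalfOpenEnabled` were false). Like Resilience4j, it opens when the failure rate reaches or exceeds the threshold, and only once the minimum number of calls has been recorded.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.LongSupplier;
import java.util.function.Supplier;

/** Simplified count-based circuit breaker (illustration only, not Resilience4j's code). */
public class SimpleCircuitBreaker {
    public enum State { CLOSED, OPEN, HALF_OPEN }

    private final int slidingWindowSize;
    private final int minimumNumberOfCalls;
    private final double failureRateThreshold;      // percentage, e.g. 50
    private final long waitDurationInOpenStateMs;
    private final int permittedNumberOfCallsInHalfOpenState;
    private final LongSupplier clockMs;             // e.g. System::currentTimeMillis

    private final Deque<Boolean> outcomes = new ArrayDeque<>(); // true = failed call
    private State state = State.CLOSED;
    private long openedAtMs;
    private int trialCallsPermitted;

    public SimpleCircuitBreaker(int slidingWindowSize, int minimumNumberOfCalls,
                                double failureRateThreshold, long waitDurationInOpenStateMs,
                                int permittedNumberOfCallsInHalfOpenState, LongSupplier clockMs) {
        this.slidingWindowSize = slidingWindowSize;
        this.minimumNumberOfCalls = minimumNumberOfCalls;
        this.failureRateThreshold = failureRateThreshold;
        this.waitDurationInOpenStateMs = waitDurationInOpenStateMs;
        this.permittedNumberOfCallsInHalfOpenState = permittedNumberOfCallsInHalfOpenState;
        this.clockMs = clockMs;
    }

    public synchronized State state() {
        return state;
    }

    public <T> T call(Supplier<T> supplier) {
        acquirePermission(); // throws if the circuit does not allow the call
        try {
            T result = supplier.get();
            onResult(false);
            return result;
        } catch (RuntimeException e) {
            onResult(true);
            throw e;
        }
    }

    private synchronized void acquirePermission() {
        if (state == State.OPEN) {
            if (clockMs.getAsLong() - openedAtMs < waitDurationInOpenStateMs) {
                throw new IllegalStateException("Circuit OPEN: call not permitted");
            }
            state = State.HALF_OPEN; // wait period elapsed: start trial calls
            outcomes.clear();
            trialCallsPermitted = 0;
        }
        if (state == State.HALF_OPEN) {
            if (trialCallsPermitted >= permittedNumberOfCallsInHalfOpenState) {
                throw new IllegalStateException("Circuit HALF_OPEN: no trial calls left");
            }
            trialCallsPermitted++;
        }
    }

    private synchronized void onResult(boolean failed) {
        if (state == State.OPEN) {
            return; // late result of a call started before the circuit opened
        }
        boolean halfOpen = state == State.HALF_OPEN;
        int capacity = halfOpen ? permittedNumberOfCallsInHalfOpenState : slidingWindowSize;
        outcomes.addLast(failed);
        if (outcomes.size() > capacity) {
            outcomes.removeFirst(); // keep only the last N outcomes
        }
        int required = halfOpen ? permittedNumberOfCallsInHalfOpenState : minimumNumberOfCalls;
        if (outcomes.size() < required) {
            return; // not enough calls recorded to compute a failure rate
        }
        long failures = outcomes.stream().filter(f -> f).count();
        double failureRate = 100.0 * failures / outcomes.size();
        if (failureRate >= failureRateThreshold) {
            state = State.OPEN;
            openedAtMs = clockMs.getAsLong();
            outcomes.clear();
        } else if (halfOpen) {
            state = State.CLOSED; // trial calls succeeded
            outcomes.clear();
        }
    }
}
```

With the default values above (window of 100, minimum of 10 calls, 50% threshold), ten consecutive failures are enough to open the circuit, because the failure rate is evaluated as soon as the minimum number of calls has been recorded. The clock is injected only so the wait period can be simulated; in application code it would simply be `System::currentTimeMillis`.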

Retry

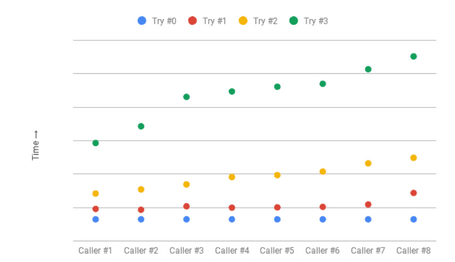

Retries an operation that has failed due to a temporary issue (e.g., a network timeout).

- Exponential backoff: increases the wait time between retries (1s, 2s, 4s...). This gives the service time to recover.

- Jitter (variation): adds a small random delay to the wait time to prevent all clients from retrying at the same time.
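The two techniques above can be sketched in plain Java as follows. This is an illustration, not Resilience4j's code: `BackoffRetry` and its parameters are hypothetical, although `maxAttempts` counts the initial call as it does in Resilience4j, and the jitter picks a delay uniformly within ±`randomizationFactor` of the backoff value. The sleep function is injected so the delays can be observed without actually waiting.

```java
import java.util.Set;
import java.util.concurrent.Callable;
import java.util.concurrent.ThreadLocalRandom;
import java.util.function.LongConsumer;

/** Retry with exponential backoff and jitter (illustration only, not Resilience4j's code). */
public final class BackoffRetry {
    private final int maxAttempts;             // includes the initial call
    private final long waitDurationMs;         // delay before the first retry
    private final double multiplier;           // exponential backoff multiplier
    private final double randomizationFactor;  // jitter: 0 = none, 0.5 = +/-50%
    private final Set<Class<? extends Exception>> retryExceptions;
    private final LongConsumer sleeper;        // e.g. a Thread.sleep wrapper

    public BackoffRetry(int maxAttempts, long waitDurationMs, double multiplier,
                        double randomizationFactor, Set<Class<? extends Exception>> retryExceptions,
                        LongConsumer sleeper) {
        this.maxAttempts = maxAttempts;
        this.waitDurationMs = waitDurationMs;
        this.multiplier = multiplier;
        this.randomizationFactor = randomizationFactor;
        this.retryExceptions = retryExceptions;
        this.sleeper = sleeper;
    }

    /** Delay before retry n (n = 1 for the first retry): base * multiplier^(n-1), +/- jitter. */
    public long delayBeforeRetry(int n) {
        double base = waitDurationMs * Math.pow(multiplier, n - 1);
        double delta = randomizationFactor * base;
        // Uniform pick in [base - delta, base + delta] so clients do not retry in lockstep
        return Math.round(base - delta + ThreadLocalRandom.current().nextDouble() * 2 * delta);
    }

    public <T> T call(Callable<T> operation) throws Exception {
        for (int attempt = 1; ; attempt++) {
            try {
                return operation.call();
            } catch (Exception e) {
                boolean retryable = retryExceptions.stream().anyMatch(type -> type.isInstance(e));
                if (!retryable || attempt >= maxAttempts) {
                    throw e; // definitive failure, or attempts exhausted
                }
                sleeper.accept(delayBeforeRetry(attempt));
            }
        }
    }
}
```

With maxAttempts=3, waitDuration=1000 and a multiplier of 2 (the default values in the configuration below) and no jitter, a call that keeps timing out is attempted three times, with waits of 1 s and 2 s in between. An exception that is not listed, such as an IllegalArgumentException, is rethrown immediately without any retry.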

Configuration:

```properties
# Retry - Default settings
# maxAttempts includes the initial call (3 = 1 call + 2 retries)
resilience4j.retry.configs.default.maxAttempts=3
resilience4j.retry.configs.default.waitDuration=1000
# The multiplier is only applied when exponential backoff is enabled
resilience4j.retry.configs.default.enableExponentialBackoff=true
resilience4j.retry.configs.default.exponentialBackoffMultiplier=2
resilience4j.retry.configs.default.retryExceptions=java.net.SocketTimeoutException,java.net.ConnectException

# Retry - Email Service
resilience4j.retry.instances.emailService.baseConfig=default
resilience4j.retry.instances.emailService.maxAttempts=2
resilience4j.retry.instances.emailService.waitDuration=2000
```

Never use automatic retries on non-idempotent operations, such as processing a payment, to avoid duplicates.
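When an operation must be repeatable, a common technique (mentioned again in the best practices below) is an idempotency key: the caller sends the same key on every attempt, and the receiving side runs the operation only once per key. The sketch below is a hypothetical, in-memory illustration (`IdempotentExecutor` is not part of Resilience4j); a real payment system would persist keys durably, for example behind a unique database constraint, and rely on the provider's own idempotency support where available.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

/**
 * Hypothetical in-memory idempotency guard: the operation runs at most once per key,
 * and repeated requests with the same key get the stored result.
 */
public final class IdempotentExecutor<R> {
    private final Map<String, R> results = new ConcurrentHashMap<>();

    public R execute(String idempotencyKey, Supplier<R> operation) {
        // computeIfAbsent runs the operation only when no result is stored for the key.
        // If the operation throws, nothing is stored, so a later attempt can try again.
        // ConcurrentHashMap advises keeping such computations short; durable storage
        // is the usual choice for long-running operations.
        return results.computeIfAbsent(idempotencyKey, key -> operation.get());
    }
}
```

A retried request with the same key (here the hypothetical "order-42") receives the stored result instead of triggering a second charge.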

Rate Limiter

Protects your API from an excessive number of requests, whether due to abuse or overload (e.g., Black Friday). It limits the number of requests within a given time period.

Key configuration:

```yaml
resilience4j.ratelimiter:
  instances:
    publicApi:
      limitForPeriod: 100     # 100 requests
      limitRefreshPeriod: 1s  # per second
      timeoutDuration: 0      # Reject immediately if exceeded
```
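Resilience4j's default rate limiter divides time into cycles of `limitRefreshPeriod` and resets the available permissions to `limitForPeriod` at the start of each cycle. The following simplified sketch reproduces that idea with a single counter; the class name and the injected clock are hypothetical. Like `timeoutDuration: 0`, it rejects a call immediately when no permission is left instead of waiting for the next cycle.

```java
import java.util.function.LongSupplier;

/**
 * Fixed-window rate limiter (illustration only): at most limitForPeriod calls per
 * limitRefreshPeriod; an exceeded call is rejected at once (no waiting).
 */
public final class FixedWindowRateLimiter {
    private final int limitForPeriod;
    private final long limitRefreshPeriodNanos;
    private final LongSupplier nanoClock;      // e.g. System::nanoTime
    private long currentCycle = Long.MIN_VALUE;
    private int permissionsLeft;

    public FixedWindowRateLimiter(int limitForPeriod, long limitRefreshPeriodNanos,
                                  LongSupplier nanoClock) {
        this.limitForPeriod = limitForPeriod;
        this.limitRefreshPeriodNanos = limitRefreshPeriodNanos;
        this.nanoClock = nanoClock;
    }

    /** Returns true if the call may proceed, false if it must be rejected. */
    public synchronized boolean tryAcquirePermission() {
        long cycle = Math.floorDiv(nanoClock.getAsLong(), limitRefreshPeriodNanos);
        if (cycle != currentCycle) {
            currentCycle = cycle;              // a new period started: refill permissions
            permissionsLeft = limitForPeriod;
        }
        if (permissionsLeft == 0) {
            return false;                      // limit reached for this period
        }
        permissionsLeft--;
        return true;
    }
}
```

With the values above, 150 requests arriving within the same second would see 100 accepted and 50 rejected; the next second starts with a fresh budget of 100.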

Bulkhead

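Isolates resources so that a slow service cannot monopolize all of the application's threads. It limits the number of concurrent calls to a specific service: if that service becomes saturated, only its “compartment” is affected, not the rest of the application. Resilience4j provides a semaphore-based bulkhead (configured below with maxConcurrentCalls) and a ThreadPoolBulkhead that runs calls on a dedicated thread pool.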

Key configuration:

```yaml
resilience4j.bulkhead:
  instances:
    emailService:
      maxConcurrentCalls: 30 # Max 30 simultaneous calls
```
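The semaphore-based variant can be sketched with `java.util.concurrent.Semaphore`. In this hypothetical `SemaphoreBulkhead`, a call that finds no free permit is rejected at once rather than queued (roughly what Resilience4j does with a `maxWaitDuration` of 0), so a saturated dependency can never tie up more than `maxConcurrentCalls` threads.

```java
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

/** Hypothetical semaphore-based bulkhead: caps the number of concurrent calls. */
public final class SemaphoreBulkhead {
    private final Semaphore permits;

    public SemaphoreBulkhead(int maxConcurrentCalls) {
        this.permits = new Semaphore(maxConcurrentCalls);
    }

    public <T> T call(Supplier<T> supplier) {
        // Reject immediately when all permits are taken instead of blocking the caller
        if (!permits.tryAcquire()) {
            throw new IllegalStateException("Bulkhead full: call rejected");
        }
        try {
            return supplier.get();
        } finally {
            permits.release(); // free the slot even if the call failed
        }
    }

    public int availablePermits() {
        return permits.availablePermits();
    }
}
```

For `emailService`, the 31st simultaneous call would be rejected while the other 30 are still in flight, leaving the rest of the application's threads free for other work.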

Time Limiter

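Sets a maximum duration for an operation. If the operation takes longer than the defined limit, the caller receives a TimeoutException instead of waiting indefinitely. In Spring Boot, the @TimeLimiter annotation applies to asynchronous return types such as CompletableFuture. Note that the underlying work is not necessarily stopped: Resilience4j cancels the running future by default (cancelRunningFuture), but cancelling a CompletableFuture does not interrupt the thread executing it.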

Key configuration:

```properties
# Time Limiter - Default Settings
resilience4j.timelimiter.configs.default.timeoutDuration=3000

# Time Limiter - Email Service
resilience4j.timelimiter.instances.emailService.timeoutDuration=15000
```
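The effect of a time limit on an asynchronous call can be reproduced with the JDK alone using `CompletableFuture.orTimeout` (Java 9+). The helper class below is hypothetical and is not how Resilience4j implements its TimeLimiter, but it shows the same trade-off: the caller stops waiting and receives a `TimeoutException`, while the underlying task keeps running on its thread.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;
import java.util.concurrent.Executor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.Supplier;

/** Hypothetical helper illustrating a time limit on an asynchronous call (JDK 9+). */
public final class TimeLimitedCall {
    private TimeLimitedCall() { }

    /**
     * Runs the task on the given executor; the returned future completes exceptionally
     * with a TimeoutException if the task has not finished within timeoutMs.
     * The task itself is NOT interrupted: it keeps running and its late result is ignored.
     */
    public static <T> CompletableFuture<T> supplyWithTimeout(Supplier<T> task, long timeoutMs,
                                                             Executor executor) {
        return CompletableFuture.supplyAsync(task, executor)
                .orTimeout(timeoutMs, TimeUnit.MILLISECONDS);
    }

    /** True if the failure (possibly wrapped in a CompletionException) is a timeout. */
    public static boolean isTimeout(Throwable failure) {
        Throwable cause = failure instanceof CompletionException && failure.getCause() != null
                ? failure.getCause() : failure;
        return cause instanceof TimeoutException;
    }
}
```

A caller would typically chain `handle` or `exceptionally` on the returned future to map the timeout to a fallback value.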

Practical implementation in Spring Boot

Maven Dependencies

```xml
<dependency>
    <groupId>io.github.resilience4j</groupId>
    <artifactId>resilience4j-spring-boot3</artifactId>
    <version>2.1.0</version>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-aop</artifactId>
</dependency>
```

Combined example: protecting a critical service

The real power of Resilience4j lies in combining patterns. This is an example of protecting a call to a payment service.

The first step is to state explicitly in the configuration that Resilience4j is enabled. This setting applies to all the patterns configured below:

```properties
resilience4j.auto-configuration.enabled=true

# Circuit Breaker - Payment Service
resilience4j.circuitbreaker.instances.paymentService.baseConfig=default
resilience4j.circuitbreaker.instances.paymentService.failureRateThreshold=40
resilience4j.circuitbreaker.instances.paymentService.waitDurationInOpenState=180000

# Time Limiter - Payment Service (10 seconds)
resilience4j.timelimiter.instances.paymentService.timeoutDuration=10000

# IMPORTANT: Do not configure Retry for payments.
# Payments should NOT be automatically retried to avoid duplicate charges.
```

Java code:

```java
import java.util.concurrent.CompletableFuture;

import io.github.resilience4j.circuitbreaker.annotation.CircuitBreaker;
import io.github.resilience4j.timelimiter.annotation.TimeLimiter;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.stereotype.Service;

// Domain types (Payment, PaymentMethod, PaymentStatus, PaymentRepository, OrderRepository,
// OrderMailPaymentService, PaymentMapper and the Redsys DTOs) belong to the application.

@Service
public class RedsysPaymentServiceImpl {

    private static final Logger logger = LoggerFactory.getLogger(RedsysPaymentServiceImpl.class);

    private final PaymentRepository paymentRepository;
    private final OrderRepository orderRepository;
    private final OrderMailPaymentService orderMailPaymentService;
    private final PaymentMapper paymentMapper; // maps Payment entities to response DTOs (type name assumed)

    // Constructor injection initializes the final fields
    public RedsysPaymentServiceImpl(PaymentRepository paymentRepository,
                                    OrderRepository orderRepository,
                                    OrderMailPaymentService orderMailPaymentService,
                                    PaymentMapper paymentMapper) {
        this.paymentRepository = paymentRepository;
        this.orderRepository = orderRepository;
        this.orderMailPaymentService = orderMailPaymentService;
        this.paymentMapper = paymentMapper;
    }

    /**
     * Processes a Redsys payment with full Resilience4j protection.
     *
     * Resilience4j:
     * - Circuit Breaker: Protects against failures in the payment service
     * - Retry: NOT used here (payments must not be retried automatically)
     * - Time Limiter: Fails the call with a TimeoutException if it takes longer than 10 seconds
     *   (@TimeLimiter requires an asynchronous return type such as CompletableFuture)
     * - Fallback: Marks the order as pending manual review
     */
    @CircuitBreaker(name = "paymentService", fallbackMethod = "fallbackProcessRedsysPayment")
    @TimeLimiter(name = "paymentService")
    public CompletableFuture<RedsysPaymentResponseDTO> processRedsysPayment(RedsysPaymentRequestDTO request) {
        // No executor is passed, so this task runs on ForkJoinPool.commonPool().
        // Caveat: a timeout does not interrupt this task, so it may still save a COMPLETED
        // payment after the fallback has marked the order as PENDING_PAYMENT.
        return CompletableFuture.supplyAsync(() -> {
            logger.info("[RedsysService] Processing payment for orderId: {}", request.getOrderId());

            // Simulate Redsys delay (3 seconds)
            try {
                Thread.sleep(3000);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new RuntimeException("Payment interrupted", e);
            }

            // Create and persist the payment
            Payment payment = Payment.builder()
                    .orderId(request.getOrderId())
                    .amount(request.getAmount())
                    .method(PaymentMethod.REDSYS)
                    .status(PaymentStatus.COMPLETED)
                    .transactionId("REDSYS-" + System.currentTimeMillis())
                    .build();
            Payment saved = paymentRepository.save(payment);

            // Update order status to COMPLETED
            orderRepository.findByOrderId(request.getOrderId()).ifPresent(order -> {
                order.setStatus("COMPLETED");
                orderRepository.save(order);
                logger.info("[RedsysService] Order updated to COMPLETED: {}", order.getOrderId());

                // Send confirmation email (protected with its own Circuit Breaker)
                orderMailPaymentService.sendOrderConfirmationEmail(order.getOrderId());
            });

            return paymentMapper.toRedsysPaymentResponseDTO(saved);
        });
    }

    /**
     * Fallback when the payment service fails (open circuit, timeout or any other exception).
     * It takes the same parameters as the protected method plus a trailing exception
     * parameter, and returns the same type.
     *
     * IMPORTANT: Payments must NOT be retried automatically to avoid duplicate charges.
     */
    private CompletableFuture<RedsysPaymentResponseDTO> fallbackProcessRedsysPayment(
            RedsysPaymentRequestDTO request, Exception e) {
        logger.error("⚠️ FALLBACK: Redsys payment service unavailable for orderId={}. Cause: {}",
                request.getOrderId(), e.getMessage(), e);

        // Mark the order as pending manual review
        orderRepository.findByOrderId(request.getOrderId()).ifPresent(order -> {
            order.setStatus("PENDING_PAYMENT");
            orderRepository.save(order);
            logger.warn("[RedsysService] Order marked as PENDING_PAYMENT for manual review: {}",
                    order.getOrderId());
        });

        // Create failed payment record for audit purposes
        Payment failedPayment = Payment.builder()
                .orderId(request.getOrderId())
                .amount(request.getAmount())
                .method(PaymentMethod.REDSYS)
                .status(PaymentStatus.FAILED)
                .providerResponse("Service temporarily unavailable: " + e.getMessage())
                .build();
        paymentRepository.save(failedPayment);

        // Return response indicating the payment is pending
        RedsysPaymentResponseDTO fallbackResponse = new RedsysPaymentResponseDTO();
        fallbackResponse.setStatus(PaymentStatus.PENDING);
        fallbackResponse.setMessage(
                "The payment service is currently unavailable. " +
                "Your order has been saved and will be processed manually. " +
                "You will receive a confirmation email shortly."
        );
        return CompletableFuture.completedFuture(fallbackResponse);
    }
}
```

Benefits of this combination

There are two main benefits:

- Complementary protection: the TimeLimiter prevents long waits, and the CircuitBreaker stops sending requests to the service after repeated failures.

- Graceful degradation: the fallbackMethod ensures the system continues to operate in a controlled manner instead of failing completely.
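One common form of graceful degradation is a fallback that serves the last successful response and, failing that, a default value. The sketch below is plain Java with hypothetical names (`CachingFallback` and the recommendations lookup in the example); in a Spring Boot application, the same logic would typically live inside a `fallbackMethod`.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/**
 * Hypothetical wrapper: calls the primary lookup and, if it fails, returns the last
 * successful value for the same key or, if there is none, a default value.
 */
public final class CachingFallback<K, V> {
    private final Function<K, V> primary;
    private final V defaultValue;
    private final Map<K, V> lastKnownGood = new ConcurrentHashMap<>();

    public CachingFallback(Function<K, V> primary, V defaultValue) {
        this.primary = primary;
        this.defaultValue = defaultValue;
    }

    public V get(K key) {
        try {
            V value = primary.apply(key);
            if (value != null) {
                lastKnownGood.put(key, value); // remember the latest good answer
            }
            return value;
        } catch (RuntimeException e) {
            // Degrade gracefully instead of propagating the error to the user
            return lastKnownGood.getOrDefault(key, defaultValue);
        }
    }
}
```

If a recommendations service goes down, a user who was already served gets their previous recommendations, and a new user gets a generic default list instead of a 500 error.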

Decision guide: which pattern should you use?

| If your service... | Recommended pattern | Example use case |
|---|---|---|
| Can go down completely or is highly unstable | Circuit Breaker + Fallback (mandatory) | Payment APIs, email services, external databases. |
| Suffers from temporary failures (e.g., network issues) | Retry (only for idempotent operations) | Database reads, query-based API calls. |
| Needs protection against overload or abuse | Rate Limiter | Public API endpoints, login endpoints. |
| Consumes many resources and can impact others | Bulkhead | Heavy operations, complex database queries. |
| Sometimes takes too long to respond | Time Limiter | Calls to third-party APIs without a clear SLA. |

Essential best practices

- Use meaningful fallbacks. A fallback should not simply throw another exception. It must provide a real alternative: return cached data, enqueue a task for later processing, or return a default value.

- Do not overuse retries. Use them only for transient failures and idempotent operations (those that can be safely repeated without side effects). Never retry a payment submission without an idempotency key.

- Configure specific exceptions. Define retryExceptions and ignoreExceptions to retry only failures that make sense (e.g., SocketTimeoutException) and not definitive ones (e.g., InsufficientFundsException).

- Set realistic timeouts. A timeout that is too short will cause unnecessary failures. Base your configuration on the observed latency of the service (e.g., the 99th percentile).

- Monitor your patterns. Resilience4j integrates with Spring Boot Actuator (/actuator/circuitbreakers, /actuator/retries); these endpoints must be exposed through management.endpoints.web.exposure.include. Use these metrics to visualize the state of your services in tools like Prometheus and Grafana, and fine-tune your configuration accordingly.
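To base a timeout on observed latency, as suggested above, you first need the percentile itself. A minimal nearest-rank calculation over a set of latency samples could look like this; the class and method names are hypothetical, and in production these figures would more likely come from your metrics (for example, Micrometer histograms) than from a hand-written helper.

```java
import java.util.Arrays;

/** Hypothetical latency helper: nearest-rank percentile of observed latencies. */
public final class LatencyStats {
    private LatencyStats() { }

    /**
     * Returns the nearest-rank p-th percentile (0 < p <= 100) of the samples,
     * i.e. the smallest sample such that at least p% of the samples are <= it.
     */
    public static long percentile(long[] latenciesMs, double p) {
        if (latenciesMs.length == 0) throw new IllegalArgumentException("no samples");
        if (p <= 0 || p > 100) throw new IllegalArgumentException("p must be in (0, 100]");
        long[] sorted = latenciesMs.clone();                    // keep the caller's array intact
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p * sorted.length / 100.0);  // 1-based rank
        return sorted[rank - 1];
    }
}
```

The resulting p99 is a starting point for `timeoutDuration`, with some headroom above it so that normal slow requests are not cut off.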

Conclusion

In microservice architectures, integrating Resilience4j is not optional: it is a necessity. It allows you to move from a fragile system that fails completely to a robust and resilient one that can gracefully withstand dependency failures.

Remember: it’s not a question of IF an external service will fail, but WHEN. Be prepared.

With patterns such as Circuit Breaker, Retry, and Fallback, you can build applications that not only survive failures but recover automatically, ensuring a better user experience and greater peace of mind for development teams.

Additionally, in my infinia-sports project, I ran Resilience4j load tests with Gatling and generated reports that show a significant improvement in system behavior.
