The emergence of the Internet more than two decades ago transformed business models, and in recent years data has become especially important for making decisions about the future of companies.

Along these lines, for some years now we have been hearing the term Big Data more and more often, but do we really know what it means?

Big Data

When we talk about Big Data, we refer to the large volumes of data, both structured and unstructured, that are generated and stored every day. However, what really matters is not the amount of data we have but what we do with it: the decisions we make to improve our business based on the knowledge obtained from analyzing that data.

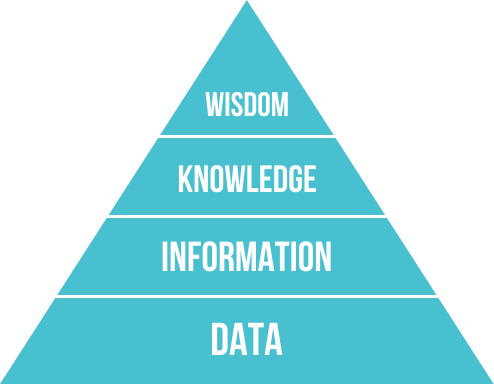

Directly related to this concept, we can find the DIKW pyramid which establishes that information, knowledge and wisdom are defined based on the data as we see in the following image:

Image 1. The DIKW pyramid (wikipedia.org)

Big Data projects are built on distributed file systems, in many cases on HDFS (Hadoop Distributed File System), the distributed storage system of the Hadoop ecosystem.

HDFS is a distributed, scalable and portable file system written in Java, originally designed to work with Hadoop, an open source framework for developing distributed applications inspired by Google's papers on the Google File System (GFS) and MapReduce.

For years, Hadoop was the most widely used framework for Big Data projects, and it has been the key element in the field's evolution to the point where it is today.

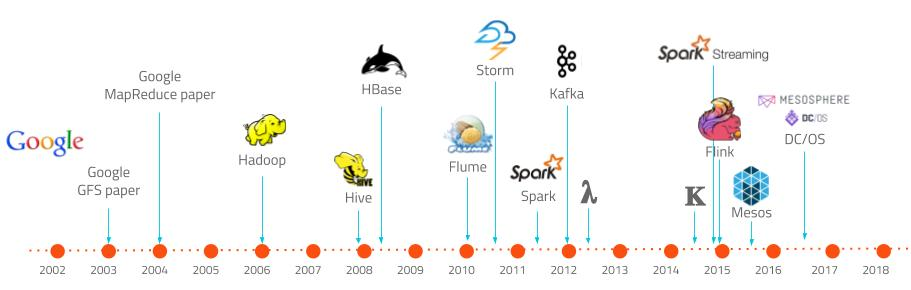

To place in time the appearance of Hadoop and others strongly related to Big Data we will use the following image:

[caption id="" align="aligncenter" width="911"]

Timeline of Big Data technologies[/caption]

Besides the technologies of the Hadoop ecosystem (Hadoop, Hive, HBase, etc.), Spark stands out for the decisive role it has played in the evolution of Big Data.

Spark is a distributed data processing engine capable of handling large volumes of information. It can be seen as an evolution of Hadoop MapReduce, and its advantages over MapReduce include the following:

- It processes data in memory, whereas MapReduce works with data stored on disk; this makes Spark faster at the cost of higher resource consumption.

- It supports near-real-time (streaming) processing.

- It includes extension modules for machine learning (MLlib), streaming, graph processing (GraphX), data access (Spark SQL) and more.

- It supports several programming languages, such as Scala, Java, Python and R.

In the timeline above, two Greek letters appear, in 2012 and 2014; they give their names to the architectures we are going to discuss next.

Lambda and Kappa Architectures

As companies handle ever-growing volumes of data and need to analyze it and extract value from it as quickly as possible, new architectures have had to be defined to cover use cases that the existing ones did not.

There are two main architectures in these projects: the Lambda Architecture and the Kappa Architecture. The main difference between them lies in the data processing flows involved; below we look at each one in more detail.

Before looking at the characteristics of each one, we need to define two concepts: batch processing and streaming processing.

- Batch processing refers to a process that operates on a fixed set of data and has a defined beginning and end in time.

- Streaming processing, on the other hand, continuously receives and processes new information as it arrives, with no defined end in time.
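To make the distinction concrete, here is a minimal Python sketch; the function names and the `endless_feed` source are hypothetical, chosen only for illustration. The batch function receives the whole data set and returns a single result, while the streaming function emits an updated result after every event and is written so that it would keep running on a source that never ends.

```python
import itertools
from typing import Iterable, Iterator


def batch_total(amounts: list[float]) -> float:
    # Batch: the whole, fixed data set is available up front; one result, then it ends
    return sum(amounts)


def streaming_totals(events: Iterable[float]) -> Iterator[float]:
    # Streaming: handle each event as it arrives and emit an updated result;
    # the loop never assumes the input will end
    running_total = 0.0
    for amount in events:
        running_total += amount
        yield running_total


def endless_feed() -> Iterator[float]:
    # Hypothetical unbounded source that keeps producing values forever
    for n in itertools.count(1):
        yield float(n)


print(batch_total([10.0, 20.0, 30.0]))  # 60.0
# Take only the first five results from a stream that would otherwise never stop
print(list(itertools.islice(streaming_totals(endless_feed()), 5)))  # [1.0, 3.0, 6.0, 10.0, 15.0]
```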

Lambda architecture

The Lambda Architecture, represented by the Greek letter λ, appeared in 2012 and is attributed to Nathan Marz. He defined it based on his experience with distributed data processing systems while working at BackType and Twitter, and it is inspired by his article “How to beat the CAP theorem”.

Its objective was a robust system, tolerant of both human error and hardware failures, that was linearly scalable and allowed reads and writes with low latency.

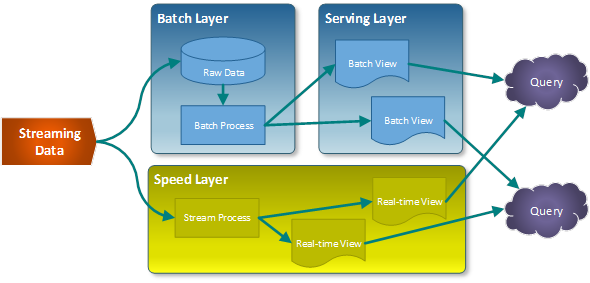

Nathan gives the solution to this problem by creating an architecture whose high level diagram appears in the following image:

Image 2: Lambda architecture

The characteristics of the Lambda Architecture are:

- New information collected by the system is sent to both the batch layer and the streaming layer (called the Speed Layer in the previous image).

- The batch layer (Batch Layer) manages the raw information, which is never modified: new data is appended to the existing data. A batch process then runs over the full data set, and its results, the so-called Batch Views, are used by the layer that serves the data to expose the already transformed information to the outside.

- The layer that serves the data, or Serving Layer, indexes the Batch Views generated in the previous step so that they can be queried with low latency.

- The streaming layer, or Speed Layer, compensates for the high latency of updates to the serving layer: it only takes into account new data not yet covered by the Batch Views and produces real-time views from it.

- Finally, queries are answered by combining the results of the Batch Views with the real-time views generated in the previous step.

In short, this architecture is characterized by using separate layers for batch and streaming processing.
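The following Python sketch illustrates how the layers interact, under strong simplifying assumptions: the use case (counting views per page) and the `LambdaPipeline` class with its methods are hypothetical, everything lives in memory, and a batch run is treated as instantaneous, so no data arrives while it executes. In a real deployment each layer would be a separate system.

```python
from collections import Counter


class LambdaPipeline:
    """Toy Lambda Architecture that counts views per page (hypothetical use case)."""

    def __init__(self) -> None:
        self.master_dataset: list[str] = []  # batch layer: raw data, append-only, never modified
        self.batch_view: Counter = Counter()  # serving layer: precomputed batch view, indexed by page
        self.realtime_view: Counter = Counter()  # speed layer: data not yet in the batch view

    def ingest(self, page: str) -> None:
        # New data goes to both the batch layer and the speed layer
        self.master_dataset.append(page)
        self.realtime_view[page] += 1

    def run_batch(self) -> None:
        # Recompute the batch view from scratch over all raw data (high latency in practice).
        # Assumption: the run is instantaneous, so no data arrives while it executes.
        self.batch_view = Counter(self.master_dataset)
        # The batch view now covers everything, so the speed layer can discard its state
        self.realtime_view = Counter()

    def query(self, page: str) -> int:
        # Answer by merging the batch view with the real-time view
        return self.batch_view[page] + self.realtime_view[page]


pipeline = LambdaPipeline()
for page in ["home", "about", "home"]:
    pipeline.ingest(page)
pipeline.run_batch()  # the batch view now covers the first three events
pipeline.ingest("home")  # so far, only the speed layer has seen this event
print(pipeline.query("home"))  # 3: 2 from the batch view + 1 from the real-time view
```

The query stays correct between batch runs precisely because the speed layer fills the gap left by the batch view's latency.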

Kappa architecture

The term Kappa Architecture, represented by the Greek letter κ, was introduced in 2014 by Jay Kreps in his article “Questioning the Lambda Architecture”.

In it, he points out possible weak points of Lambda and how to solve them through an evolution of the architecture. His proposal is to eliminate the batch layer, leaving only the streaming layer.

This layer, unlike the batch layer, has no beginning or end in time and continuously processes new data as it arrives.

Since a batch process can be understood as a bounded stream, we could say that batch processing is a subset of streaming processing.
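A small Python sketch of this idea, using a hypothetical `running_max` operator: when a streaming operator is fed a finite data set, its final output is the same value a batch job would compute over that set in one go.

```python
from typing import Iterable, Iterator


def running_max(events: Iterable[int]) -> Iterator[int]:
    # Streaming operator: after each event, emit the maximum seen so far
    current = None
    for value in events:
        current = value if current is None else max(current, value)
        yield current


readings = [4, 9, 2, 7]  # a bounded data set, i.e. a stream that eventually ends

# Feed the bounded stream to the streaming operator and keep its last output...
*_, last = running_max(iter(readings))
# ...which equals what a batch job computes over the complete data set
print(last, max(readings))  # 9 9
```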

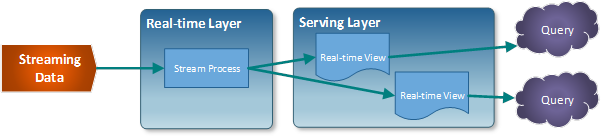

This evolution consists of a simplification of the Lambda architecture, in which the batch layer is eliminated and all the processing is done in a single layer called Real-time Layer, giving support to both batch and real-time processing.

The architecture diagram would be represented by the following image:

Image 3: Kappa architecture

We can say that its four main pillars are the following:

- Everything is a stream: batch operations are a subset of streaming operations, so everything can be treated as a stream.

- The source data is not modified: data is stored without being transformed, and views are derived from it. Any given state can be recalculated because the source information is never modified.

- There is a single processing flow: maintaining only one flow considerably reduces the amount of code, the maintenance effort and the work needed to update the system.

- A processing job can be re-launched: you can modify a specific process or its configuration and rerun it to obtain different results from the same input data.

As a prerequisite, the system must guarantee that events are read and stored in the order in which they were generated. This way, we can vary a specific processing job while working from the same version of the data.
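The following in-memory Python sketch illustrates these pillars. `EventLog`, `StreamJob` and the two processing functions are hypothetical names; `EventLog` stands in for a durable, ordered, append-only log (in practice a distributed log such as Apache Kafka usually plays this role). Re-launching a processing job is modeled as starting a new job with new logic from offset 0 over the same, unmodified log.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)  # frozen: events cannot be modified once stored
class Event:
    offset: int  # position in the log, reflecting the order of generation
    user: str
    amount: float


class EventLog:
    """Hypothetical in-memory stand-in for a durable, ordered, append-only log."""

    def __init__(self) -> None:
        self._events: List[Event] = []

    def append(self, user: str, amount: float) -> None:
        self._events.append(Event(len(self._events), user, amount))

    def read_from(self, offset: int) -> List[Event]:
        return self._events[offset:]


# Processing logic: folds one event into a view (a derived, materialized result)
Processor = Callable[[Dict[str, float], Event], None]


def total_per_user(view: Dict[str, float], event: Event) -> None:  # version 1
    view[event.user] = view.get(event.user, 0.0) + event.amount


def max_per_user(view: Dict[str, float], event: Event) -> None:  # version 2
    view[event.user] = max(view.get(event.user, event.amount), event.amount)


class StreamJob:
    """The single processing flow: reads the log in order from its own offset."""

    def __init__(self, log: EventLog, process: Processor, start_offset: int = 0) -> None:
        self.log = log
        self.process = process
        self.offset = start_offset
        self.view: Dict[str, float] = {}

    def poll(self) -> None:
        # Process every event appended since the last poll, in log order
        for event in self.log.read_from(self.offset):
            self.process(self.view, event)
            self.offset = event.offset + 1


log = EventLog()
job_v1 = StreamJob(log, total_per_user)
for user, amount in [("ana", 10.0), ("luis", 5.0), ("ana", 20.0)]:
    log.append(user, amount)
    job_v1.poll()  # the job keeps up with new events as they arrive
print(job_v1.view)  # {'ana': 30.0, 'luis': 5.0}

# Re-launch: run a new version of the logic from offset 0 over the same, unmodified log
job_v2 = StreamJob(log, max_per_user)
job_v2.poll()
print(job_v2.view)  # {'ana': 20.0, 'luis': 5.0}
```

In Kreps's description of reprocessing, once the new job has caught up, consumers switch to its output and the old job is stopped; in this sketch that would amount to replacing `job_v1` with `job_v2`.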

Which architecture best fits our needs?

Now that we have seen what each architecture consists of, comes the complicated part: deciding which one best fits our business model.

As in most cases, there is no single optimal solution for every problem, an idea usually summed up as "one size does not fit all". The Lambda Architecture is more versatile and can cover a greater number of cases, many of which even require real-time processing.

One question we must ask ourselves in order to decide is: are the analysis and processing carried out in the batch and streaming layers the same? If so, the most appropriate option would be the Kappa Architecture.

A real-world example of this architecture could be a system that geolocates users by their proximity to mobile phone antennas. Each time a user came within range of an antenna providing coverage, an event would be generated. This event would be processed in the streaming layer and used to draw on a map how the user has moved relative to their previous position.
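A minimal Python sketch of the streaming step in this example. The `AntennaEvent` fields, the flat coordinate grid and the straight-line distance are assumptions for illustration; a real system would work with geographic coordinates. The job keeps each user's last known position as state and, for every new event, emits what the map needs to draw the displacement.

```python
import math
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator, Tuple

Position = Tuple[float, float]  # assumption: antenna coordinates on a flat grid


@dataclass(frozen=True)
class AntennaEvent:
    user: str
    antenna_position: Position  # position of the antenna now covering the user


def track_displacements(
    events: Iterable[AntennaEvent],
) -> Iterator[Tuple[str, Position, Position, float]]:
    # State kept by the streaming job: the last known position of each user
    last_position: Dict[str, Position] = {}
    for event in events:
        previous = last_position.get(event.user)
        last_position[event.user] = event.antenna_position
        if previous is not None:
            # Emit where the user came from, where they are now and how far they moved
            moved = math.dist(previous, event.antenna_position)
            yield event.user, previous, event.antenna_position, moved


events = [
    AntennaEvent("u1", (0.0, 0.0)),
    AntennaEvent("u2", (5.0, 5.0)),
    AntennaEvent("u1", (3.0, 4.0)),
]
for displacement in track_displacements(events):
    print(displacement)  # ('u1', (0.0, 0.0), (3.0, 4.0), 5.0)
```

The first event for each user only initializes its state, since there is no previous position to compare against.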

However, at other times we will need to access the entire data set without penalizing performance, and here the Lambda Architecture can be more appropriate and even easier to implement.

We will also lean towards a Lambda Architecture if our batch and streaming algorithms generate very different results, as can happen with heavy processing operations or Machine Learning models.

A real use case for a Lambda Architecture could be a system that recommends books according to users' tastes. On the one hand, it would have a batch layer in charge of training the model and improving the predictions; on the other, a streaming layer capable of processing assessments (such as user ratings) in real time.

Conclusions

To conclude, we should point out how quickly the use cases we want to cover with our Big Data solutions evolve, which means we must adapt to them as soon as possible.

Each problem has its own particular conditions, and in many cases we will have to evolve the architecture we have been using so far. As the saying goes: "renew or die".
