Working with generative AI has both similarities and differences compared to traditional machine learning (ML) systems. Let’s break it down:

- Core Component: In ML, the trained model is the centerpiece. It is trained on data (e.g., tabular data, images, text) to perform a specific task such as classification, regression, or object detection. In generative AI, the model is also essential, but its output depends heavily on the prompt, because its purpose is not to solve one narrowly defined problem but to generate content (e.g., text, images, audio).

- Training: ML models are trained on large volumes of data, either labeled for supervised learning (e.g., images tagged as “cat” or “dog”) or unlabeled for unsupervised learning. Large language models (LLMs) are likewise trained on vast datasets. However, users typically don’t need to train these models from scratch. Instead, they can customize them through fine-tuning, which updates the model’s weights, or through prompt engineering, which leaves the weights unchanged.

- Evaluation: ML relies on standard metrics such as accuracy, recall, F1-score, or AUC-ROC, which compare model predictions against ground-truth labels in a test set. In generative AI, evaluation is more subjective and complex. Test datasets can still be used, but criteria such as coherence, creativity, relevance, and fluency also matter, and they are often assessed with human feedback. New evaluation metrics continue to emerge at a fast pace.

- Tools: For classic ML problems, tools like MLflow are popular for lifecycle management, including model versioning, experiment tracking, and deployment. Generative AI needs tools tailored to the unique aspects of LLM solutions, a practice we call LLM engineering. These tools manage elements such as production prompts, version history, call costs, evaluation datasets, and metrics linked to traces and prompts.
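To make the contrast in the Evaluation point concrete, here is a minimal sketch of the classic, label-based side: the metrics boil down to counting agreements between predictions and ground-truth labels on a test set. The labels are a made-up toy example and `binary_metrics` is a hypothetical helper (in practice, a library such as scikit-learn provides these metrics). No equally mechanical formula exists for coherence or fluency.

```python
def binary_metrics(y_true, y_pred, positive="cat"):
    """Accuracy, precision, recall and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Toy test set: ground-truth labels vs. model predictions
y_true = ["cat", "cat", "dog", "dog", "cat"]
y_pred = ["cat", "dog", "dog", "cat", "cat"]
print(binary_metrics(y_true, y_pred))
```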

Langfuse and LangSmith: Leading Tools in LLM Engineering

Two of the most recognized tools in LLM engineering today are Langfuse and LangSmith. In this series, we’ll compare their main functionalities, focusing on how each platform handles various tasks. To do so, we’ll use Python and integrate both with LangChain, one of the most widely used frameworks for building LLM applications.

Before diving in, note these key differences:

- LangSmith requires a license for commercial use, though it offers a robust free version.

- Langfuse can be self-hosted on your own servers or used under a paid license for managed services.

In this post, we’ll use LangSmith’s free version and a self-hosted instance of Langfuse.

Local Setup

LangSmith

Setting up LangSmith is straightforward. You’ll need a LangSmith API key (set through the LANGCHAIN_* environment variables, since LangSmith is fully integrated with LangChain) and an OpenAI API key (since we’ll use an OpenAI model in this example). Configure the required environment variables as follows:

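```python
import os

# LangSmith settings: enable tracing, authenticate, and choose the project traces go to
os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["LANGCHAIN_API_KEY"] = "YOUR_LANGCHAIN_API_KEY"
os.environ["LANGCHAIN_PROJECT"] = "default"
os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"

# Needed because the examples call an OpenAI model
os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_API_KEY"
```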

Langfuse

Setting up Langfuse involves a few additional steps:

- Clone the official Langfuse repository:

```bash
git clone https://github.com/langfuse/langfuse.git
```

- Deploy the necessary services locally (a PostgreSQL database and the Langfuse service) with Docker Compose:

```bash
cd langfuse
docker compose up
```

- Create an account and a project:

  - Open http://localhost:3000 in your browser.

  - Click Sign up, enter your details, and log in.

  - Create a new project by selecting New Project and naming it.

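- Generate a public/secret API key pair in the project settings and set them, together with the host, as environment variables so your code can connect to Langfuse:

```python
import os

# Point the Langfuse SDK at the local instance started with Docker Compose
os.environ["LANGFUSE_HOST"] = "http://localhost:3000"
os.environ["LANGFUSE_PUBLIC_KEY"] = "YOUR_LANGFUSE_PUBLIC_KEY"
os.environ["LANGFUSE_SECRET_KEY"] = "YOUR_LANGFUSE_SECRET_KEY"
os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_API_KEY"
```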
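Since both tools are configured purely through environment variables, a quick pre-flight check can save a confusing failed run. This is a minimal sketch: `missing_env_vars` is a hypothetical helper, and treating values that start with `YOUR_` as unfilled placeholders is an assumption based on the snippets above.

```python
import os

# Variables set in the setup snippets above, grouped by service
REQUIRED_VARS = {
    "LangSmith": ["LANGCHAIN_TRACING_V2", "LANGCHAIN_API_KEY", "LANGCHAIN_PROJECT", "LANGCHAIN_ENDPOINT"],
    "Langfuse": ["LANGFUSE_HOST", "LANGFUSE_PUBLIC_KEY", "LANGFUSE_SECRET_KEY"],
    "OpenAI": ["OPENAI_API_KEY"],
}


def missing_env_vars(required=REQUIRED_VARS, env=None):
    """Return {service: [names]} for variables that are unset or still placeholders.

    Assumption: placeholder values start with "YOUR_", as in the snippets above.
    """
    env = os.environ if env is None else env
    missing = {}
    for service, names in required.items():
        gaps = []
        for name in names:
            value = env.get(name, "")
            if not value or value.startswith("YOUR_"):
                gaps.append(name)
        if gaps:
            missing[service] = gaps
    return missing


for service, names in missing_env_vars().items():
    print(f"{service}: set {', '.join(names)}")
```

Passing a plain dictionary as `env` makes the check easy to test without touching the real environment.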

Prompt Versioning

In any ML-based application, there’s an experimentation phase to select the best model and a lifecycle management phase for monitoring and control. In an MLOps workflow, models are evaluated and versioned according to their performance, so changes can be tracked, compared, and rolled back over time.

For LLM-based solutions, the equivalent of an ML model is a prompt. Prompts go through an experimentation phase to establish a baseline and a subsequent monitoring phase to refine or revert as needed.

Both LangSmith and Langfuse enable prompt storage, versioning, and referencing for different application needs.
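To make the analogy with model versioning concrete, here is a toy, in-memory registry. It is hypothetical (`PromptRegistry` and its methods are our own names, not LangSmith’s or Langfuse’s API), but it mirrors the two retrieval styles used in the rest of this section: by version number or commit hash, and by label, where a label such as production points to exactly one version at a time.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptVersion:
    version: int
    commit: str  # short content hash, standing in for a commit hash
    template: str
    tags: list = field(default_factory=list)


class PromptRegistry:
    """Toy prompt registry: versions per name plus movable labels."""

    def __init__(self):
        self._versions = {}  # name -> list of PromptVersion
        self._labels = {}    # (name, label) -> version number

    def push(self, name, template, labels=(), tags=()):
        versions = self._versions.setdefault(name, [])
        commit = hashlib.sha256(template.encode()).hexdigest()[:8]
        pv = PromptVersion(len(versions) + 1, commit, template, list(tags))
        versions.append(pv)
        # A label points at one version only: reassigning it moves it
        for label in labels:
            self._labels[(name, label)] = pv.version
        return pv

    def get(self, name, version=None, label=None, commit=None):
        versions = self._versions[name]
        if version is not None:
            return versions[version - 1]
        if commit is not None:
            for pv in versions:
                if pv.commit == commit:
                    return pv
            raise KeyError(f"{name}:{commit}")
        if label is not None:
            return versions[self._labels[(name, label)] - 1]
        return versions[-1]  # default: latest version


registry = PromptRegistry()
registry.push("prompt_rag", "Answer from {context}: {question}", labels=["production"])
registry.push("prompt_rag", "Use {context} to answer {question}", labels=["staging"])
print(registry.get("prompt_rag").version, registry.get("prompt_rag", label="production").version)
```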

LangSmith

To upload a prompt to LangSmith, use the .push_prompt() method of the LangSmith client, passing a LangChain prompt template object (such as PromptTemplate or ChatPromptTemplate). The example below creates a ChatPromptTemplate with both a system and a user message:

```python
from langsmith import Client
from langchain_core.prompts import ChatPromptTemplate

system_prompt_template = """
You are a bot that responds to questions based on context provided by the user. The user message will have this format:

## CONTEXT ##
Knowledge to use for answering the question

## QUESTION ##
Question to answer

Keep in mind:
- Respond naturally. Avoid phrases like 'based on the provided context.'
- Restrict your answers strictly to the given context.
- Use information from the entire conversation if necessary.
"""

user_prompt_template = """
## CONTEXT ##
{context}

## QUESTION ##
{question}
"""

client = Client()

prompt = ChatPromptTemplate.from_messages([
    ("system", system_prompt_template),
    ("user", user_prompt_template),
])

client.push_prompt(
    "prompt_rag",
    object=prompt,
    description="Test prompt for RAG",
    tags=["first_prompt", "test_prompt"],
)
```

To upload a new version, modify the prompt template and re-upload:

```python
system_prompt_template = """
You are a bot that answers questions based on user-provided context. The user message will follow this format:

## CONTEXT ##
Knowledge to answer the question

## QUESTION ##
Question to answer
"""

prompt = ChatPromptTemplate.from_messages([
    ("system", system_prompt_template),
    ("user", user_prompt_template),
])

client.push_prompt(
    "prompt_rag",
    object=prompt,
    description="Updated prompt for RAG",
    tags=["RAG"],
)
```

By default, .pull_prompt() retrieves the latest version of a prompt. To access an earlier version, append its commit hash to the prompt name, separated by a colon:

```python
# Latest version
client.pull_prompt("prompt_rag")

# A specific earlier version, identified by its commit hash
client.pull_prompt("prompt_rag:5e12e879")
```

LangSmith’s prompt versioning lets you keep a history of the prompts used for different tasks within an application: you can access every version of a given prompt, tag versions, and more. However, prompts cannot be organized by project, so choose a distinctive naming convention, or use tags, to indicate which project each prompt belongs to.
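One way to apply such a convention is to derive prompt names from a project and a task, and to build the `name:commit` identifiers accepted by .pull_prompt() from them. The `<project>-<task>` scheme and the `prompt_identifier` helper are hypothetical, and restricting names to lowercase letters, digits, hyphens, and underscores is our own conservative choice rather than a documented LangSmith rule.

```python
import re


def _slug(text):
    """Lowercase text and collapse anything outside [a-z0-9_-] into a hyphen."""
    return re.sub(r"[^a-z0-9_-]+", "-", text.lower()).strip("-")


def prompt_identifier(project, task, commit=None):
    """Build a name such as 'rag-bot-prompt_rag', optionally pinned to a commit.

    Hypothetical convention: '<project>-<task>[:<commit>]'.
    """
    name = f"{_slug(project)}-{_slug(task)}"
    return f"{name}:{commit}" if commit else name


print(prompt_identifier("RAG Bot", "prompt_rag"))
```

The resulting string would be passed as the first argument of client.push_prompt() and client.pull_prompt(), with a commit hash from that prompt’s own history when pinning a version.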

Langfuse

In Langfuse, the main difference lies in the object passed to the .create_prompt() method: for a chat prompt, it is a list of dictionaries with the keys role and content, where each content value is one of the templates we have defined. You also specify a name for the prompt, an optional config describing how it is meant to be used (such as the model, temperature, or supported languages), and labels. Labels are unique within a prompt: each one points to a single version at a time, and production marks the version currently in production, which is the one .get_prompt() returns by default. You can also assign tags, which, unlike labels, are purely organizational and can be shared across prompts, and a type, text or chat.

```python
from langfuse import Langfuse

langfuse = Langfuse()

system_prompt_template = """
You are a bot that answers questions based on a context
provided by the user. The user's message will follow
this format:

## CONTEXT ##
Knowledge you will use to answer the question

## QUESTION ##
Question you must answer

Keep the following in mind:
- Respond as naturally as possible. Do not say "according to the
  provided context" or similar phrases.
- Limit your response strictly to the context provided
  by the user.
- If necessary, use information from the entire conversation to
  answer.
"""

# Langfuse marks template variables with double curly braces
user_prompt_template = """
## CONTEXT ##
{{context}}

## QUESTION ##
{{question}}
"""

prompt_registry = {
    "name": "prompt_rag",
    "prompt": [
        {"role": "system", "content": system_prompt_template},
        {"role": "user", "content": user_prompt_template},
    ],
    "config": {
        "model": "gpt-4o",
        "temperature": 0.1,
        "supported_languages": ["en"],
    },
    "labels": ["production", "staging", "latest"],
    "tags": ["RAG"],
    "type": "chat",
}

langfuse.create_prompt(**prompt_registry)
```
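Because the config travels with each prompt version, application code can read the model settings from the fetched prompt instead of hard-coding them. A minimal sketch, assuming the config keys used above; `model_settings_from_config` is a hypothetical helper, and the set of keys treated as generation parameters is our own choice.

```python
# Assumption: only these config keys are forwarded to the LLM constructor;
# anything else (e.g. supported_languages) is treated as application metadata.
GENERATION_KEYS = {"temperature", "max_tokens", "top_p"}


def model_settings_from_config(config, default_model="gpt-4o"):
    """Split a prompt config into a model name and LLM keyword arguments."""
    model = config.get("model", default_model)
    model_kwargs = {key: value for key, value in config.items() if key in GENERATION_KEYS}
    return model, model_kwargs


model, model_kwargs = model_settings_from_config(
    {"model": "gpt-4o", "temperature": 0.1, "supported_languages": ["en"]}
)
print(model, model_kwargs)
```

With a fetched prompt, this could be applied as `model_settings_from_config(langfuse.get_prompt("prompt_rag").config)`, passing the result to `ChatOpenAI(model=model, **model_kwargs)`.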

As with LangSmith, uploading a new version of the prompt only requires writing the new template, rebuilding the payload, and calling the method again:

```python
system_prompt_template = """
You are a bot that answers questions based on a context provided by the user. The user's message will follow this format:

## CONTEXT ##
Knowledge you will use to answer the question

## QUESTION ##
Question you must answer
"""

prompt_registry = {
    "name": "prompt_rag",
    "prompt": [
        {"role": "system", "content": system_prompt_template},
        {"role": "user", "content": user_prompt_template},
    ],
    "config": {
        "model": "gpt-4o",
        "temperature": 0.1,
        "supported_languages": ["es"],
    },
    "labels": ["production", "staging", "latest"],
    "tags": ["RAG"],
    "type": "chat",
}

langfuse.create_prompt(**prompt_registry)
```

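By default, .get_prompt() returns the version labeled production, which here is also the most recent one, since both .create_prompt() calls assigned that label. Specific versions can also be retrieved by number:

```python
# Version currently labeled "production"
langfuse.get_prompt("prompt_rag")

# A specific version by number
langfuse.get_prompt("prompt_rag", version=1)
```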

Unlike in LangSmith, this prompt is not a LangChain prompt object, so it must be converted before use with LangChain. Fortunately, the conversion is simple:

```python
from langchain_core.prompts import ChatPromptTemplate

# get_langchain_prompt() rewrites Langfuse's {{variable}} syntax as LangChain's {variable}
langchain_prompt = ChatPromptTemplate.from_messages(
    langfuse.get_prompt("prompt_rag").get_langchain_prompt()
)
```
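Conceptually, the conversion maps each role/content message to a (role, template) tuple and rewrites {{variable}} as {variable}. The sketch below approximates that in plain Python; it is not Langfuse’s implementation, and escaping literal braces (so that, for example, a JSON snippet inside a prompt is not mistaken for a variable) is our own addition that the SDK may or may not perform, depending on its version.

```python
import re

# Langfuse variables look like {{name}}; LangChain f-string templates use {name}
_LANGFUSE_VAR = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def _escape(literal):
    """Double literal braces so LangChain's f-string parser leaves them alone."""
    return literal.replace("{", "{{").replace("}", "}}")


def _convert(content):
    parts, last = [], 0
    for match in _LANGFUSE_VAR.finditer(content):
        parts.append(_escape(content[last:match.start()]))
        parts.append("{" + match.group(1) + "}")
        last = match.end()
    parts.append(_escape(content[last:]))
    return "".join(parts)


def to_langchain_messages(messages):
    """Convert Langfuse-style chat messages into (role, template) tuples."""
    return [(message["role"], _convert(message["content"])) for message in messages]


messages = [
    {"role": "system", "content": "Answer only from the context."},
    {"role": "user", "content": "## CONTEXT ##\n{{context}}\n\n## QUESTION ##\n{{question}}"},
]
print(to_langchain_messages(messages))
```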

Tracing LLM Calls

Tracing refers to the logging of all user interactions with the LLM. It’s a valuable feature because it allows you to monitor application usage, identify frequent errors, or detect if a specific user is leveraging the application unusually often (useful for spotting potential prompt hacking attempts). Tracing also provides insights into call durations, costs, and overall performance.

Additionally, traces can be “customized” by adding metadata (such as the LLM’s name) for each call. This enables differentiation between various LLM use cases, such as generating embeddings, calculating metrics, or producing responses. This way, each type of usage can be traced separately.

Both LangSmith and Langfuse support this tracing functionality.
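Once calls carry metadata, aggregating traces per use case is straightforward. The following sketch works on hypothetical trace records (a simplified shape of our own, not either tool’s export format) tagged with a `use_case` metadata key, and totals calls, tokens, and latency per tag; untagged calls fall into their own bucket.

```python
from collections import defaultdict

# Hypothetical trace records; real tools store far richer data per call
traces = [
    {"metadata": {"use_case": "response"}, "total_tokens": 20, "latency_s": 0.8},
    {"metadata": {"use_case": "response"}, "total_tokens": 30, "latency_s": 1.2},
    {"metadata": {"use_case": "metrics"}, "total_tokens": 15, "latency_s": 0.5},
    {"metadata": {}, "total_tokens": 5, "latency_s": 0.1},
]


def summarize_by(traces, key):
    """Total calls, tokens and latency per value of metadata[key]."""
    summary = defaultdict(lambda: {"calls": 0, "total_tokens": 0, "latency_s": 0.0})
    for trace in traces:
        group = trace.get("metadata", {}).get(key, "untagged")
        stats = summary[group]
        stats["calls"] += 1
        stats["total_tokens"] += trace["total_tokens"]
        stats["latency_s"] += trace["latency_s"]
    return dict(summary)


for use_case, stats in summarize_by(traces, "use_case").items():
    print(use_case, stats)
```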

LangSmith

In LangSmith, tracing is as simple as invoking a chain or an LLM, which automatically generates the trace. Let’s create the “Hello, World” example for LLM engineering:

```python
from langchain_openai import ChatOpenAI

llm = ChatOpenAI(model="gpt-4o")
llm.invoke("Hello, world!")
```

This generates a response like:

```text
AIMessage(content='Hello! How can I assist you today?', response_metadata={'token_usage': {'completion_tokens': 9, 'prompt_tokens': 11, 'total_tokens': 20, 'completion_tokens_details': {'reasoning_tokens': 0}}, 'model_name': 'gpt-4o', 'system_fingerprint': 'fp_3537616b13', 'finish_reason': 'stop', 'logprobs': None}, id='run-cf556208-5a03-4597-95b7-809623ec5a79-0', usage_metadata={'input_tokens': 11, 'output_tokens': 9, 'total_tokens': 20})
```

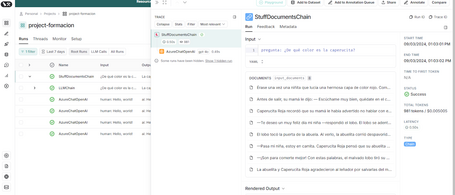

In LangSmith’s UI, it appears as follows:
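The usage_metadata in the response is what makes per-call cost tracking possible: the cost is essentially token counts multiplied by per-token prices. A minimal sketch of that arithmetic, where the prices are illustrative placeholders rather than real OpenAI pricing and `call_cost` is a hypothetical helper:

```python
# Illustrative placeholder prices in USD per million tokens (NOT real pricing;
# check your provider's current price list)
EXAMPLE_PRICES_PER_1M = {"gpt-4o": {"input": 2.50, "output": 10.00}}


def call_cost(usage_metadata, model, prices=EXAMPLE_PRICES_PER_1M):
    """Estimate the cost of one call from its input and output token counts."""
    rate = prices[model]
    return (
        usage_metadata["input_tokens"] * rate["input"]
        + usage_metadata["output_tokens"] * rate["output"]
    ) / 1_000_000


# Token counts taken from the usage_metadata of the response above
usage = {"input_tokens": 11, "output_tokens": 9, "total_tokens": 20}
print(f"${call_cost(usage, 'gpt-4o'):.7f}")
```

Input and output tokens are priced separately because providers typically charge different rates for them.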

For a more complex example, let’s load the story of Red Riding Hood from red_riding_hood.txt and ask the system a question about it. This is a simplified Retrieval-Augmented Generation (RAG) setup: for brevity, all chunks are passed to the model rather than only the most relevant ones. We’ll split the text, pass the chunks to a chain, and pose the question:

```python
from langchain.chains.question_answering import load_qa_chain
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.docstore.document import Document

# Reuses `client` (LangSmith) and `llm` (ChatOpenAI) from the previous snippets

# Load the story text
with open("red_riding_hood.txt", "r", encoding="utf-8") as f:
    red_riding_hood_story = f.read()

# Split the text into chunks of up to 500 characters, overlapping by 50
text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=50)
chunks = text_splitter.split_documents([Document(page_content=red_riding_hood_story)])

# Retrieve the prompt for the chain from LangSmith
langchain_prompt = client.pull_prompt("prompt_rag")

# Load a QA chain that "stuffs" all chunks into the {context} variable
chain = load_qa_chain(
    llm=llm,
    chain_type="stuff",
    prompt=langchain_prompt,
    document_variable_name="context",
)

# Invoke the chain with the chunks and the question
response = chain.invoke({
    "input_documents": chunks,
    "question": "What color is the riding hood?",
})
```
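To show what the individual steps amount to, here is a stdlib-only sketch of a minimal RAG pipeline. It is a simplification under stated assumptions: fixed-size chunking instead of RecursiveCharacterTextSplitter’s boundary-aware splitting, word overlap instead of embedding similarity for retrieval, and the "stuff" strategy of joining the selected chunks (with a "\n\n" separator, the default of LangChain’s StuffDocumentsChain) into the {context} slot. The function names and the story text are placeholders of our own.

```python
import re

USER_TEMPLATE = "## CONTEXT ##\n{context}\n\n## QUESTION ##\n{question}"


def chunk_text(text, chunk_size=500, chunk_overlap=50):
    """Fixed-size character chunks, each overlapping the previous by chunk_overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    if not text:
        return []
    step = chunk_size - chunk_overlap
    # Stop once a chunk has reached the end of the text
    return [text[i:i + chunk_size] for i in range(0, max(len(text) - chunk_overlap, 1), step)]


def _words(text):
    return set(re.findall(r"[a-z']+", text.lower()))


def retrieve(chunks, question, k=2):
    """Rank chunks by word overlap with the question (toy stand-in for embeddings)."""
    query = _words(question)
    return sorted(chunks, key=lambda c: len(query & _words(c)), reverse=True)[:k]


def stuff_prompt(docs, question, separator="\n\n"):
    """'Stuff' strategy: join every selected chunk into the context slot."""
    return USER_TEMPLATE.format(context=separator.join(docs), question=question)


story = (
    "Once upon a time, a girl wore a red riding hood. "
    "She walked through the forest to visit her grandmother. "
    "A wolf waited for her at the end of the path."
)
question = "What color is the riding hood?"
chunks = chunk_text(story, chunk_size=60, chunk_overlap=10)
print(stuff_prompt(retrieve(chunks, question, k=1), question))
```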

The traces visible in LangSmith’s portal would look like the following:

- Trace Details: Each stage of the chain invocation is listed, showing how the chunks were sent to the LLM.

- Internal View: For each stage, you can drill down to see the exact fragments sent to the LLM and its corresponding response.

LangSmith offers specific features tailored to the conversational nature of many LLM-based applications, allowing traces to be grouped into threads based on session IDs.
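In practice, this grouping relies on run metadata: LangSmith treats a session_id (or thread_id / conversation_id) metadata key as the thread identifier. The sketch below builds a LangChain-style config dictionary carrying that key, then mimics with hypothetical run records how runs that share an identifier end up in one thread; `thread_config` and `group_into_threads` are our own names.

```python
import uuid
from collections import defaultdict


def thread_config(session_id=None):
    """Config dict that tags a run with a session id (a new UUID if none is given)."""
    return {"metadata": {"session_id": session_id or str(uuid.uuid4())}}


def group_into_threads(runs):
    """Group logged runs by metadata['session_id'], keeping call order."""
    threads = defaultdict(list)
    for run in runs:
        threads[run["metadata"]["session_id"]].append(run["input"])
    return dict(threads)


# Hypothetical logged runs from two conversations
runs = [
    {"metadata": {"session_id": "a"}, "input": "hi"},
    {"metadata": {"session_id": "b"}, "input": "hello"},
    {"metadata": {"session_id": "a"}, "input": "and then?"},
]
print(group_into_threads(runs))
```

With the llm from earlier, the config would be passed as `llm.invoke("Hello, world!", config=thread_config("conversation-1"))`, reusing the same identifier for every turn of that conversation.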

Langfuse

Langfuse can also log LLM call traces, with detailed information about execution time and call costs. To integrate it with LangChain, you can either pass Langfuse’s callback handler to LangChain explicitly or use the observe decorator, which traces everything executed within the decorated function. This example uses the decorator and retrieves a LangChain handler linked to the decorated function’s trace:

```python
from langfuse import Langfuse
from langfuse.decorators import langfuse_context, observe
from langchain_openai import ChatOpenAI
from langchain_core.prompts import ChatPromptTemplate
from langchain.chains.question_answering import load_qa_chain

langfuse = Langfuse()

# Fetch the prompt created earlier and convert it to a LangChain prompt
prompt = langfuse.get_prompt("prompt_rag")
langchain_prompt = ChatPromptTemplate.from_messages(
    prompt.get_langchain_prompt()
)


@observe()
def generate_response(llm_model, query, chunks, model_kwargs):
    # LangChain callback handler attached to the trace opened by @observe()
    langfuse_handler = langfuse_context.get_current_langchain_handler()

    llm = ChatOpenAI(model=llm_model, **model_kwargs)
    chain = load_qa_chain(
        llm=llm,
        chain_type="stuff",
        prompt=langchain_prompt,
        document_variable_name="context",
    )
    response = chain.invoke(
        {"input_documents": chunks, "question": query},
        config={"callbacks": [langfuse_handler]},
    )
    return response


# `chunks` comes from the text-splitting step in the LangSmith example
generate_response(
    "gpt-4o",
    "What color is the riding hood?",
    chunks,
    model_kwargs={"temperature": 0.2},
)
```
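The nesting idea behind the decorator, where calls made inside an observed function appear as children of its trace, can be modeled in plain Python with contextvars. This is a conceptual sketch under our own assumptions (`observe_sketch`, `SPANS`, and the two toy functions are hypothetical), not Langfuse’s implementation.

```python
import contextvars
import functools
import itertools
import time

SPANS = []  # finished spans, in completion order (stand-in for a tracing backend)
_span_ids = itertools.count(1)
_current_span = contextvars.ContextVar("current_span", default=None)


def observe_sketch(func):
    """Record one span per call, linked to the enclosing observed call, if any."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        span = {"id": next(_span_ids), "name": func.__name__, "parent_id": _current_span.get()}
        token = _current_span.set(span["id"])  # calls made inside func become children
        start = time.perf_counter()
        try:
            span["output"] = func(*args, **kwargs)
            return span["output"]
        finally:
            span["duration_s"] = time.perf_counter() - start
            _current_span.reset(token)
            SPANS.append(span)

    return wrapper


@observe_sketch
def retrieve_chunks(query):
    return ["She wore a red riding hood."]


@observe_sketch
def answer(query):
    chunks = retrieve_chunks(query)
    return f"Answer based on {len(chunks)} chunk(s)"


answer("What color is the riding hood?")
for span in SPANS:
    print(span["name"], "parent:", span["parent_id"])
```

Using a context variable rather than a global keeps the parent pointer correct even when observed functions run concurrently in different threads or async tasks.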

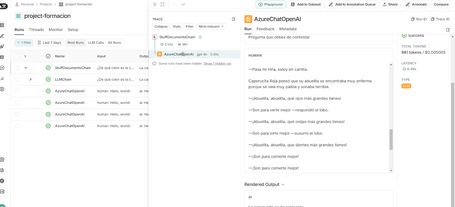

As in LangSmith, the traces are shown as a list, and each one can be opened for detailed inspection.

We have explored the main features of both platforms. If you want to learn more about how datasets are managed and how experiments and evaluations are conducted, we invite you to check out the second part of this series.
