The creation of Generative AI solutions is a booming tech trend. Driven by the rapid evolution of LLMs and the growing demand for smarter, automated interactions, we’re witnessing a proliferation of new platforms designed to simplify and accelerate the development of innovative GenAI solutions.

But what exactly are Generative AI solution platforms?

They are intuitive platforms (often low-code/no-code) that empower users to design, build, and deploy Generative AI solutions quickly—optimizing both time and cost.

How did these platforms emerge?

These innovative conversational Generative AI platforms didn’t emerge from a single origin but rather converged from various starting points:

- Evolution of traditional chatbot platforms:

Solutions originally built for deterministic chatbots (based on flows, rules, and keyword recognition) have integrated Generative AI capabilities to enhance interactions—enabling more natural and contextual responses, advanced semantic understanding of user intents, and dynamic decision-making in conversation management.

E.g. Google Cloud Dialogflow (especially its CX version with generative features) is a clear example of this transition, allowing the combination of structured flows with the flexibility of Generative AI.

- Expansion of large language model (LLM) platforms:

Environments initially focused on training, fine-tuning, and deploying Large Language Models (LLMs) are now adding orchestration layers, tools for managing conversational flows, integration with knowledge bases (RAG - Retrieval Augmented Generation), and the ability to connect LLMs with APIs and external tools (actions/functions)—enabling complete conversational applications built on LLM capabilities.

E.g. Azure AI Foundry (Microsoft) offers tools like Prompt Flow to design, evaluate, and deploy flows that integrate LLMs (from Azure OpenAI Service or others) with application data and logic.

- Abstraction and visualization over development frameworks:

Open-source development frameworks like LangChain have been crucial in standardizing and speeding up LLM app development by offering modular components and reusable chains. On top of these frameworks, visual interfaces and low-code platforms are emerging, abstracting the code complexity and allowing a broader audience to intuitively design and build GenAI workflows.

E.g. LangFlow (for LangChain) provides a drag-and-drop graphical interface to assemble LangChain components and build complex applications without coding every detail.

- Integration of AI agent capabilities into automation tools:

Process automation platforms (e.g. RPA or workflow automation tools) are embedding AI agents and Generative AI capabilities. This allows them not only to execute predefined tasks but also to understand natural language instructions, make complex decisions, interact conversationally with users, and perform autonomous goal-based actions.

E.g. n8n allows integration of Generative AI nodes into its workflows to create more intelligent and adaptive automations.
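
To make the idea of connecting an LLM to external tools (actions/functions) more concrete, here is a minimal Python sketch of what such a layer roughly does under the hood. It is illustrative only: the tool names (`get_order_status`, `open_ticket`), the JSON shape of the tool call, and the `dispatch` helper are all assumptions, and none of them reflect the API of any platform mentioned above.

```python
import json
from typing import Callable, Dict


# Hypothetical tools; platforms usually expose these as "actions" or "functions".
def get_order_status(order_id: str) -> str:
    return f"Order {order_id} is in transit."


def open_ticket(summary: str) -> str:
    return f"Ticket created: {summary}"


# Registry that maps the tool name the model may request to real code.
TOOLS: Dict[str, Callable[..., str]] = {
    "get_order_status": get_order_status,
    "open_ticket": open_ticket,
}


def dispatch(tool_call_json: str) -> str:
    """Run the tool named in a model-produced JSON request (assumed format)."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        # Never execute something the registry does not know about.
        return f"Unknown tool: {name}"
    return TOOLS[name](**call.get("arguments", {}))


# Stand-in for what an LLM might emit when it decides to call a tool.
model_output = '{"name": "get_order_status", "arguments": {"order_id": "A-1001"}}'
print(dispatch(model_output))
```

In a real solution the tool result would be sent back to the model so it can phrase the final answer. The platforms described here aim to provide this registry, the validation, and the round trip out of the box.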

Is this going to eliminate the need for programming?

We’ve seen platforms like these before in other areas, for example:

Data projects:

| Programming Languages or Frameworks | Platforms |
|---|---|
| SQL, PySpark, Apache Beam | Talend, Informatica PowerCenter, Azure Data Factory |

Machine Learning projects:

| Programming Languages or Frameworks | Platforms |
|---|---|
| Python, Scikit-learn, TensorFlow | Azure ML (Auto ML), DataRobot, H2O.ai |

Gen AI projects:

| Programming Languages or Frameworks | Platforms |
|---|---|
| Python, LangChain, LangGraph | Microsoft Copilot Studio, Amazon Bedrock Agents, LangFlow |

Development platforms streamline the creation of solutions but do not eliminate the need for programming languages and frameworks. While these platforms provide high-level tools for common tasks, programming remains essential for solutions requiring complex logic, advanced customization, or specific innovations. The two approaches are therefore complementary.

What types of platforms can I use?

- Solutions with vendor lock-in from major hyperscalers (Azure, AWS, and GCP).

These are platforms offered by major cloud providers (hyperscalers) that facilitate the creation of Generative AI solutions integrated into their ecosystems. For example, Copilot Studio (Microsoft) or Amazon Bedrock.

| Advantages | Disadvantages |
|---|---|
| Cost efficiency in development. | Vendor lock-in (difficulty migrating to other providers). |
| Strong investment/evolution (backed by large companies with significant R&D resources). | Learning curve for each vendor (each ecosystem has its own specifics). |
| Better integration with other services from the same provider. | Potential limitations in available features (dependent on the provider’s roadmap). |
| Lower initial technical requirements for development teams. | Costs can scale quickly with intensive use if not managed properly. |
| Scalability and reliability inherent to cloud platforms. | |
| Access to powerful foundation models optimized for their infrastructure. | |

- Solutions with vendor lock-in from Generative AI platform vendors.

These are proprietary platforms from companies specialized in conversational AI or enterprise solutions, offering specific tools. For example, Kore.ai XO or Cognigy.

| Advantages | Disadvantages |
|---|---|
| Cost efficiency in development. | Vendor lock-in (difficulty migrating to other providers). |
| Lower initial technical requirements for development teams. | Learning curve for each vendor (each ecosystem has its own specifics). |
| Specialization in specific niches or industries. | Potential limitations in available features (dependent on the provider’s roadmap). |
| Often highly polished interfaces focused on user experience for business roles. | Uncertainty about the provider's stability (especially with smaller or less established companies compared to hyperscalers). |
| | Costs can scale quickly with intensive use if not managed properly. |

- Open-source solutions.

These are open-source platforms that offer maximum flexibility and control for developing Generative AI solutions. For example, LangFlow.

| Advantages | Disadvantages |
|---|---|
| Cost-efficient development. | Learning curve of the solution. |
| Lower initial technical requirements for development teams. | No official support (or community-based support, which is not guaranteed). |
| No vendor lock-in. | Uncertainty about long-term sustainability and evolution (though popular projects often have strong traction). |
| Rapid evolution (driven by the global community). | Greater responsibility regarding security, maintenance, scalability, and infrastructure. |
| Flexibility to develop on your own (DIY). | Requires a more experienced technical team to manage the full lifecycle. |

Do They Offer Quantifiable Advantages?

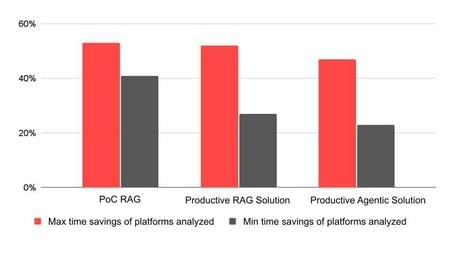

At Paradigma, we conducted an analysis to quantify the development time savings that can be achieved using various Generative AI platforms. The study focused on the following tools:

- Microsoft Copilot Studio

- Azure AI Foundry

- Amazon Bedrock

- Amazon Q

- Google Conversational Agents

- LangFlow

- n8n

Time savings estimates were calculated across three distinct project scenarios:

- RAG PoC (Proof of Concept with RAG):

  - Description: a solution using Retrieval Augmented Generation (RAG) to provide information based on a specific knowledge base.

  - Goal: validate the technical and operational feasibility of the solution, without the full requirements of a production system.

- Production-Ready RAG Solution:

  - Description: a fully-featured RAG solution ready for deployment and operation in a production environment.

  - Includes: features like continuous integration/continuous delivery (CI/CD), infrastructure as code (IaC), monitoring, versioning, and more.

- Production-Ready Agentic Solution:

  - Description: an AI agent-based solution capable of using various tools (e.g., querying knowledge bases, interacting with relational databases or systems like JIRA).

  - Includes: all necessary requirements and features for production deployment and maintenance.
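
For readers less familiar with RAG, the core loop behind the first two scenarios can be sketched in a few lines of Python. This is a toy illustration under clear assumptions: the `KNOWLEDGE_BASE` contents are invented, a bag-of-words cosine similarity stands in for a real embedding model and vector database, and the final call to an LLM is left out. It is not the setup used in the analysis.

```python
import math
import re
from collections import Counter
from typing import List

# Hypothetical knowledge base; a real project would index its own documents.
KNOWLEDGE_BASE = [
    "Vacation requests must be submitted two weeks in advance.",
    "Expense reports are reimbursed within thirty days.",
    "The VPN client is required for remote access to internal systems.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, k: int = 2) -> List[str]:
    """Retrieval step: return the k passages most similar to the question."""
    q = tokenize(question)
    ranked = sorted(
        KNOWLEDGE_BASE, key=lambda doc: cosine(q, tokenize(doc)), reverse=True
    )
    return ranked[:k]


def build_prompt(question: str, k: int = 2) -> str:
    """Augmentation step: ground the prompt in the retrieved passages."""
    context = "\n".join(f"- {passage}" for passage in retrieve(question, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# The generation step would send this prompt to an LLM of your choice.
print(build_prompt("How do I get remote access with the VPN?", k=1))
```

A PoC mostly needs these three steps to work. The production-ready scenarios add everything around them (ingestion pipelines, CI/CD, IaC, monitoring, versioning).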

The development time savings from using these Generative AI platforms vary depending on the specific tool and project complexity.

There is a significant reduction in development time across all three analyzed scenarios. Proportionally, simpler projects tend to benefit the most, as the core features of these platforms have a more direct impact. This trend can be summarized as follows (from highest to lowest relative savings): RAG PoC > Production-Ready RAG Solution > Production-Ready Agentic Solution.

Analyzing the specific impact of these platforms by task type, we observe the following:

Low impact:

- Initial documentation analysis and information gathering

- Requirements analysis and high-level conceptual design

- Project management, extensive documentation, and follow-up meetings

Moderate impact:

- Development of ingestion, transformation, and indexing pipelines

- Custom tool development and integration logic for agentic solutions

- Iterative experimentation and optimization (prompt tuning, LLM selection, ingestion settings)

- Custom UI (frontend) development

High impact:

- Deployment and configuration of the underlying infrastructure and DevOps automation (CI/CD, IaC)

- Development and orchestration of the core AI logic (e.g., RAG logic, reasoning chains of agents)

How to Choose the Right Platform?

The requirements for developing and deploying Generative AI use cases—both present and future—are diverse. When selecting a platform, it's crucial to evaluate essential and desirable capabilities to determine the best fit:

- LLM Instructions: clear, effective definition of instructions, context, and role for the LLM

- Multiple LLMs: support for choosing among proprietary, third-party, and ideally open-source models

- Prompt Engineering: tools for designing, testing, and efficiently refining prompts

- Knowledge Bases for RAG:

  - Internal ingestion: automation of document ingestion and indexing from repositories

  - External connection: integration with vector or graph databases

  - Multiple sources: use of multiple knowledge bases simultaneously

- Agents and Tools:

  - Definition: creation of agents and tools to interact with external systems and execute actions

  - Connectors: prebuilt connectors for common systems

  - Standards: OpenAPI and MCP support

- Flows: design and execution of task logic flows (e.g., conversational flows, process orchestration)

- Memory Management: ability to maintain context across conversations or processes

- Variables and Parameters: dynamic, personalized solutions via parameterized flows and prompts

- User Interface (Frontend):

  - Channels: native integration with channels like Teams, WhatsApp, etc.

  - Demos/PoCs: automatic generation of web interfaces for demonstration

  - Web embedding: snippets (e.g., iframes) to embed chat into existing websites

  - Advanced integration: APIs or SDKs for custom frontend development

- CI/CD Processes:

  - Multiple environments: support for DEV, TEST, and PROD environments

  - Automation: automated testing and promotion capabilities

  - External integration: compatibility with standard CI/CD tools

- IaC (Infrastructure as Code): configuration of the AI solution (agents, KBs) as code (e.g., Terraform, CloudFormation)

- Code & Prompt Versioning:

  - Change control: versioning of application logic and prompts

  - Integration: connection with Git and prompt versioning tools (e.g., Langfuse)

- Monitoring: ability to track usage, LLM performance, costs, and user satisfaction

- Guardrails: security filters to prevent harmful content and cyberattacks (e.g., prompt injection)

- Authentication & Security: authentication and permission management, including IdP and role-based access

Note: Generative AI platforms vary in maturity. Some are ready for deploying AI agents in production, while others are better suited for PoCs. Given their rapid evolution and frequent feature updates, any analysis is a temporary snapshot.

Other factors beyond features include:

- Vendor lock-in: risk and contractual flexibility

- Ease of use: intuitiveness for target users

- Speed of development and deployment: agility to iterate and launch solutions

- Total cost of ownership (TCO): licenses, infrastructure, usage

- Scalability: solution growth capability

- Additional integrations: enterprise ecosystem connectivity (e.g., CRM, ERP)

- Support and documentation: quality and availability of technical help and resources

- Governance: lifecycle control and compliance for deployed solutions
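
One simple way to turn the checklist above into a comparison is a weighted scoring matrix, where essential capabilities act as a pass/fail gate and desirable ones contribute to a score. The Python sketch below shows the idea. Everything in it is hypothetical: the platform names, the 0 to 5 ratings, the weights, and the choice of which capabilities count as essential are placeholders you would replace with your own assessment.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Essential capabilities: a rating of 0 on any of them disqualifies a platform.
MUST_HAVE = {"knowledge_bases", "guardrails"}

# Desirable capabilities and their relative importance (hypothetical weights).
WEIGHTS: Dict[str, int] = {
    "multiple_llms": 3,
    "ci_cd": 2,
    "monitoring": 2,
    "channels": 1,
}

MAX_RATING = 5


@dataclass
class Platform:
    name: str
    ratings: Dict[str, int]  # capability -> rating from 0 (absent) to 5


def evaluate(platform: Platform) -> Optional[float]:
    """Return a weighted score in [0, 1], or None if an essential is missing."""
    if any(platform.ratings.get(cap, 0) == 0 for cap in MUST_HAVE):
        return None
    total = sum(w * platform.ratings.get(cap, 0) for cap, w in WEIGHTS.items())
    return total / (MAX_RATING * sum(WEIGHTS.values()))


# Invented example data, not results from the analysis in this article.
platform_a = Platform(
    "Platform A",
    {"knowledge_bases": 4, "guardrails": 3, "multiple_llms": 5,
     "ci_cd": 2, "monitoring": 3, "channels": 4},
)
platform_b = Platform(
    "Platform B",
    {"knowledge_bases": 5, "guardrails": 0, "multiple_llms": 4,
     "ci_cd": 5, "monitoring": 5, "channels": 5},
)

for p in (platform_a, platform_b):
    score = evaluate(p)
    print(p.name, "disqualified" if score is None else f"{score:.2f}")
```

Separating the gate from the weighted score keeps a platform with many nice-to-have features from outranking one that actually meets the essential requirements. The non-feature factors listed above (TCO, lock-in, support) can be added as further weighted criteria in the same way.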

Ease, Speed, and Efficiency in Developing Generative AI Solutions

In short, Generative AI solution creation platforms have become fundamental tools for harnessing the immense potential of this technological revolution.

They democratize development, accelerate value delivery, and optimize resources, an advantage supported by the time-savings analysis above. The wide variety of available options allows organizations to efficiently tackle everything from agile PoCs to the implementation of robust production systems.

However, the selection of the right platform goes beyond analyzing its technical capabilities. It requires careful consideration of strategic factors such as the organization’s infrastructure, total cost of ownership, vendor dependency, and the quality of technical support.

In a rapidly evolving field like Generative AI, using platforms that accelerate value delivery can provide a decisive competitive edge, especially when combined with an agile team mindset and smart integration of these tools into existing business processes.
