Many things are changing in this new era of AI. Every day, we see new AI tools reaching new milestones, and yet we are still in a relatively early stage (although, if you think about it, it’s not that early — AI as a discipline dates back to the 1940s and 50s).

On the other hand, and as we’ve seen in recent years with Cloud platforms, some things may not change much in the world of development. That’s because the major AI platforms used by most people are still controlled by a handful of large multinational corporations. We can confirm this by looking at the AI investment breakdown from the top tech companies and the recent AI-related hires.

Naturally, this means we’ll be more or less dependent on what these companies decide in terms of tool features, data handling, privacy, and pricing. While this model will likely remain (and undoubtedly provides value), we now also have a more decentralized alternative: open AI tools and models.

Alongside the rise of major AI platforms, open development communities have emerged. These communities aim to make AI applications and resources more accessible, relying on open source, without being tied to any one company or technology stack.

In this series of posts, we’ll explore some of these more decentralized platforms and what they can offer — particularly for development teams.

Foundational Concepts

While this article has a hands-on focus, showing how to interact with LLMs in a straightforward way, a few key concepts help explain how certain configurations affect the behavior or accuracy of LLM responses.

Below, we explain a couple of high-level concepts that help shed light on how these models work under the hood.

How LLMs Work

LLMs (Large Language Models) are AI models built on neural networks and trained using self-supervised machine learning on massive datasets, allowing them to understand and generate natural language and other types of content to perform a wide range of tasks.

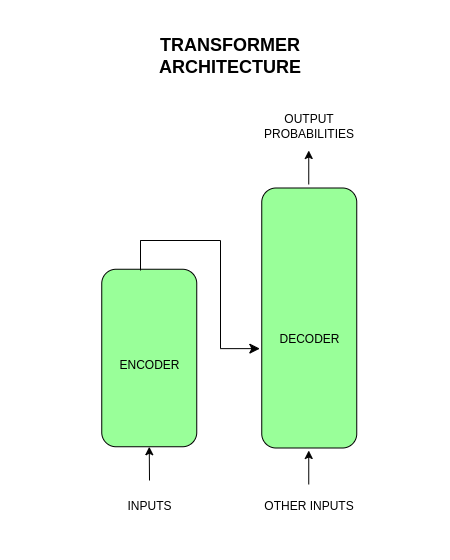

This gives us a general sense of how they can help us in our daily work. But… how do they really work internally? Many modern LLMs are based on the Transformer architecture, composed of neural networks trained as language models.

In the Transformer architecture, words (inputs) are converted into vector representations or embeddings which are then used, along with optional additional inputs, to generate probabilistic predictions (words) to accomplish the task at hand (text generation, translation, summarization, etc.).

The Transformer architecture consists of two main components:

- Encoder: Receives the input and creates a contextual representation (embedding). It’s designed to “understand” the input.

- Decoder: Uses the encoder's output and additional input to generate an output (typically probabilities). It's designed to “produce” output.

At a high level, this architecture relies on statistical knowledge of language, i.e., the probability of a word appearing in a given context. While originally developed for translation tasks, it can be adapted for other NLP (Natural Language Processing) tasks; many of today's generative LLMs, for example, use only the decoder part.

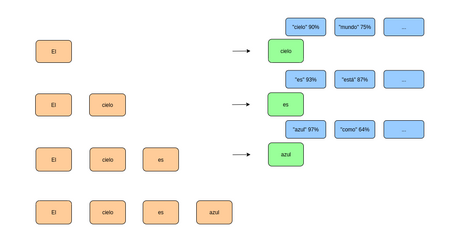

For example, to perform a text generation task, the Transformer architecture processes input words in parallel to predict the next word based on those inputs and their context.

Based on these probabilities, a word is selected and the process repeats — the newly generated word is added to the input. This cycle continues iteratively, with each generated word becoming part of the input, until the full text is generated. Naturally, during this iterative process, the output probabilities can vary depending on many tunable parameters in the LLM.
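The generation loop described above can be sketched in a few lines. This is only an illustration under strong assumptions: the `TOY_SCORES` table is a made-up stand-in for a real model (it looks only at the last word), and `temperature` is used as one example of a tunable parameter that changes the output probabilities.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy "model": fixed scores for the next word given only the last word.
TOY_SCORES = {
    "the": {"cat": 2.0, "dog": 1.5, "<end>": 0.1},
    "cat": {"sleeps": 2.5, "runs": 1.0, "<end>": 0.5},
    "dog": {"runs": 2.5, "sleeps": 1.0, "<end>": 0.5},
    "sleeps": {"<end>": 3.0},
    "runs": {"<end>": 3.0},
}

def generate(prompt, temperature=1.0, max_words=10, seed=0):
    """Repeatedly pick a next word and append it to the input until <end> is chosen."""
    rng = random.Random(seed)
    words = prompt.split()
    for _ in range(max_words):
        scores = TOY_SCORES.get(words[-1])
        if scores is None:
            break
        candidates = list(scores)
        probs = softmax([scores[w] for w in candidates], temperature)
        next_word = rng.choices(candidates, weights=probs, k=1)[0]
        if next_word == "<end>":
            break
        words.append(next_word)  # the generated word becomes part of the input
    return " ".join(words)
```

With a very low temperature, `generate("the", temperature=0.01)` always follows the highest-scoring word at each step; higher temperatures make less likely words show up more often.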

Quantization

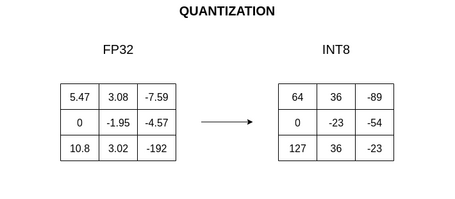

Quantization is the process of reducing the precision of a model’s weights, converting numbers from floating point format (e.g., 16 or 32 bits) to integers (e.g., 4 or 8 bits).

This precision reduction applies to the model's weights, including the embeddings (how words are represented in the Transformer architecture described above). There are several ways to implement it, but the goal is the same: reduce model file size and RAM usage, and often improve inference speed as well.

Ultimately, quantization enables the execution of larger models on the same hardware, though it comes at the cost of some loss in quality or accuracy — sometimes negligible.
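To make the trade-off concrete, here is a minimal sketch of one simple scheme (a single shared scale based on the largest absolute value). It is an assumption chosen for clarity, not the method used by any particular tool, and the weight values are made up.

```python
def quantize(weights, bits=8):
    """Map floats to signed integers using one shared scale (absmax scheme)."""
    qmax = 2 ** (bits - 1) - 1          # 127 for 8 bits, 7 for 4 bits
    absmax = max(abs(w) for w in weights)
    scale = absmax / qmax if absmax else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]

def max_error(weights, bits):
    """Largest absolute difference between original and restored weights."""
    quantized, scale = quantize(weights, bits)
    restored = dequantize(quantized, scale)
    return max(abs(a - b) for a, b in zip(weights, restored))

weights = [0.12, -0.53, 0.98, -0.07, 0.31]  # made-up example values
```

Comparing `max_error(weights, 8)` with `max_error(weights, 4)` shows the pattern described above: fewer bits means a smaller representation but a larger rounding error.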

Below is a sample comparison of quantized models (original table):

| Model | Metric | F16 | Q2_K | Q3_K_M | Q4_K_S |
|---|---|---|---|---|---|
| 7B | *perplexity | 5.9066 | 6.7764 | 6.1503 | 6.0215 |
| 7B | file size | 13.0G | 2.67G | 3.06G | 3.56G |
| 7B | ms/token @ 8 threads, M2 Max | 111 | 36 | 36 | 36 |
| 7B | ms/token @ 4 threads, Ryzen 7950X | 214 | 57 | 61 | 68 |
| 13B | *perplexity | 5.2543 | 5.8545 | 5.4498 | 5.3404 |
| 13B | file size | 25.0G | 5.13G | 5.88G | 6.80G |
| 13B | ms/token @ 8 threads, M2 Max | 213 | 67 | 77 | 68 |
| 13B | ms/token @ 4 threads, Ryzen 7950X | 414 | 109 | 118 | 130 |

* Perplexity refers to a metric used to evaluate the prediction performance of a model. The lower the value, the better the model.
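As a rough illustration of the metric, perplexity can be computed as the exponential of the average negative log-probability the model assigned to the tokens that actually occurred. The sketch below uses that standard definition; the helper names are hypothetical, and the second function simply compares two perplexity values such as those in the table.

```python
import math

def perplexity(token_probs):
    """exp of the average negative log-probability of the observed tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

def relative_increase(quantized_ppl, baseline_ppl):
    """Percentage by which a quantized model's perplexity exceeds the baseline."""
    return (quantized_ppl - baseline_ppl) / baseline_ppl * 100

# A model that gives every observed token probability 0.25 is as uncertain
# as a uniform choice among 4 options.
uniform_ppl = perplexity([0.25, 0.25, 0.25, 0.25])

# 7B values taken from the table above: Q4_K_S and Q2_K versus F16.
q4_loss = relative_increase(6.0215, 5.9066)
q2_loss = relative_increase(6.7764, 5.9066)
```

Here `q4_loss` comes out at just under 2% while `q2_loss` is close to 15%, which matches the intuition that more aggressive quantization costs more quality.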

Model quantization is widely used in scenarios with limited resources, such as mobile applications, autonomous vehicles, IoT devices, drones, and more.

The concepts mentioned above may be among the most relevant for basic interaction with LLM execution tools or the LLMs themselves, but there are many more to be aware of (tokens, prompts, embeddings, RAG...).

Benefits of Running LLMs Locally

Nowadays, chatbots based on LLMs/AI can be found in nearly every application or website to help us with daily tasks.

In general, interacting with these applications is straightforward and seems to pose no immediate security or privacy risk. Yet even when a platform offers the service for free, there is always an underlying cost (often a significant one).

While these factors may not concern users initially, they are critical for businesses, as they can lead to billing issues and security risks. In such cases, it is more appropriate to run LLMs on machines controlled by the user or organization, offering several benefits such as:

- Privacy and security: All data generated from interactions with LLMs remains on your machine: prompts, documents sent to the model, model responses, etc. Still, review each tool’s documentation carefully to determine whether it collects any data and of what kind. When nothing is collected, you keep full privacy and control over your data, which is especially useful in sensitive industries such as healthcare and finance.

- Cost savings and performance: Most LLM-based chat tools have request-based or subscription-based pricing. Aside from the hardware itself, running LLMs locally incurs no per-request or subscription costs. Furthermore, local execution removes the dependency on internet connectivity and network round trips, which can improve response latency and matters most in environments with slow or no internet access.

- Customization: Some local LLM execution tools allow you to tweak model behavior through parameters. There are also options to copy or import weights from other models, making it easy to personalize them. This flexibility has fueled the rise of a vibrant open-source community sharing models for various use cases.

Throughout this post series, we will explore some platforms/tools that allow us to run LLMs locally on our PCs in a simple and transparent way.

Ollama

Ollama is an application that allows users to run and interact with LLMs locally on their machine without needing a constant internet connection. You only need a connection to install Ollama itself and to download models to your machine; after that, interactions with the models work offline.

Ollama is built on top of the llama.cpp library, providing a wrapper layer that simplifies interaction and management of LLMs, abstracting many of the lower-level concepts for developers and users.

System Requirements

Before beginning installation, keep in mind the system requirements for running Ollama smoothly. These requirements primarily pertain to the models run within Ollama, as the Ollama software itself is lightweight. Key requirements include:

- RAM: Likely the most important resource. The RAM required depends on the model size:

  - 8GB: minimum recommended for small models (1B, 3B, 7B), though performance may suffer.

  - 16GB: recommended for smooth performance with 7B and 13B models.

  - 32GB or more: optimal for larger models (30B, 40B, 70B).

- GPU: Crucial for accelerating model performance by handling massive parallel computations. GPU acceleration options are available via drivers compatible with each OS and for Docker-based setups using NVIDIA or AMD.

- Disk space: While Ollama’s codebase is relatively small, model files take up significant space depending on their size. Estimates:

  - Small quantized models → 2GB required

  - Medium quantized models → 5GB required

  - Large quantized models → 40GB required

  - Very large models → 200GB required (some can reach 1.3TB!)

- CPU: Most modern processors should suffice, though a minimum of 4 cores is recommended, and 8+ cores is ideal.

- Operating system: Latest versions of major OSes (Windows, Linux, macOS) should be compatible, though some may require additional configuration.
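The RAM and disk figures above follow from a simple back-of-the-envelope rule: weight storage is roughly the number of parameters times the bits used per weight. The sketch below applies that rule; the function names and the 2 GB `reserve_gb` headroom are illustrative assumptions, and real quantized files are usually somewhat larger than the nominal bit width suggests.

```python
def estimate_size_gb(params_billions, bits_per_weight):
    """Approximate weight storage in decimal gigabytes: parameters x bits / 8."""
    return params_billions * bits_per_weight / 8

def fits_in_memory(size_gb, ram_gb, reserve_gb=2.0):
    """Rough check that leaves some headroom for the OS and runtime (assumed value)."""
    return size_gb + reserve_gb <= ram_gb

fp16_7b = estimate_size_gb(7, 16)  # 14.0 GB for a 7B model at 16 bits per weight
q4_7b = estimate_size_gb(7, 4)     # 3.5 GB for the same model at about 4 bits
```

Under these assumptions, `fits_in_memory(q4_7b, 8)` is true while `fits_in_memory(fp16_7b, 8)` is not, which is consistent with 8GB being the minimum for small quantized models.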

Installing Ollama

There are several ways to run Ollama on your system depending on your OS and needs:

- Install Ollama locally for Windows, Linux, or macOS.

- Use the Ollama Docker image.

In this post, we will install Ollama for Linux following the official documentation. Simply download Ollama using the command provided on their website:

curl -fsSL https://ollama.com/install.sh | sh

Once Ollama is installed on your system, it's helpful to understand certain aspects that will be useful for running it effectively:

Model Storage

Each operating system has a different folder path where Ollama stores downloaded models. It's important to be aware of this for managing disk space and making backups.

On Linux, with the default recommended installation, models are stored at the path: /usr/share/ollama/.ollama/models.

Environment Variables

Ollama allows customization via environment variables. Some of the most useful include:

- OLLAMA_HOST: Defines the network interface and port where the Ollama API server listens. The default value is 127.0.0.1:11434.

- OLLAMA_MODELS: Custom path to store downloaded models. Make sure the folder exists and has the necessary permissions for Ollama to download and read the models.

- OLLAMA_ORIGINS: Configures CORS for the Ollama API by listing (comma-separated) the origins allowed to make cross-origin requests. As usual, you can use "*" to allow all origins.

- OLLAMA_DEBUG: Set to 1 to enable debug mode.

- OLLAMA_KEEP_ALIVE: How long a model stays in memory after the last request, given as a number of seconds or as a duration string such as 10m. By default, it's 5 minutes. A value of 0 unloads the model immediately after use, while a negative value such as -1 keeps it loaded indefinitely.
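A client script can honor the same OLLAMA_HOST variable when talking to the API server. The sketch below is a minimal example using only the standard library. It assumes the `/api/generate` endpoint and its `model`, `prompt`, and `stream` fields as described in Ollama's API documentation (they are not covered in this article), and the helper names are hypothetical.

```python
import json
import os
import urllib.request

DEFAULT_HOST = "127.0.0.1:11434"  # default value of OLLAMA_HOST

def base_url(env=None):
    """Build the API base URL from OLLAMA_HOST, falling back to the default."""
    env = os.environ if env is None else env
    host = env.get("OLLAMA_HOST", "").strip() or DEFAULT_HOST
    if "://" not in host:
        host = "http://" + host
    return host.rstrip("/")

def build_generate_request(model, prompt, env=None):
    """Prepare (but do not send) a non-streaming generation request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        base_url(env) + "/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate(model, prompt, env=None):
    """Send the request; requires a running Ollama server with the model available."""
    with urllib.request.urlopen(build_generate_request(model, prompt, env)) as resp:
        return json.loads(resp.read())["response"]
```

Calling `generate("llama3.2:1b", "Why is the sky blue?")` would only work while the server is running and the model has been downloaded.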

Note that how you configure these variables depends on the installation method used for Ollama. In the case of the default installation on Linux, you’ll need to add a systemd override for the Ollama service by following these steps:

- Run the command:

sudo systemctl edit ollama.service

- Include the lines with the variables and their values in the configuration file:

[Service]

Environment="OLLAMA_MODELS=/custom-path"

Environment="OLLAMA_HOST=0.0.0.0:11434"

Environment="OLLAMA_DEBUG=1"

...

- Save the changes to the file. On Linux, systemctl edit stores the override as a drop-in file under /etc/systemd/system/ollama.service.d, leaving the original unit file untouched.

- Run the following command to apply the changes:

sudo systemctl daemon-reload

- Run the following command to restart the Ollama service:

sudo systemctl restart ollama
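If you manage several machines, the override content from the steps above can be generated rather than typed by hand. This is a small sketch with a hypothetical helper, `render_override`, that only produces the text of the `[Service]` section; writing it to disk and reloading systemd still follow the steps above.

```python
def render_override(variables):
    """Render a systemd drop-in that sets one Environment= line per variable."""
    lines = ["[Service]"]
    for name, value in variables.items():
        text = str(value)
        if '"' in text or "\n" in text:
            # Keep the sketch simple: refuse values that would need escaping.
            raise ValueError(f"unsupported character in value for {name}")
        lines.append(f'Environment="{name}={text}"')
    return "\n".join(lines) + "\n"

override_text = render_override({
    "OLLAMA_HOST": "0.0.0.0:11434",
    "OLLAMA_DEBUG": "1",
})
```

The resulting `override_text` has the same shape as the configuration lines shown in the steps above.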

Application Logs

The log location is specific to each operating system. On Linux (with Ollama running natively), you can view the logs with the following command (add the -f option to follow them in real time):

sudo journalctl -u ollama

This produces output like the following:

...

-- Reboot --

date&time user systemd[1]: Started Ollama Service.

date&time user ollama[2598]: ... routes.go:1230: INFO server config env="map[CUDA_VISIBLE_DEVICES: GPU_DEVICE_ORDINAL: HIP_VISIBLE_DEVICES: HSA_OVERRIDE_GFX_VERSION: HTTPS_PROXY: HTTP_PROXY: NO_PROXY: OLLAMA_CONTEXT_LENGTH:2048 OLLAMA_DEBUG:false OLLAMA_FLASH_ATTENTION:false OLLAMA_GPU_OVERHEAD:0 OLLAMA_HOST:http://127.0.0.1:11434 OLLAMA_INTEL_GPU:false OLLAMA_KEEP_ALIVE:5m0s OLLAMA_KV_CACHE_TYPE: OLLAMA_LLM_LIBRARY: OLLAMA_LOAD_TIMEOUT:5m0s OLLAMA_MAX_LOADED_MODELS:0 OLLAMA_MAX_QUEUE:512 OLLAMA_MODELS:/usr/share/ollama/.ollama/models OLLAMA_MULTIUSER_CACHE:false OLLAMA_NEW_ENGINE:false OLLAMA_NOHISTORY:false OLLAMA_NOPRUNE:false OLLAMA_NUM_PARALLEL:0 OLLAMA_ORIGINS:[http://localhost https://localhost http://localhost:* https://localhost:* ...] OLLAMA_SCHED_SPREAD:false ROCR_VISIBLE_DEVICES: http_proxy: https_proxy: no_proxy:]"

date&time user ollama[2598]: time=... level=INFO source=images.go:432 msg="total blobs: 25"

date&time user ollama[2598]: time=... level=INFO source=images.go:439 msg="total unused blobs removed: 0"

date&time user ollama[2598]: time=... level=INFO source=routes.go:1297 msg="Listening on 127.0.0.1:11434 (version 0.6.1)"

date&time user ollama[2598]: time=... level=INFO source=gpu.go:217 msg="looking for compatible GPUs"

date&time user ollama[2598]: time=... level=INFO source=gpu.go:377 msg="no compatible GPUs were discovered"

date&time user ollama[2598]: time=... level=INFO source=types.go:130 msg="inference compute" id=0 library=cpu variant="" compute="" driver=0.0 name="" total="... GiB" available="... GiB"

...

By adding the option -n 100 to the previous command, we can view the last 100 lines of the log. Using shell output redirection, we can also export the Ollama logs to a file with the following command:

sudo journalctl -u ollama.service > ollama_logs.txt
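Once the logs are in a file, the key=value pairs seen in the sample output are easy to parse. The sketch below is one possible approach: the helper names are hypothetical, and it assumes the log lines keep the `level=... source=... msg="..."` shape shown above.

```python
import re

# Matches key=value pairs where the value is either "quoted text" or a bare token.
PAIR = re.compile(r'(\w+)=("(?:[^"\\]|\\.)*"|\S+)')

def parse_line(line):
    """Extract the key=value fields of one log line into a dictionary."""
    fields = {}
    for key, value in PAIR.findall(line):
        if len(value) >= 2 and value.startswith('"') and value.endswith('"'):
            value = value[1:-1]  # drop the surrounding quotes
        fields[key] = value
    return fields

def gpu_missing(lines):
    """True if any line reports that no compatible GPU was found."""
    return any(
        parse_line(line).get("msg") == "no compatible GPUs were discovered"
        for line in lines
    )

# Example line modeled on the output above; timestamp and host are made up.
sample = ('Mar 01 10:00:00 host ollama[2598]: time=2025-03-01T10:00:00Z '
          'level=INFO source=gpu.go:377 msg="no compatible GPUs were discovered"')
```

For instance, `gpu_missing(open("ollama_logs.txt"))` would scan the exported file line by line.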

Permission Errors

On Linux, errors may occur during model creation or download if the user running Ollama doesn't have write permissions in the directory where models are stored. This is worth checking in particular after pointing OLLAMA_MODELS to a custom path.

History File

This file stores the history of conversations with Ollama. On Linux, it can be found at: ~/.ollama/history.

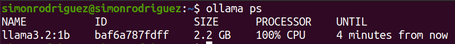

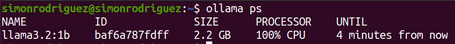

GPU Acceleration

When a model is running, the ollama ps command indicates, through its PROCESSOR column, whether GPU acceleration is being used. That column should show 100% GPU when the model is running entirely on the GPU. We'll explore the behavior of ollama ps in more detail in a later section.

You may also encounter errors such as CUDA error or ROCm error during execution. If so, make sure to check the GPU drivers and system configuration for compatibility.

Below is an example of running ollama ps where GPU acceleration is not active:

Basic Ollama Commands

Once Ollama is installed, you can interact with it and the associated models using the following commands.

PULL

This command is used to download available models from the Ollama website. It fetches the necessary files to run the model and updates them if newer versions are available. Here's an example command:

ollama pull llama3.2:1b

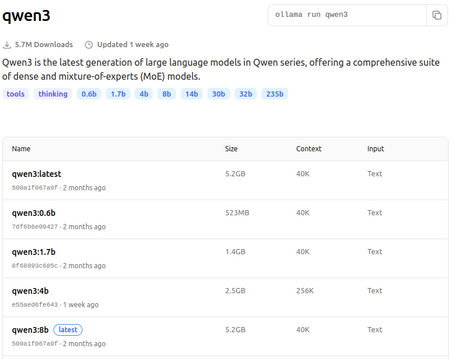

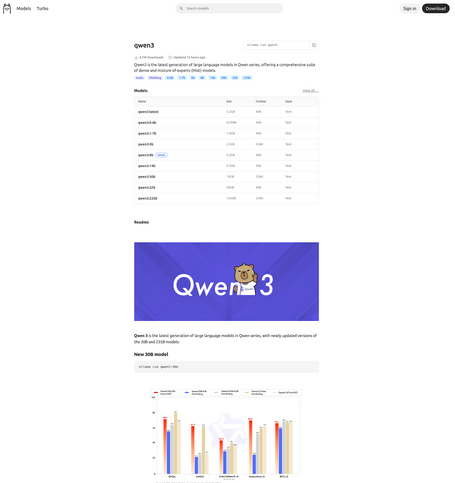

On the Ollama website, in the models section, you'll find various tags that let you filter models by topic:

Each model also displays multiple tags following a common structure:

- Size: indicates the number of parameters the model was trained with, which affects both its capabilities and resource requirements. Generally, the more parameters, the better the performance. Examples: 1b, 3b, 7b, 13b, 32b, 70b, etc.

- Quantization: as mentioned earlier, quantization reduces the model size to lower its resource usage, although at the cost of precision. Lower numbers (e.g., q4) mean more compression and thus less accuracy. Variants with K suffixes (K-quants: _K_S, _K_M, _K_L; S = small, M = medium, L = large) aim to balance size and accuracy. Values like fp16 or fp32 indicate minimal or no quantization, providing higher precision but requiring more resources. Common examples: q2_K, q4_K_M, q8_0, fp16, etc.

- Variants: models may be tagged with variants such as:

  - instruct: designed to follow instructions.

  - chat: optimized for conversational use.

  - code: tailored for coding tasks.

  - vision: built to handle images and multimodal input.

- Latest: this is the model downloaded by default when no tag is specified. Note that it doesn’t necessarily refer to the newest model released, but rather to a commonly used one that strikes a balance between performance and resource usage (usually a quantized medium-sized model).

It's worth noting that model tagging may evolve or new tags may appear. On each model’s page, you'll find relevant details about its characteristics and, in some cases, performance benchmarks.
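To make the tag structure tangible, the sketch below splits a model reference into the parts described above. It is a heuristic under stated assumptions: the regular expressions only cover the size and quantization examples listed in this section, and `example-model:7b-instruct-q4_K_M` is a hypothetical reference used for illustration.

```python
import re

SIZE = re.compile(r"^\d+(\.\d+)?[bm]$", re.IGNORECASE)          # e.g. 1b, 7b, 70b
QUANT = re.compile(r"^(q\d.*|fp16|fp32|f16|f32)$", re.IGNORECASE)  # e.g. q4_K_M, fp16

def parse_model_ref(ref):
    """Split 'name:tag' and classify each dash-separated part of the tag."""
    name, _, tag = ref.partition(":")
    tag = tag or "latest"  # no tag means the default 'latest' tag
    info = {"name": name, "tag": tag, "size": None,
            "quantization": None, "variants": []}
    for part in tag.split("-"):
        if SIZE.match(part):
            info["size"] = part
        elif QUANT.match(part):
            info["quantization"] = part
        elif part != "latest":
            info["variants"].append(part)
    return info

small = parse_model_ref("llama3.2:1b")
detailed = parse_model_ref("example-model:7b-instruct-q4_K_M")  # hypothetical reference
```

Because tagging conventions may evolve, treat the output as a hint and check the model's page for the authoritative details.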

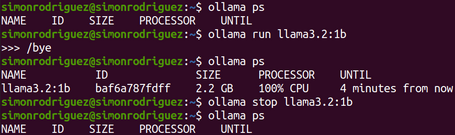

RUN

Command to run a model and interact with it:

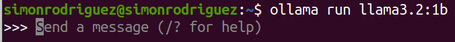

ollama run llama3.2:1b

If the model hasn't been downloaded beforehand, Ollama will download it. Once the model is fully loaded, an interactive prompt will appear where you can start sending requests to the model:

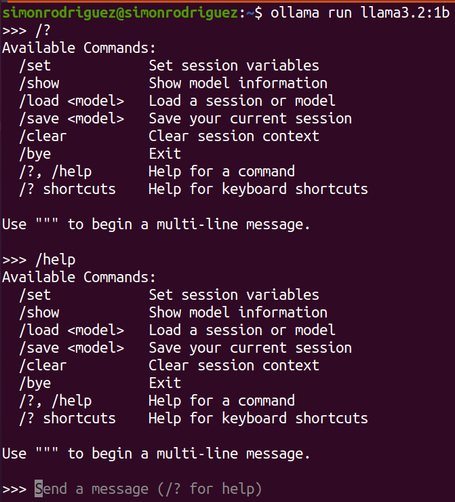

Within this interactive prompt, several special commands are available:

- /? or /help: displays the help menu with the rest of the available commands.

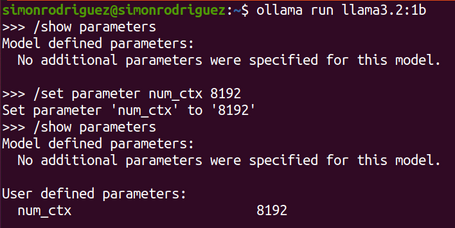

- /set parameter: sets a model parameter (for example, temperature) for the current session.

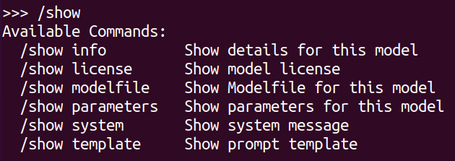

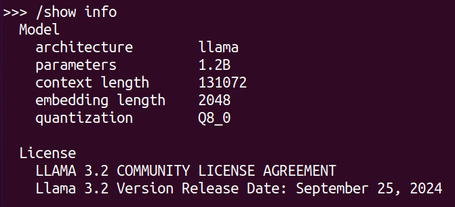

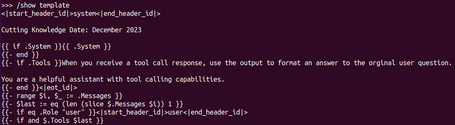

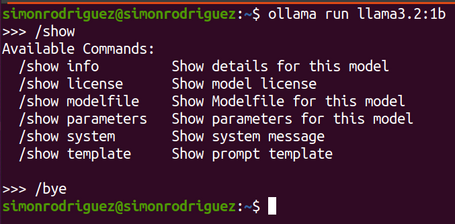

- /show: displays information about the loaded model such as parameters, model file content, template (instruction that defines the prompt format for interaction), or license.

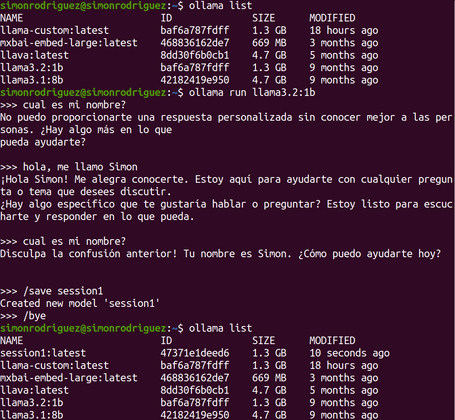

- /save: saves the chat history by creating a new model.

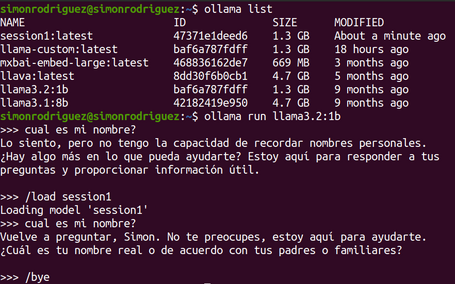

- /load: loads a previously saved session.

- /clear: clears the session.

- /bye or /exit: exits the interactive session.

Additionally, there are other variants of the run command, such as:

- Running a multimodal model by providing a prompt and an image path:

ollama run llava "What's in this image? ./multimodal.jpg"

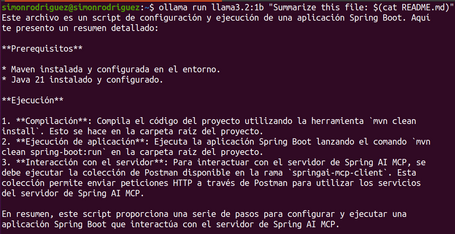

- Specify the prompt as an argument:

ollama run llama3.2:1b "Summarize this file: $(cat README.md)"

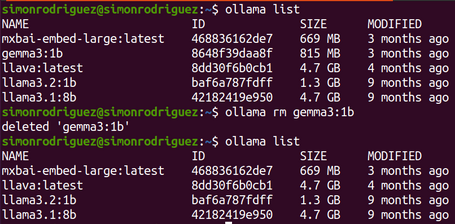

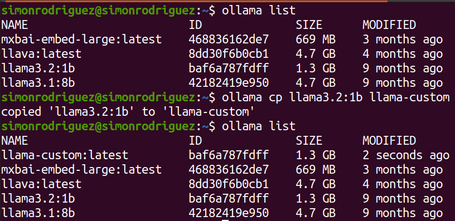

LIST

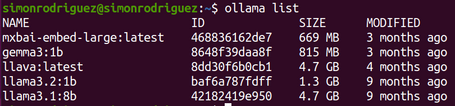

Command to list downloaded models:

ollama list

Displays information such as the model name, ID, size on disk, and last modified date:

SHOW

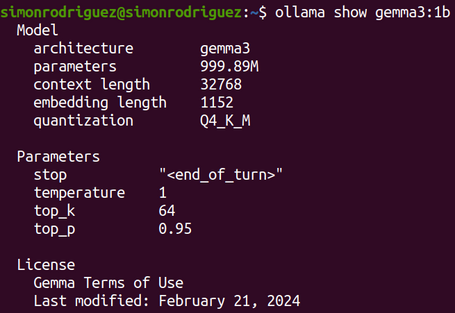

Command that displays detailed information about each model:

ollama show gemma3:1b

It will display information such as the parameter configuration, metadata, or template details.

RM

Command to remove a model from Ollama:

ollama rm gemma3:1b

CP

Command to copy an existing model, which can be used for model customization. In reality, the command does not copy the entire file but rather its reference. This means that if, for example, you copy a 5GB model, it will not create a new 5GB file — instead, it copies the manifest file that references the model:

ollama cp llama3.2:1b llama-custom
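The idea of copying a reference instead of the data can be illustrated with a toy content-addressed store. This is a simplified model for explanation only, not Ollama's actual on-disk format: the class, its methods, and the layer contents are all hypothetical.

```python
import hashlib

class ToyStore:
    """Blobs are stored once by digest; manifests only list digests."""

    def __init__(self):
        self.blobs = {}      # digest -> bytes
        self.manifests = {}  # model name -> list of digests

    def add_model(self, name, layers):
        digests = []
        for data in layers:
            digest = "sha256:" + hashlib.sha256(data).hexdigest()
            self.blobs.setdefault(digest, data)  # identical data is stored only once
            digests.append(digest)
        self.manifests[name] = digests

    def copy(self, source, target):
        # Only the small manifest is duplicated; the blobs are shared.
        self.manifests[target] = list(self.manifests[source])

    def blob_bytes(self):
        return sum(len(data) for data in self.blobs.values())

store = ToyStore()
store.add_model("llama3.2:1b", [b"weights" * 1000, b"template"])
size_before = store.blob_bytes()
store.copy("llama3.2:1b", "llama-custom")
size_after = store.blob_bytes()
```

After the copy, `size_after` equals `size_before`: two names now point at the same stored data.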

PS

Command to view which models are currently loaded into memory, also useful to check whether GPU acceleration is being used in Ollama:

ollama ps

Provides information about the model name, ID, size, processor used (CPU/GPU), and how long the model will remain loaded in memory.

STOP

Command to stop a running model:

ollama stop llama3.2:1b

PUSH

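Command to upload a created model to the Ollama registry. Publishing requires an account on the Ollama website, and the model name generally needs to include your username as a namespace, so check the registry's instructions before pushing.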

ollama push llama-custom

The Ollama registry is a centralized repository for storing models used by the tool itself, and where users can publish their own models. This registry offers several benefits, including:

- Distribution: allows publishing and sharing custom models with the rest of the user community, fostering collaboration and reuse.

- Availability: once published, the model is available for download using the ollama pull command, reducing the risk of losing it due to issues with your local machine.

- Versioning and control: enables the creation of tags to better manage and track the characteristics of each model.

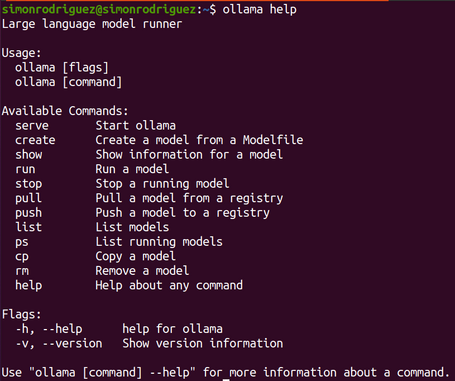

HELP

Command to learn how to use the rest of Ollama's commands.

ollama help

SERVE

Command to start Ollama. Useful in cases where Ollama is not running as a background system process.

ollama serve

How to choose the right model?

As we've seen in previous sections, several technical considerations come into play when selecting a model: the resources you have available (RAM, disk, CPU, etc.) and the specifics of each model (size, quantization, variants, etc.).

But beyond the technical side, models can also be selected based on tasks or use cases, following these recommendations:

- General use cases or chat: use case involving interaction through a chat or general information requests. Some models to consider: llama3.2, mistral, gemma3, qwen3. This category may also include models under the Thinking tag on Ollama’s website.

- Coding tasks: use case for code generation to build software applications. Suitable models include: codellama or deepseek-coder.

- Text summarization or analysis: tasks involving summarizing or interpreting text (sentiment analysis, classification, etc.). Models labeled with instruct often perform better here.

- Multimodal tasks with images: when working with multimedia such as images, video, or audio, you’ll need specific models that support it. You can filter by the Vision tag on the Ollama website to find them. Some available models include: llava, moondream, or bakllava.

Ultimately, it’s important to read the documentation and specifications of each model and run experiments to evaluate which one performs best for your specific use case and dataset.
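As a starting point for those experiments, the RAM guidance from the System Requirements section can be turned into a simple filter. The sketch below encodes those tiers; the function names are hypothetical, and the candidate list mixes a model used in this article with made-up entries.

```python
# (minimum RAM in GB, largest model size in billions of parameters),
# taken from the RAM guidance in the System Requirements section.
RAM_GUIDELINES = [
    (32, 70),
    (16, 13),
    (8, 7),
]

def max_params_for_ram(ram_gb):
    """Largest model size (in billions of parameters) suggested for this much RAM."""
    for min_ram, max_billions in RAM_GUIDELINES:
        if ram_gb >= min_ram:
            return max_billions
    return 0  # below the minimum recommended RAM

def shortlist(candidates, ram_gb):
    """Keep the candidates whose size fits the guideline for the available RAM."""
    limit = max_params_for_ram(ram_gb)
    return [name for name, size_billions in candidates if size_billions <= limit]

candidates = [
    ("llama3.2:1b", 1),
    ("example-model:13b", 13),  # hypothetical entry
    ("example-model:70b", 70),  # hypothetical entry
]
```

For example, `shortlist(candidates, 16)` keeps the 1B and 13B entries; the remaining choice between them still depends on the task and on your own evaluation.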

Conclusions

This introduction to running LLMs locally has covered some of the key concepts to keep in mind when working with these models, as well as how to choose the most suitable one for your use case.

Focusing on the practical side, we've seen how simple it is to interact with models via Ollama using its various commands.

In the next article, we’ll dive into more advanced Ollama options, including model customization.
