This is the sixth installment of our series on architecture patterns. If you missed any of the earlier posts, here they are:

- Microservices Architecture Patterns: What Are They and What Benefits Do They Offer?

- Architecture Patterns: Organization and Structure of Microservices.

- Architecture Patterns: Communication and Coordination of Microservices.

- Microservices Architecture Patterns: SAGA, API Gateway, and Service Discovery.

- Microservices Architecture Patterns: Event Sourcing and Event-Driven Architecture (EDA).

We are concluding the section on Communication and Coordination Between Microservices by exploring CQRS (Command Query Responsibility Segregation), BFF (Backend for Frontend), and Outbox.

The series does not end here: future posts will cover further patterns, including scalability and resource management with auto-scaling, migration, testing, and security.

Communication and Coordination Between Microservices

CQRS (Command Query Responsibility Segregation)

CQRS (Command Query Responsibility Segregation) is an architectural pattern that separates reads (queries) from writes (commands) in an application. Because the two sides are separate, each can be optimized independently, which can make the system more scalable, flexible, and maintainable.

Components of the CQRS Pattern:

- Command: Represents a write operation or modification of data in the system. Commands are responsible for making changes to the system state.

- Query: Represents a read operation or data retrieval from the system. Queries are responsible for retrieving data without modifying the system state.

- Read Model: A data model optimized for read and query operations. It may be specifically designed to meet the application’s query needs and may contain aggregated or precomputed data for better performance.

- Write Model: A data model optimized for write and modification operations. It may be structured differently from the read model and can be optimized for efficient write operations.

Features and Benefits of CQRS:

- Optimized Operations and Scalability: Separates read (query) and write (command) operations, allowing independent scalability of each as needed.

- Performance Improvement: Each side can use techniques suited to its workload, for example denormalized views, caches, or read replicas on the query side, which can improve response times for users.

- Rich Domain Modeling: CQRS enables a more expressive and robust domain model, as each data model is designed specifically for the operations it supports.

- Flexibility in Evolution: Each part of the system can evolve independently, making it easier to introduce changes without impacting other components.

- Security and Control: Commands and queries can be authorized separately, for example by allowing many users to read data while only a few may change it.

- Clearer Design: The separation of responsibilities improves system clarity and maintainability.

Challenges of CQRS:

- Implementation Complexity: Separating responsibilities can introduce additional complexity in system design and implementation, especially in distributed systems.

- Data Consistency: The read model is usually updated after the write model, so queries may briefly return stale data (eventual consistency). Keeping the two models consistent is harder still in distributed systems where data is replicated across multiple locations.

- User Interface Coherence: Splitting read and write operations may require synchronizing the user interface to ensure a consistent user experience, adding development complexity.

- Component Communication: Communication between read and write components can be complex and may require messaging patterns for effective and reliable communication.

- Transaction Management: Handling transactions can be more complex in a CQRS system due to the separation of data models and operations.

- Increased Overhead: Managing and synchronizing separate data models can introduce overhead, affecting system performance in some cases.

In summary, the CQRS pattern is a valuable technique for improving performance, scalability, and flexibility by separating read and write operations. However, it also introduces challenges in terms of complexity and data consistency that must be carefully considered before implementation.

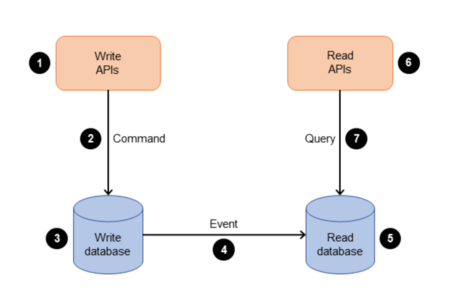

The diagram illustrates the following processes:

- A company interacts with the application by sending commands through an API. Commands include actions like creating, updating, or deleting data.

- The application processes the incoming command in the command layer. This involves validating, authorizing, and executing the operation.

- The application stores the command data in the write database.

- Once the command is stored in the write database, events are triggered to update the read database.

- The read database processes and stores the data. Read databases are designed to be optimized for specific query requirements.

- The company interacts with the read APIs to send queries to the read side of the application.

- The application processes the incoming query in the read layer and retrieves the data from the read database.

Here is a code example:

Let's assume we have a product management application where users can add new products and also query the list of available products.

First, define a command to add a new product:

public class AddProductCommand {

private String name;

private double price;

// Constructor, getters, and setters

}

Then, create a controller to handle write commands:

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.web.bind.annotation.PostMapping;

import org.springframework.web.bind.annotation.RequestBody;

import org.springframework.web.bind.annotation.RestController;

@RestController

public class ProductCommandController {

private final ProductCommandService productCommandService;

@Autowired

public ProductCommandController(ProductCommandService productCommandService) {

this.productCommandService = productCommandService;

}

@PostMapping("/products")

public void addProduct(@RequestBody AddProductCommand command) {

productCommandService.addProduct(command);

}

}

The command service handles command logic:

import org.springframework.stereotype.Service;

@Service

public class ProductCommandService {

public void addProduct(AddProductCommand command) {

// Logic to add a new product

System.out.println("New product added: " + command.getName());

// The write operation in the database would be performed here

}

}

To handle queries, define a DTO (Data Transfer Object) for product information:

public class ProductDTO {

private String name;

private double price;

// Constructor, getters, and setters

}

Create a controller to handle queries:

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RestController;

import java.util.List;

@RestController

public class ProductQueryController {

private final ProductQueryService productQueryService;

@Autowired

public ProductQueryController(ProductQueryService productQueryService) {

this.productQueryService = productQueryService;

}

@GetMapping("/products")

public List<ProductDTO> getAllProducts() {

return productQueryService.getAllProducts();

}

}

This example demonstrates how CQRS separates write (command) operations from read (query) operations, allowing each to be optimized and scaled independently. ProductQueryService is omitted here; it would return ProductDTO objects from the read model.
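The controllers above do not show how a stored command ends up in the read model. As a minimal sketch of that step, assuming plain Java without Spring and in-memory lists in place of the two databases, the write side below emits an event that the read side turns into a precomputed view. The names `StoredProduct`, `ProductAdded`, `ProductView`, `ProductWriteSide`, and `ProductReadSide` are hypothetical and not part of the example above.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Locale;
import java.util.function.Consumer;

// Hypothetical write-model row and the event announcing that it was stored.
record StoredProduct(long id, String name, double price) {}
record ProductAdded(StoredProduct product) {}

// Hypothetical read-model row, shaped for a product list screen.
record ProductView(String name, String formattedPrice) {}

// Write side: validates the command, stores the product, then emits an event.
class ProductWriteSide {
    private final List<StoredProduct> writeStore = new ArrayList<>(); // stands in for the write database
    private final List<Consumer<ProductAdded>> subscribers = new ArrayList<>();
    private long nextId = 1;

    void subscribe(Consumer<ProductAdded> subscriber) {
        subscribers.add(subscriber);
    }

    long addProduct(String name, double price) {
        if (name == null || name.isBlank() || price < 0) {
            throw new IllegalArgumentException("invalid product");
        }
        StoredProduct product = new StoredProduct(nextId++, name, price);
        writeStore.add(product);
        ProductAdded event = new ProductAdded(product);
        // Synchronous call for brevity; a real system would typically publish asynchronously.
        subscribers.forEach(s -> s.accept(event));
        return product.id();
    }
}

// Read side: keeps a precomputed list so queries never touch the write store.
class ProductReadSide {
    private final List<ProductView> readStore = new ArrayList<>(); // stands in for the read database

    void on(ProductAdded event) {
        StoredProduct p = event.product();
        readStore.add(new ProductView(p.name(), String.format(Locale.ROOT, "%.2f EUR", p.price())));
    }

    List<ProductView> getAllProducts() {
        return Collections.unmodifiableList(readStore);
    }
}

class CqrsSketchDemo {
    public static void main(String[] args) {
        ProductWriteSide write = new ProductWriteSide();
        ProductReadSide read = new ProductReadSide();
        write.subscribe(read::on);

        write.addProduct("Keyboard", 49.9);
        System.out.println(read.getAllProducts());
    }
}
```

Because the read side only reacts to events, it could be moved to its own service and database without changing the write side. With asynchronous delivery, a query issued right after a command may not yet see the new product.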

BFF (Backend for Frontend)

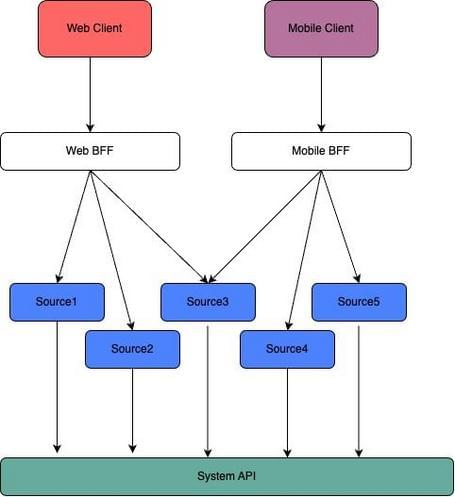

The Backend for Frontend (BFF) pattern is an architectural approach that suggests creating specialized backends for specific frontend applications. Instead of having a single backend that serves all frontend clients' needs, the BFF pattern recommends creating multiple specialized backends, each designed to meet the specific needs of a frontend client or user interface.

Characteristics of the BFF Pattern:

- Specialized Backends: Each frontend client has its own specialized backend designed to meet its specific data and functionality requirements.

- Decoupling: Enables decoupling between frontend and backend, as each frontend interacts with a dedicated backend that provides the required functionality.

- Optimized User Experience: Allows optimizing the user experience by providing backends specifically tailored to each frontend client’s needs and requirements.

- Security and Privacy: Enables the implementation of security and privacy policies specific to each frontend client since each backend can independently manage authorization and authentication.

Components of the BFF Pattern:

- Backend for Frontend (BFF): A specialized backend designed to serve a specific frontend client, providing the necessary services and data.

- Frontend Client: The user interface that interacts with the corresponding backend. This can be a web application, a mobile app, or another type of client.

Advantages of the BFF Pattern:

- Optimized User Experience: Provides specialized backends that cater to the specific needs of each frontend client.

- Decoupling: Makes it easier to separate frontend and backend concerns by allowing each frontend client to interact with a dedicated backend.

- Security and Privacy: Facilitates the implementation of security policies tailored to each frontend client, as each backend can independently manage authorization and authentication.

- Scalability: Enhances scalability by enabling independent scaling of backends based on the needs of each frontend client.

Challenges of the BFF Pattern:

- Additional Complexity: Having multiple backends adds complexity in terms of design, implementation, and maintenance.

- Data Consistency: Managing data consistency across different backends can be challenging, requiring synchronization mechanisms.

- Development Overhead: Increases development workload since multiple specialized backends need to be created and maintained.

In summary, the BFF pattern is a useful technique to optimize the user experience by providing specialized backends tailored to each frontend client’s requirements. However, it also introduces additional complexity and overhead that must be carefully considered.

Example: Implementing BFF in Java

To illustrate the BFF pattern in Java, let's consider a scenario where we have a web application and a mobile application that share common functionalities but also have specific requirements.

We will use Spring Boot to implement specialized backends for each frontend client.

Creating a Backend for the Web Application (Web BFF):

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RestController;

@RestController

public class WebBFFController {

@GetMapping("/web/data")

public String getWebData() {

// Logic to retrieve web-specific data

return "Data for the web application";

}

}

Creating a Backend for the Mobile Application (Mobile BFF):

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RestController;

@RestController

public class MobileBFFController {

@GetMapping("/mobile/data")

public String getMobileData() {

// Logic to retrieve mobile-specific data

return "Data for the mobile application";

}

}

Each backend is specialized to serve the specific needs of its corresponding frontend client. The web application calls the "/web/data" endpoint to obtain its data, while the mobile application calls the "/mobile/data" endpoint to retrieve its own.

With this configuration, each frontend client has its own specialized backend that provides the necessary services and data for its respective user interface, ensuring a more optimized user experience and maintaining clear separation between frontend and backend.
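The two controllers return fixed strings, so they do not show what "specialized" means in practice. The following is a minimal sketch in plain Java (no Spring), assuming both BFFs call the same downstream service and differ only in how they shape the response. `CatalogService`, `CatalogItem`, `WebItem`, `MobileItem`, `WebBff`, and `MobileBff` are hypothetical names introduced for illustration.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical item as returned by a shared downstream microservice.
record CatalogItem(long id, String name, String description, double price, List<String> imageUrls) {}

// Hypothetical client for that downstream service.
interface CatalogService {
    List<CatalogItem> findAll();
}

// The web client has room for the full description and all images.
record WebItem(long id, String name, String description, double price, List<String> imageUrls) {}

// The mobile client gets a smaller payload: no description, one thumbnail.
record MobileItem(long id, String name, double price, String thumbnailUrl) {}

class WebBff {
    private final CatalogService catalog;

    WebBff(CatalogService catalog) {
        this.catalog = catalog;
    }

    List<WebItem> getWebData() {
        return catalog.findAll().stream()
                .map(i -> new WebItem(i.id(), i.name(), i.description(), i.price(), i.imageUrls()))
                .collect(Collectors.toList());
    }
}

class MobileBff {
    private final CatalogService catalog;

    MobileBff(CatalogService catalog) {
        this.catalog = catalog;
    }

    List<MobileItem> getMobileData() {
        return catalog.findAll().stream()
                .map(i -> new MobileItem(i.id(), i.name(), i.price(),
                        // Use the first image as thumbnail, or null when there is none.
                        i.imageUrls().isEmpty() ? null : i.imageUrls().get(0)))
                .collect(Collectors.toList());
    }
}
```

In a Spring Boot application, `getWebData` and `getMobileData` would be the bodies of the "/web/data" and "/mobile/data" handlers. Keeping the shaping logic in the BFF means the downstream service does not need to know which client is calling.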

Outbox

The Outbox pattern is a technique used in distributed architectures to ensure consistency between changes in a local database and the publication of events to a messaging system such as Kafka, RabbitMQ, or similar.

This pattern is particularly useful in situations where atomicity between database writes and event publication must be ensured, such as in event-driven systems or microservices architectures.

Components of the Outbox Pattern:

- Local Database: Where write operations generating events take place. This can be any relational or NoSQL database.

- Outbox: A table or collection in the local database where events pending publication are recorded. Each row or document in the outbox represents an event awaiting processing.

- Event Publishing Process: A process that periodically scans the outbox for pending events and publishes them to a messaging system.

Advantages of the Outbox Pattern:

- Atomicity: The business data and the outbox record are written in the same local database transaction, so either both are saved or neither is. Publication happens afterwards, so every committed change eventually produces its event.

- Consistency: Guarantees that published events accurately reflect changes made to the database, maintaining consistency between data and generated events.

- Durability: Pending events are stored persistently in the outbox until they are published, so they are not lost if the service or the messaging system fails.

- Decoupling: Separates business logic from event generation, as database write operations are not directly tied to event publication.

Challenges of the Outbox Pattern:

- Implementation Complexity: Requires careful implementation to ensure atomicity between database writes and event publication, introducing additional complexity.

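- Event Synchronization: Published events must remain synchronized with database changes to avoid inconsistencies. If the publishing process fails after sending an event but before marking it as processed, the event is sent again, so consumers should tolerate duplicates.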

- Scalability: The event publishing process must be scalable to handle large volumes of pending events.

In summary, the Outbox pattern is an effective technique for ensuring consistency and atomicity between local database changes and related event publication.

Although it adds implementation complexity, it provides a robust solution for event-driven systems or microservices architectures.
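To make the atomicity claim concrete, here is a minimal sketch in plain Java, assuming a toy in-memory database whose two "tables" are committed together. It is not how a real database implements transactions; `ToyDatabase`, `OutboxRow`, and `OrderService` are hypothetical names used only for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical outbox record: one row per event awaiting publication.
record OutboxRow(long id, String topic, String payload) {}

// Toy in-memory database: the orders table and the outbox table commit together or not at all.
class ToyDatabase {
    private List<String> orders = new ArrayList<>();
    private List<OutboxRow> outbox = new ArrayList<>();

    // Working copies that the caller modifies inside a transaction.
    static final class Tx {
        final List<String> orders;
        final List<OutboxRow> outbox;

        Tx(List<String> orders, List<OutboxRow> outbox) {
            this.orders = orders;
            this.outbox = outbox;
        }
    }

    void inTransaction(Consumer<Tx> work) {
        Tx tx = new Tx(new ArrayList<>(orders), new ArrayList<>(outbox));
        work.accept(tx);     // an exception here leaves the committed state untouched
        orders = tx.orders;  // commit: both tables change together
        outbox = tx.outbox;
    }

    List<String> orders() {
        return List.copyOf(orders);
    }

    List<OutboxRow> outbox() {
        return List.copyOf(outbox);
    }
}

class OrderService {
    private final ToyDatabase db;
    private long nextEventId = 1;

    OrderService(ToyDatabase db) {
        this.db = db;
    }

    void placeOrder(String orderId, int quantity) {
        db.inTransaction(tx -> {
            tx.orders.add(orderId); // business write
            if (quantity <= 0) {
                throw new IllegalArgumentException("quantity must be positive");
            }
            // Event record written in the same transaction as the business write.
            tx.outbox.add(new OutboxRow(nextEventId++, "purchaseEvent", orderId + ":" + quantity));
        });
    }
}
```

A failed `placeOrder` call leaves neither an order nor an outbox row behind, which is the guarantee the pattern relies on. Nothing is sent to the messaging system at this point; that is the job of the separate publishing process.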

Example: Implementing the Outbox Pattern in Java with Kafka

Here’s a simplified example of how to implement the Outbox pattern in Java using Spring Boot and Kafka for processing a purchase event in an e-commerce system.

Defining an Entity for Purchase Events in the Outbox:

import javax.persistence.Entity;

import javax.persistence.GeneratedValue;

import javax.persistence.GenerationType;

import javax.persistence.Id;

@Entity

public class PurchaseEvent {

@Id

@GeneratedValue(strategy = GenerationType.IDENTITY)

private Long id;

private Long userId;

private Long productId;

private int quantity;

public PurchaseEvent() {}

public PurchaseEvent(Long userId, Long productId, int quantity) {

this.userId = userId;

this.productId = productId;

this.quantity = quantity;

}

// Getters and Setters

}

Creating a Service to Handle Purchase Event Persistence:

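import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Service;

import org.springframework.transaction.annotation.Transactional;

@Service

public class PurchaseEventService {

// PurchaseEventRepository is assumed to be a Spring Data repository for PurchaseEvent (not shown)

@Autowired

private PurchaseEventRepository purchaseEventRepository;

// Runs in one database transaction so the business write and the outbox row commit together

@Transactional

public void addPurchaseEventToOutbox(Long userId, Long productId, int quantity) {

// The purchase itself would be saved here, inside the same transaction

PurchaseEvent event = new PurchaseEvent(userId, productId, quantity);

purchaseEventRepository.save(event);

}

}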

Processing and Publishing Events from the Outbox to Kafka:

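import java.util.List;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.scheduling.annotation.Scheduled;

import org.springframework.stereotype.Component;

@Component

public class PurchaseEventPublisher {

@Autowired

private PurchaseEventRepository purchaseEventRepository;

// Assumed to be an application-defined wrapper around a Kafka client that exposes send(topic, payload)

@Autowired

private KafkaProducer kafkaProducer;

// Runs again 1 second after the previous run finishes; scheduling must be enabled with @EnableScheduling

@Scheduled(fixedDelay = 1000)

public void publishPendingPurchaseEvents() {

List<PurchaseEvent> pendingEvents = purchaseEventRepository.findAll();

for (PurchaseEvent event : pendingEvents) {

// Assumes PurchaseEvent.toString() is overridden to return a serialized form such as JSON

kafkaProducer.send("purchaseEvent", event.toString()); // Send event to Kafka

// Delete only after the send; a crash between these two calls causes a resend on the next run

purchaseEventRepository.delete(event);

}

}

}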

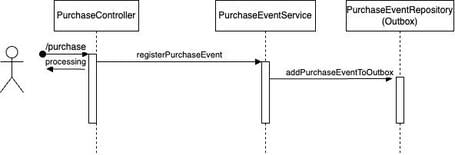

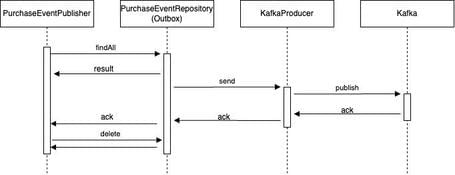

In this example, when a POST request is made to "/purchase" with the purchase details, a purchase event is recorded in the outbox. The PurchaseController that handles this request is not shown above.

Then, the PurchaseEventPublisher component periodically scans the outbox and sends purchase events to Kafka for further processing.

- The client makes a POST request to "/purchase" with the purchase details (userId, productId, quantity).

- The PurchaseController receives the request and calls the addPurchaseEventToOutbox method from the PurchaseEventService.

- PurchaseEventService creates a new PurchaseEvent object with the purchase details and stores it in the outbox via the PurchaseEventRepository.

- The PurchaseEventPublisher component periodically scans the outbox for pending events.

- PurchaseEventPublisher finds the pending purchase event and sends it to Kafka using KafkaProducer.

- Kafka receives the purchase event.

- Then, interested consumers retrieve it for further processing.
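The last steps above can fail halfway: the event may reach the broker while the publisher fails before removing it from the outbox, in which case it is sent again. The sketch below, in plain Java with no Kafka dependency, shows one way to handle this under the assumption that each event carries a unique id. `PendingEvent`, `Broker`, `OutboxRelay`, and `IdempotentConsumer` are hypothetical names, not Kafka or Spring APIs.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical outbox entry with a unique id.
record PendingEvent(long id, String payload) {}

// Hypothetical broker abstraction; a real one would wrap a Kafka client.
interface Broker {
    void send(PendingEvent event);
}

class OutboxRelay {
    private final Deque<PendingEvent> outbox = new ArrayDeque<>(); // stands in for the outbox table
    private final Broker broker;

    OutboxRelay(Broker broker) {
        this.broker = broker;
    }

    void enqueue(PendingEvent event) {
        outbox.addLast(event);
    }

    int pending() {
        return outbox.size();
    }

    // One polling pass: send first, remove only after the send returned normally.
    void publishPending() {
        while (!outbox.isEmpty()) {
            PendingEvent next = outbox.peekFirst();
            broker.send(next);    // may throw; the event then stays in the outbox
            outbox.removeFirst(); // reached only after a successful send
        }
    }
}

// Consumer that ignores events it has already processed.
class IdempotentConsumer {
    private final Set<Long> seenIds = new HashSet<>();
    private final List<String> processed = new ArrayList<>();

    void handle(PendingEvent event) {
        if (seenIds.add(event.id())) { // add returns false for an id seen before
            processed.add(event.payload());
        }
    }

    List<String> processed() {
        return List.copyOf(processed);
    }
}
```

Sending before removing gives at-least-once delivery: an event is never lost, but it can arrive twice. Deduplicating by id on the consumer side is one common way to make the duplicates harmless.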

Conclusion

In summary, effective communication and proper coordination between microservices are essential for success, though they are not enough on their own.

By understanding the different approaches and tools available, organizations can build more flexible, scalable, and robust systems that can adapt to changing market and business demands.

This concludes our discussion on microservices communication and coordination patterns. Stay tuned as we continue exploring more architectural patterns in upcoming posts.
