We continue this series of posts comparing the two leading platforms in LLM engineering: Langfuse and LangSmith. If you’d like to learn more about the motivation behind this series, how the platforms are configured, and what steps are required to version prompts and trace LLM calls, make sure to check out the first post.

Datasets

Defining datasets is a key step in managing the lifecycle of your prompts. Datasets are collections of inputs and their corresponding expected outputs from the LLM, used to evaluate the application or specific components of it (such as prompts, the LLM itself, or the database).

It’s important to note that creating a dataset requires ground truths (the correct answers to the questions you want the LLM to handle), which serve as a benchmark against which the model’s actual responses are compared. In both LangSmith and Langfuse, datasets can be built iteratively, selectively incorporating real-world cases from production. They can be created either through the graphical interface or programmatically.

LangSmith

Creating a dataset in LangSmith is as straightforward as using the .create_dataset() method with the desired name. To add examples, you then use the .create_examples() method and fill in the necessary parameters:

- inputs: A list of dictionaries representing the input that the prediction function (the function that calls the LLM) will receive and on which the generation is based. In the following example, we assume the input is the ‘question.’ The function will also use dynamic inputs such as retrieved chunks, but these are expected to be inserted directly inside the prediction function.

- outputs: A list of dictionaries, the same length as the inputs list, containing the ground truths (expected answers). In our example, each one holds the expected answer to the corresponding question. An output can also contain several fields, for example when evaluating information extraction.
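As a small illustration of this shape, the sketch below builds the two parallel lists from (question, answer) pairs. The helper build_examples is hypothetical, and the "question"/"answer" keys simply follow this post's example rather than anything LangSmith requires. The resulting lists could then be passed as inputs= and outputs= to client.create_examples().

```python
def build_examples(pairs):
    """Split (question, answer) pairs into parallel inputs/outputs lists.

    Hypothetical helper: inputs[i] is an example's input and outputs[i]
    is its ground truth, so both lists always have the same length.
    """
    inputs = [{"question": question} for question, _ in pairs]
    outputs = [{"answer": answer} for _, answer in pairs]
    return inputs, outputs


inputs, outputs = build_examples([
    ("What color was Little Red Riding Hood?", "Little Red Riding Hood was red."),
    ("What does the wolf want?", "The wolf wants to eat Little Red Riding Hood."),
])
print(inputs[1])   # {'question': 'What does the wolf want?'}
print(outputs[1])  # {'answer': 'The wolf wants to eat Little Red Riding Hood.'}
```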

```python
from langsmith import Client

# Assumes the LangSmith API key is configured in the environment (see the first post)
client = Client()

dataset_name = "Dataset1"
dataset = client.create_dataset(dataset_name)

client.create_examples(
    inputs=[
        {"question": "What color was Little Red Riding Hood?"},
        {"question": "What does the wolf want?"},
    ],
    outputs=[
        {"answer": "Little Red Riding Hood was red."},
        {"answer": "The wolf wants to eat Little Red Riding Hood."},
    ],
    dataset_id=dataset.id,
)
```

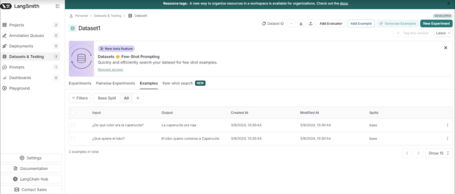

In LangSmith, a dataset is displayed as follows:

Langfuse

In Langfuse, there is also a .create_dataset() method:

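```python
from langfuse import Langfuse

# Assumes the Langfuse keys and host are configured in the environment (see the first post)
langfuse = Langfuse()

langfuse.create_dataset(
    name="simple_dataset",
    description="Test dataset",
    # Optional metadata
    metadata={
        "author": "Miguel",
        "date": "2024-08-06",
        "type": "tutorial",
    },
)
```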

However, items must be added to the dataset one at a time:

```python
langfuse.create_dataset_item(
    dataset_name="simple_dataset",
    input={"text": "What color was Little Red Riding Hood?"},
    expected_output={"text": "Little Red Riding Hood was red"},
)

langfuse.create_dataset_item(
    dataset_name="simple_dataset",
    input={"text": "What does the wolf want?"},
    expected_output={"text": "The wolf wants to eat Little Red Riding Hood"},
)
```
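Since each call adds a single item, a longer dataset is easier to maintain as a list of pairs plus a loop. The sketch below is one way to do that, assuming the create_dataset_item() keyword arguments shown above. add_items is a hypothetical helper that takes the item-creation function as a parameter, so it can be exercised offline without a Langfuse connection.

```python
def add_items(create_item, dataset_name, pairs):
    """Add one dataset item per (question, answer) pair.

    Hypothetical helper: `create_item` is expected to behave like
    langfuse.create_dataset_item (pass the real method in practice).
    """
    for question, answer in pairs:
        create_item(
            dataset_name=dataset_name,
            input={"text": question},
            expected_output={"text": answer},
        )
    return len(pairs)


# Offline check: record the calls instead of sending them to Langfuse
recorded = []
count = add_items(
    lambda **kwargs: recorded.append(kwargs),
    "simple_dataset",
    [("What does the wolf want?", "The wolf wants to eat Little Red Riding Hood")],
)
print(count, recorded[0]["input"])  # 1 {'text': 'What does the wolf want?'}
```

With a live client, the call would be add_items(langfuse.create_dataset_item, "simple_dataset", pairs).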

Datasets also help keep prompt deterioration under control: by evaluating the same dataset over time, you can monitor degradation in a prompt’s performance, which may be caused by a change in the LLM version or by gradual shifts in the input data.
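A simple way to act on this is to re-run the same dataset periodically and compare the average score against a baseline run. The sketch below assumes binary per-example scores (1.0 for a correct answer, 0.0 otherwise, as in the evaluations later in this post); the function name and the 0.05 tolerance are illustrative choices, not features of either platform.

```python
from statistics import mean


def has_degraded(baseline_scores, current_scores, tolerance=0.05):
    """Return True if the mean score fell by more than `tolerance`.

    Hypothetical check: scores are assumed to be in [0, 1].
    """
    drop = mean(baseline_scores) - mean(current_scores)
    return drop > tolerance


print(has_degraded([1.0, 1.0, 1.0, 1.0], [1.0, 0.0, 1.0, 1.0]))  # True
print(has_degraded([1.0, 0.0], [0.0, 1.0]))                      # False
```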

Experimentation and Evaluation

As mentioned earlier, the purpose of creating a dataset is to evaluate a specific part of the application.

Both LangSmith and Langfuse integrate seamlessly with the LangChain evaluation framework and also with RAGAS (a library for automatic metrics designed to evaluate RAG use cases). In this scenario, however, we will create a custom evaluation prompt to assess whether the generated content includes the information from the reference content (generated response vs. correct answer).

For this, we will once again use a ChatPromptTemplate, but this time we will include a few examples of the desired behavior (the few-shot technique) to make the output format more predictable (e.g., returning only “True” or “False”).

LangSmith

To begin, we will write shared code for both platforms: the system template, the template for inserting the human message, and a template with examples:

```python
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.prompts.few_shot import FewShotChatMessagePromptTemplate
from langchain_core.messages.human import HumanMessage

evaluation_prompt_system = """
You will receive two text fragments: one as the reference
and the other as the text to evaluate. Your task is to
determine if the text to evaluate semantically contains
all the information in the reference text. Respond only
with 'True' or 'False'. Consider the following:
1. The evaluated text does not need to be literally the
same as the reference text.
2. The evaluated text must contain all the information
in the reference text.
3. The evaluated text can include additional information
beyond the reference text.
4. Only return 'True' or 'False' depending on whether the
conditions are met.
You will receive:
reference_text: Reference text
evaluate_text: Text to evaluate
"""

human_prompt = """
reference_text: {reference_text}
evaluate_text: {evaluate_text}
"""

# Few-shot examples: each becomes a human message plus the expected AI answer
examples = [
    {
        "reference": "The house is blue",
        "evaluate": "The house is blue and has a brown door",
        "response": "True",
    },
    {
        "reference": "The Russian Revolution began in 1328",
        "evaluate": "The Russian Revolution began in 1917",
        "response": "False",
    },
    {
        "reference": "My cousin has blonde hair and blue eyes",
        "evaluate": "My cousin Pedro has blue eyes",
        "response": "False",
    },
    {
        "reference": "I own two houses in Formentera",
        "evaluate": "I own two beautiful houses in Formentera",
        "response": "True",
    },
]

# Template used to render each example as a human/AI message pair
example_prompt = ChatPromptTemplate.from_messages([
    ("human", "reference_text: {reference}\nevaluate_text: {evaluate}"),
    ("ai", "{response}"),
])

# Creating the few-shot prompt
few_shot_template = FewShotChatMessagePromptTemplate(
    example_prompt=example_prompt,
    examples=examples,
)
```
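To see what the few-shot template produces, the dependency-free sketch below mimics how example_prompt renders each example as a human message followed by the expected AI answer. It is an illustration under that assumption, not LangChain's implementation, and it yields the same kind of (role, content) pairs that the LangSmith code converts the formatted messages into.

```python
def expand_few_shot(examples):
    """Render each example as a (human, ai) message pair, in order."""
    messages = []
    for ex in examples:
        human = f"reference_text: {ex['reference']}\nevaluate_text: {ex['evaluate']}"
        messages.append(("human", human))
        messages.append(("ai", ex["response"]))
    return messages


sample = [
    {"reference": "The house is blue",
     "evaluate": "The house is blue and has a brown door",
     "response": "True"},
]
for role, content in expand_few_shot(sample):
    print(role, "->", repr(content))
# human -> 'reference_text: The house is blue\nevaluate_text: The house is blue and has a brown door'
# ai -> 'True'
```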

Once we have the three templates, we build prompt_to_evaluate and upload it to the platform. Let’s start with LangSmith:

```python
# Creating messages for few-shot examples
examples_msgs = [
    ("human", i.content) if isinstance(i, HumanMessage)
    else ("ai", i.content)
    for i in few_shot_template.format_messages()
]

# Combining the system prompt with few-shot examples and human prompt
prompt_to_evaluate = ChatPromptTemplate.from_messages(
    [("system", evaluation_prompt_system)]
    + examples_msgs
    + [("human", human_prompt)]
)

# Uploading the prompt to LangSmith
client.push_prompt(
    "prompt_for_evaluation",
    object=prompt_to_evaluate,
)
```

For the custom evaluation stage, it is necessary to define:

- A function (a callable) that handles generation. This can internally involve, for example, a call to the API that serves our application.

- A function that performs the evaluation and returns a dictionary with a key field containing the name of the metric and a score field with its numerical value.
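Because the evaluator has to turn the judge's text answer into a number, it is safer to parse the answer explicitly than to call eval() on model output, which would execute arbitrary text as Python. A minimal sketch, assuming the judge replies with "True" or "False" as the few-shot prompt instructs; both helper names are hypothetical.

```python
def judge_to_score(response: str) -> float:
    """Map a 'True'/'False' judge answer to 1.0/0.0, tolerating case, whitespace, and a trailing period."""
    normalized = response.strip().rstrip(".").lower()
    if normalized == "true":
        return 1.0
    if normalized == "false":
        return 0.0
    raise ValueError(f"Unexpected judge response: {response!r}")


def make_result(metric_name: str, response: str) -> dict:
    """Build the {'key': ..., 'score': ...} dictionary an evaluator returns."""
    return {"key": metric_name, "score": judge_to_score(response)}


print(make_result("accuracy", " True\n"))  # {'key': 'accuracy', 'score': 1.0}
```

Raising on any other answer, rather than silently scoring it 0, keeps malformed judge replies visible instead of counting them as failed examples.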

Next, we define both functions. The generation function (generate_response()) initializes the LLM, loads the prompt being evaluated, and invokes the model. It must accept a dictionary matching the inputs defined when the dataset was created, so it contains the same fields (question, in our case). It must also return a dictionary whose fields the evaluation function will later reference (here, output).

The evaluation function (evaluate_shot()) takes a LangSmith Run object and an Example object as arguments. Inside it, we pull the evaluation prompt and retrieve both the example’s expected output (stored under answer in the dataset) and the run’s result, which has the structure returned by the generation function (in our case, only the output field).

```python
from langchain_openai import ChatOpenAI
from langsmith.schemas import Example, Run
from langsmith.evaluation import evaluate
from langchain.chains.question_answering import load_qa_chain

# Function to generate a response using the LLM.
# `chunks` (the retrieved context documents) is assumed to be defined earlier in the pipeline;
# "contexto" and "pregunta" must match the variable names used in the "prompt_rag" prompt.
def generate_response(inputs: dict) -> dict:
    llm = ChatOpenAI(model="gpt-4o", temperature=0.1)
    prompt = client.pull_prompt("prompt_rag")
    chain = load_qa_chain(
        llm=llm,
        chain_type="stuff",
        prompt=prompt,
        document_variable_name="contexto",
    )
    # Perform generation
    response = chain.invoke({
        "input_documents": chunks,
        "pregunta": inputs["question"],
    })
    return {"output": response["output_text"]}

# Function to evaluate the response against the expected output
def evaluate_shot(run: Run, example: Example) -> dict:
    prompt_evaluation = client.pull_prompt("prompt_for_evaluation")
    reference = example.outputs["answer"]
    output_to_evaluate = run.outputs["output"]
    chain = prompt_evaluation | ChatOpenAI(model="gpt-4o")
    response = chain.invoke({
        "evaluate_text": output_to_evaluate,
        "reference_text": reference,
    }).content
    # Map the judge's 'True'/'False' answer to 1.0/0.0 instead of calling eval() on model output
    score = 1.0 if response.strip().lower() == "true" else 0.0
    return {"key": "accuracy", "score": score}
```

Finally, to conduct the evaluation, we use the evaluate() function, passing the dataset, generation function, and evaluation function:

```python
# Evaluate the dataset using the defined generation and evaluation functions
results = evaluate(
    generate_response,
    data="Dataset1",
    evaluators=[evaluate_shot],
)
```
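Conceptually, evaluate() calls the target on each example's inputs and passes the resulting run, together with the example, to every evaluator. The dependency-free sketch below mimics only that data flow. FakeRun, FakeExample, run_experiment, and the exact-match evaluator are hypothetical stand-ins: the real evaluate() also traces the runs and uploads the results as an experiment, and our actual evaluator uses an LLM judge rather than string equality.

```python
from dataclasses import dataclass


@dataclass
class FakeExample:
    """Hypothetical stand-in for langsmith.schemas.Example."""
    inputs: dict
    outputs: dict


@dataclass
class FakeRun:
    """Hypothetical stand-in for langsmith.schemas.Run."""
    outputs: dict


def run_experiment(target, examples, evaluators):
    """Run target on each example's inputs, then apply every evaluator."""
    results = []
    for example in examples:
        run = FakeRun(outputs=target(example.inputs))
        scores = [evaluator(run, example) for evaluator in evaluators]
        results.append({"outputs": run.outputs, "scores": scores})
    return results


def fixed_answer_target(inputs: dict) -> dict:
    # Same contract as generate_response(): dict in, {"output": ...} out, but no LLM call
    return {"output": "Little Red Riding Hood was red."}


def exact_match(run, example) -> dict:
    # Simplified evaluator: exact string match instead of the LLM judge
    matched = run.outputs["output"] == example.outputs["answer"]
    return {"key": "accuracy", "score": float(matched)}


toy_examples = [
    FakeExample({"question": "What color was Little Red Riding Hood?"},
                {"answer": "Little Red Riding Hood was red."}),
    FakeExample({"question": "What does the wolf want?"},
                {"answer": "The wolf wants to eat Little Red Riding Hood."}),
]
toy_results = run_experiment(fixed_answer_target, toy_examples, [exact_match])
print([r["scores"][0]["score"] for r in toy_results])  # [1.0, 0.0]
```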

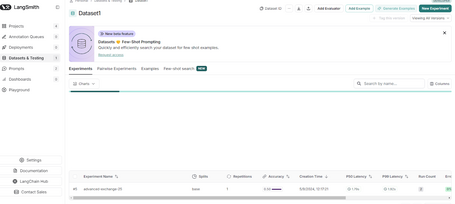

We can now go to LangSmith to view our experiment, which has automatically been assigned a name made of two random words and a number separated by hyphens; in this case, advanced-exchange-25.

When we open the experiment, we can add another one to compare against it. As shown, the advanced-exchange-25 column contains the actual responses and their respective scores. In this case, the first response has a score of 0 because the actual response says, “The wolf wants to eat the grandmother, Little Red Riding Ho…”, whereas the reference states, “The wolf wants to eat Little Red Riding Hood.” The second response has a score of 1 because it says, “Little Red Riding Hood is red,” which matches the reference, “Little Red Riding Hood was red.”
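Per-example scores are often summarized as a single number per experiment. Assuming a plain mean (the summary LangSmith displays may be computed differently), the two scores above, 0 and 1, give an accuracy of 0.5. A small sketch using the {"key": ..., "score": ...} result format defined earlier; the helper name is hypothetical.

```python
def mean_score(results, key="accuracy"):
    """Average the scores of all evaluator results with the given key."""
    scores = [r["score"] for r in results if r["key"] == key]
    if not scores:
        raise ValueError(f"No results with key {key!r}")
    return sum(scores) / len(scores)


experiment = [
    {"key": "accuracy", "score": 0.0},  # the wolf answer
    {"key": "accuracy", "score": 1.0},  # the color answer
]
print(mean_score(experiment))  # 0.5
```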

Langfuse

Now we’ll build the prompt_to_evaluate for Langfuse, reusing the templates we defined for LangSmith.

```python
examples_msgs = [
    {"role": "human", "content": i.content} if isinstance(i, HumanMessage)
    else {"role": "ai", "content": i.content}
    for i in few_shot_template.format_messages()
]

prompt_to_evaluate = (
    [{"role": "system", "content": evaluation_prompt_system}]
    + examples_msgs
    + [{"role": "human", "content": human_prompt}]
)

langfuse.create_prompt(
    name="evaluation_prompt",
    prompt=prompt_to_evaluate,
    labels=["production"],
    tags=["evaluation", "simple_evaluation_prompt"],
    config={"llm": "gpt-4o"},
    type="chat",
)
```

Next, we define the function that runs the evaluation prompt. Since it also involves a generation, we trace it and name the trace so that it is identified as an evaluation trace:

```python
from langchain_openai import ChatOpenAI
from langfuse.decorators import observe, langfuse_context

@observe()
def generate_evaluation(llm_model, reference_text, evaluate_text, model_kwargs, metadata):
    # LangChain callback handler bound to the current Langfuse trace
    langfuse_handler = langfuse_context.get_current_langchain_handler()
    langfuse_context.update_current_trace(
        name="evaluation_trace",
        tags=["evaluation", "first_evaluation"],
        metadata=metadata,
    )
    llm = ChatOpenAI(model=llm_model, **model_kwargs)
    # Perform the generation (evaluation_prompt is loaded from Langfuse in the next step)
    chain = evaluation_prompt | llm
    response = chain.invoke(
        {
            "reference_text": reference_text,
            "evaluate_text": evaluate_text,
        },
        config={"callbacks": [langfuse_handler]},
    )
    return response
```

We instantiate the dataset and the evaluation prompt:

```python
# Use the same name the dataset was created with
dataset = langfuse.get_dataset("simple_dataset")
evaluation_prompt = ChatPromptTemplate.from_messages(
    langfuse.get_prompt("evaluation_prompt").get_langchain_prompt()
)
```

Finally, we iterate through the dataset, apply the evaluation, and save the score:

```python
for item in dataset.items:
    # Each iteration is recorded under the dataset run "evaluation"
    with item.observe(
        run_name="evaluation",
        run_description="First Evaluation",
        run_metadata={"model": "gpt-4o"},
    ) as trace_id:
        question = item.input["text"]
        expected_output = item.expected_output["text"]

        # Perform the generation. This assumes a Langfuse-traced generate_response()
        # taking (model, question, chunks, model_kwargs), which differs from the
        # LangSmith version defined above.
        response = generate_response(
            "gpt-4o",
            question,
            chunks,
            {"temperature": 0.1},
        )["output_text"]

        # Perform the evaluation
        evaluation_value = generate_evaluation(
            "gpt-4o",
            expected_output,
            response,
            {"temperature": 0.1},
            metadata={"date": "2024-08-06"},
        ).content

        # Save the score, mapping 'True'/'False' to 1.0/0.0 instead of calling eval()
        langfuse.score(
            trace_id=trace_id,
            name="evaluate_" + item.id,
            value=1.0 if evaluation_value.strip().lower() == "true" else 0.0,
        )
```

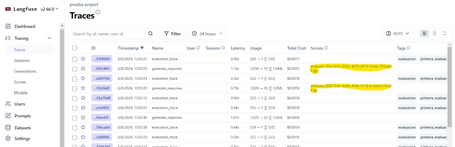

Now we can observe the evaluation traces in Langfuse, along with the names, tags, and their respective scores.

We can also go directly to the “scores” tab if we’re only interested in tracking how the scores have changed over time.

Finally, the dataset view also records this information in the “runs” tab, allowing us to compare evaluations for the same dataset at a glance.

Conclusions

As we have seen, these are two very similar platforms, but here’s a summary of their key differences:

- LangSmith is fully integrated with LangChain, which makes it easier to plug into LangChain-based applications. Langfuse is also compatible with LangChain but requires some code changes, such as passing its callback handler explicitly.

- LangSmith is not open-source, and self-hosting it requires a paid license. Langfuse, on the other hand, offers a free self-hosted version, although you have to manage your own infrastructure.

- At the time of writing, LangSmith does not support separating prompt, dataset, or experiment versions by project (only tracing is project-specific). That said, it appears to be more stable than Langfuse.

- Though not discussed in this post, LangSmith includes highly useful features in its UI, such as the playground and LangGraph for easily using agents (currently only available for paid accounts).

Both platforms are excellent options for monitoring your LLM-based applications—just choose the one that best fits your needs.
