In the previous post, we took our first dive into Spring AI and its APIs. We talked about classes like ChatClient and ChatModel, which are the main entry points for interacting with LLMs. In this post, we continue exploring the module and look at multimodality, prompts, and a cross-cutting concern: observability in models.

Multimodality

Humans acquire knowledge from various "data sources" (images, sound, text, etc.), so it's fair to say that our experiences are multimodal. In contrast, Machine Learning traditionally focused on a single modality. Only recently have models appeared that can process multiple inputs at the same time, such as image and text, or audio and video.

Multimodality refers to a model's ability to process information from multiple sources, and Spring AI supports it through its Message API. The content field in the UserMessage class is used for text, while the media field allows the inclusion of additional content such as images, audio, or video, each specified by the corresponding MimeType.

    @RestController
    public class MultimodalController {

        private final OllamaChatModel chatModel;

        public MultimodalController(OllamaChatModel chatModel) {
            this.chatModel = chatModel;
        }

        @GetMapping("/multimodal")
        String multimodal() {
            // Image loaded from the classpath
            ClassPathResource imageResource = new ClassPathResource("/static/multimodal.jpg");
            // The text is the message content; the image travels as Media with its MIME type
            UserMessage userMessage = new UserMessage("Explain to me what you see in the image",
                    new Media(MimeTypeUtils.IMAGE_JPEG, imageResource));
            return chatModel.call(new Prompt(List.of(userMessage))).getResult().getOutput().getText();
        }
    }

To see it in action, we’ll request a description of the following image:

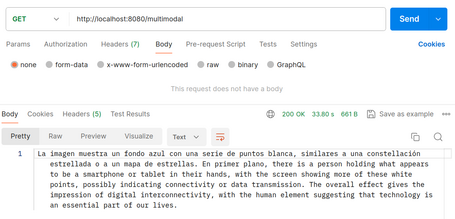

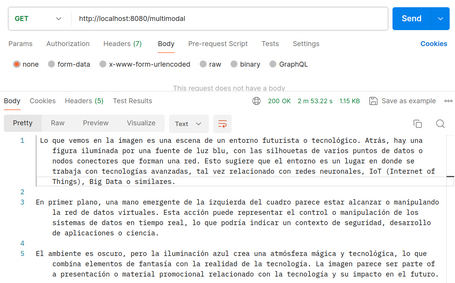

We asked the model in Spanish and got responses like:

As shown, the model used can produce strange responses, even mixing languages. Response time is another important aspect to keep in mind (for model calls in general), so it's worth handling calls asynchronously and looking at performance improvements in the code, such as virtual threads.
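As a rough illustration of the asynchrony point, the sketch below runs several slow calls concurrently using only the JDK. It is a minimal sketch under assumptions: `slowModelCall` is a hypothetical stand-in for a blocking call such as `chatModel.call(...)`, and the class and method names are ours, not part of Spring AI.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

final class AsyncModelCalls {

    // Hypothetical stand-in for a blocking call such as chatModel.call(prompt).
    static String slowModelCall(String prompt) {
        try {
            Thread.sleep(100); // simulate model latency
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting for the model", e);
        }
        return "answer to: " + prompt;
    }

    // Runs every prompt concurrently and returns the answers in input order.
    static List<String> callAll(List<String> prompts) {
        // On Java 21+, Executors.newVirtualThreadPerTaskExecutor() is an alternative here.
        ExecutorService executor = Executors.newFixedThreadPool(Math.max(1, prompts.size()));
        try {
            List<CompletableFuture<String>> futures = prompts.stream()
                    .map(p -> CompletableFuture.supplyAsync(() -> slowModelCall(p), executor))
                    .toList();
            // join() blocks until each answer is ready; order follows the input list.
            return futures.stream().map(CompletableFuture::join).toList();
        } finally {
            executor.shutdown();
        }
    }
}
```

With three prompts, the total wait is close to one call's latency rather than three, because the calls overlap.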

Prompts

As mentioned earlier, prompts are the inputs sent to the models. Their design and structure significantly influence the model’s responses. Spring AI handles prompts much like Spring MVC handles the “view” layer: the prompt text contains markers that are replaced with the appropriate values, so content can be sent dynamically.
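To make the idea of markers concrete, here is a minimal sketch of placeholder substitution in plain Java. It only illustrates the concept and is not how Spring AI implements its templates; the `PlaceholderRendering` class and its `render` method are hypothetical names.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class PlaceholderRendering {

    // Matches markers such as {adjective} or {country}.
    private static final Pattern MARKER = Pattern.compile("\\{(\\w+)\\}");

    // Replaces each {name} marker with its value; fails fast on a missing variable.
    static String render(String template, Map<String, String> variables) {
        Matcher matcher = MARKER.matcher(template);
        StringBuilder out = new StringBuilder();
        while (matcher.find()) {
            String name = matcher.group(1);
            String value = variables.get(name);
            if (value == null) {
                throw new IllegalArgumentException("No value for placeholder: " + name);
            }
            // quoteReplacement keeps '$' and '\' in user values from being interpreted.
            matcher.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        matcher.appendTail(out);
        return out.toString();
    }
}
```

Failing on a missing variable is a design choice in this sketch: a prompt that silently keeps a raw `{marker}` tends to be harder to debug than an early exception.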

The structure of prompts has evolved along with AI. Initially, they were just simple strings, but now many include placeholders for specific inputs (for example: USER:<user>

Roles

Each message is associated with a role. Roles categorize the message, providing context and purpose for the model, improving response effectiveness. The main roles are:

- System: guides the behavior and style of the response by setting rules for how it should respond. It’s like giving instructions before starting a conversation.

- User: the input provided by the user. This is the primary role to generate a response.

- Assistant: the AI’s “reply” to the user that maintains the flow of the conversation. Tracking these responses ensures coherent interactions. It may also include “Function Tool Call” requests to perform functions such as calculations or retrieving external data.

- Tool/Function: focuses on returning additional information in response to Tool Call Assistant messages.

In Spring AI, roles are defined as an enum.

    public enum MessageType {
        USER("user"),
        ASSISTANT("assistant"),
        SYSTEM("system"),
        TOOL("tool");
        ...
    }
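The following self-contained sketch shows how role-tagged messages line up in a conversation. The `Role` enum and `ChatMessage` record are local, hypothetical types that mirror the roles described above; they are not Spring AI classes.

```java
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;

final class RoleTaggedConversation {

    // Mirrors the four roles described above; the names are local to this sketch.
    enum Role { SYSTEM, USER, ASSISTANT, TOOL }

    // A message is some text plus the role that produced it.
    record ChatMessage(Role role, String text) { }

    // Renders the conversation as one "role: text" line per message.
    static String transcript(List<ChatMessage> messages) {
        return messages.stream()
                .map(m -> m.role().name().toLowerCase(Locale.ROOT) + ": " + m.text())
                .collect(Collectors.joining("\n"));
    }
}
```

A conversation would typically start with a SYSTEM message that sets the rules, followed by alternating USER and ASSISTANT messages, with TOOL messages appearing only in reply to a tool call requested by the assistant.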

Prompt Template

The PromptTemplate class is key to managing prompt templates, making it easier to create structured prompts.

public class PromptTemplate implements PromptTemplateActions, PromptTemplateMessageActions {}

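The interfaces implemented by the class cover different aspects of prompt creation (PromptTemplateStringActions does not appear in the declaration above because PromptTemplateActions extends it):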

- PromptTemplateStringActions: for creating prompts based on Strings.

- PromptTemplateMessageActions: for creating prompts using Message objects.

- PromptTemplateActions: for creating a Prompt object that can be sent to the ChatModel.

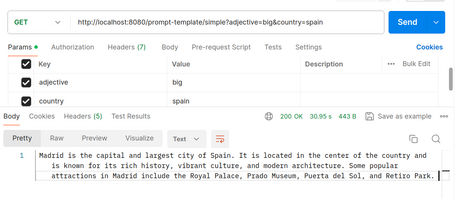

Some examples of using PromptTemplate:

    @GetMapping("/simple")
    String simplePromptTemplate(@RequestParam String adjective, @RequestParam String country) {
        PromptTemplate promptTemplate = new PromptTemplate("Give a {adjective} city from {country}");
        Prompt prompt = promptTemplate.create(Map.of("adjective", adjective, "country", country));
        return chatModel.call(prompt).getResult().getOutput().getText();
    }

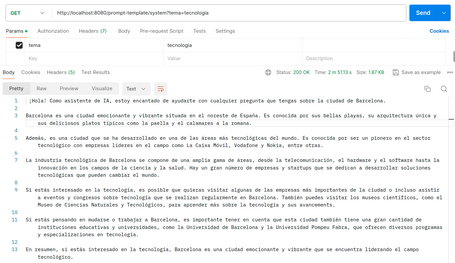

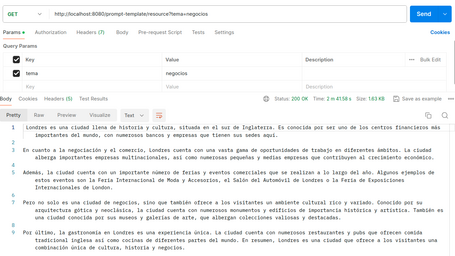

    @GetMapping("/system")
    String systemPromptTemplate(@RequestParam String topic) {
        String userText = """
                Give me information about Barcelona.
                Respond with at least 5 lines.
                """;
        Message userMessage = new UserMessage(userText);

        String systemText = """
                You are an AI assistant that helps people with information about cities.
                You must respond with information about the city on the topic {topic}.
                Respond as if you were writing a travel blog.
                """;
        SystemPromptTemplate systemPromptTemplate = new SystemPromptTemplate(systemText);
        Message systemMessage = systemPromptTemplate.createMessage(Map.of("topic", topic));

        Prompt prompt = new Prompt(List.of(userMessage, systemMessage));
        return chatModel.call(prompt).getResult().getOutput().getText();
    }

In addition to using Strings for prompt creation, Spring AI supports creating prompts through resources (Resource), allowing you to define the prompt in a file that can then be used as a PromptTemplate:

    @Value("classpath:/static/prompts/system-message.st")
    private Resource systemResource;

    SystemPromptTemplate systemPromptTemplate = new SystemPromptTemplate(systemResource);

Prompt Engineering

The quality and structure of prompts have such an impact on model responses that they have given rise to a new profession: prompt engineering. The developer community continuously analyzes and shares best practices for improving prompts in different scenarios, and a few key points for creating effective prompts come up repeatedly:

- Instructions: Create clear and concise instructions, just as you would when communicating with a person. This helps the AI understand what is expected.

- External context: Provide relevant contextual information to help the AI understand the broader scenario.

- User input: This is the user’s direct question or request — the core of the prompt.

- Output format: Specify the desired response format, even though this may sometimes be loosely followed. Providing request-response examples helps the AI better understand user intent.
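The four parts listed above can be assembled into a single prompt string. The sketch below is one possible layout, not a recommended standard; the section labels and the `StructuredPrompt` class are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.List;

final class StructuredPrompt {

    // Joins the four parts into one prompt, skipping any part that is null or blank.
    static String build(String instructions, String context, String userInput, String outputFormat) {
        List<String> sections = new ArrayList<>();
        addSection(sections, "Instructions", instructions);
        addSection(sections, "Context", context);
        addSection(sections, "Question", userInput);
        addSection(sections, "Output format", outputFormat);
        // A blank line between sections keeps each part visually distinct for the model.
        return String.join("\n\n", sections);
    }

    private static void addSection(List<String> sections, String label, String body) {
        if (body != null && !body.isBlank()) {
            sections.add(label + ":\n" + body.strip());
        }
    }
}
```

In a Spring AI application, the instructions and output format would usually go in the system message and the question in the user message, rather than in one string.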

Spring’s official documentation links to multiple resources to improve prompts, and there is also a large community online dedicated to this task.

Observability

As with any Spring framework or module, observability is essential for maintaining applications and understanding how they behave, especially when issues arise. Spring AI provides metrics and traces for several of the components discussed earlier, such as ChatClient and ChatModel, and for others to be covered later.

The metrics and traces expose details such as the parameters passed to the model, response times, token counts, and the model used. Take special care with what gets logged, as it could leak sensitive user data. You can find all available metrics and data in the official documentation.

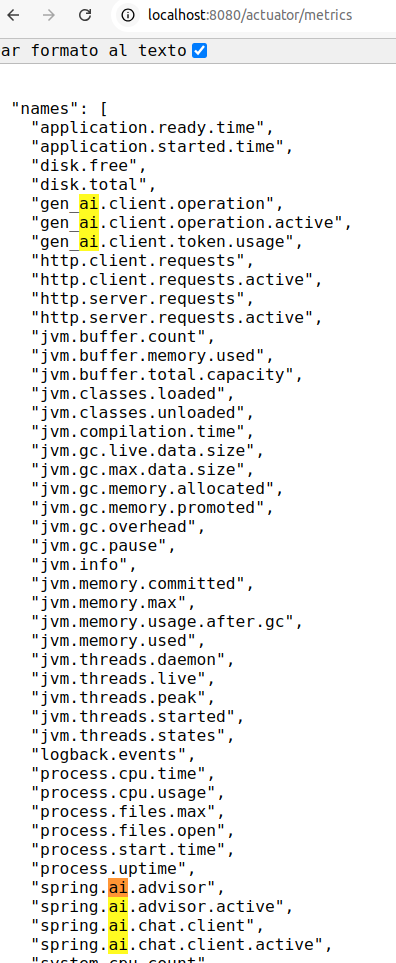

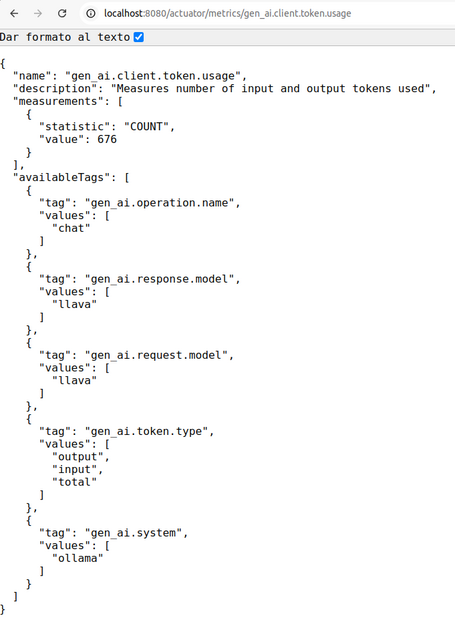

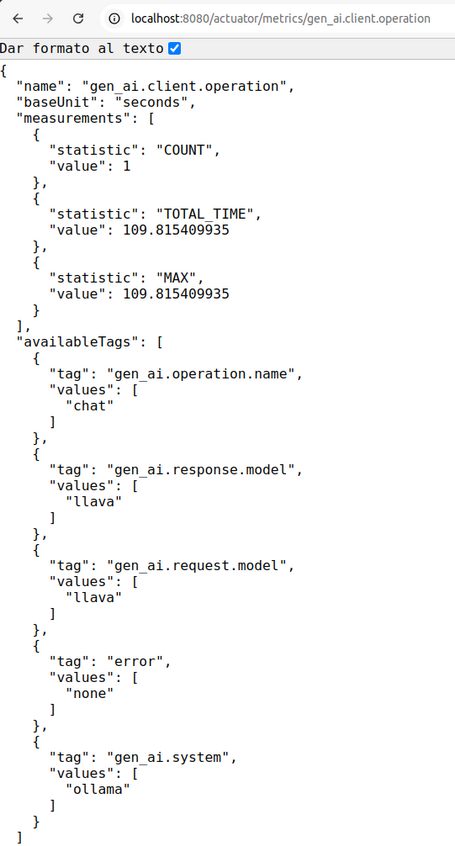

By enabling tracing with Actuator, Zipkin, and OpenTelemetry dependencies, you can access metrics such as:

These include information such as tokens, model used, response times, etc.

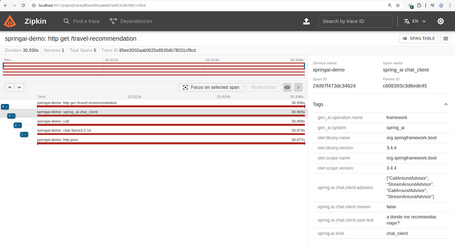

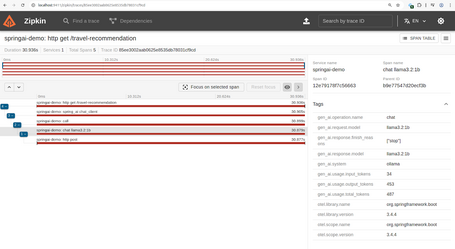

Moreover, with tracing enabled through Zipkin and OpenTelemetry, traces also show up in the Zipkin server:

If you look closely, you can see the user input (property spring.ai.chat.client.user.text). This is possible because it was enabled using the property spring.ai.chat.client.observations.include-input, though Spring AI does show a warning about this in the trace logs, as it may expose sensitive user information.
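If user input does need to appear in logs or traces, one option is to mask obvious personal data first. The sketch below is a deliberately simple assumption-laden example: the `LogRedaction` class is hypothetical, it only handles email addresses, and it is not a Spring AI feature.

```java
import java.util.regex.Pattern;

final class LogRedaction {

    // Deliberately simple email pattern; real PII detection needs more than one regex.
    private static final Pattern EMAIL = Pattern.compile("[\\w.+-]+@[\\w-]+(\\.[\\w-]+)+");

    // Returns the text with every email-like substring replaced by a fixed token.
    static String redact(String userText) {
        return EMAIL.matcher(userText).replaceAll("[redacted-email]");
    }
}
```

A helper like this would be applied to the text before it is written to a log line or attached to a span, leaving the prompt sent to the model untouched.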

Demo with Ollama

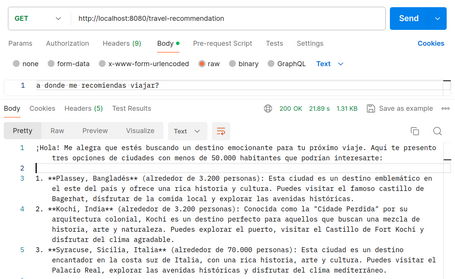

With everything covered so far, we can now build a demo using Ollama. Once Ollama is up and running on your system, the app can be created with various endpoints to test different Spring AI features:

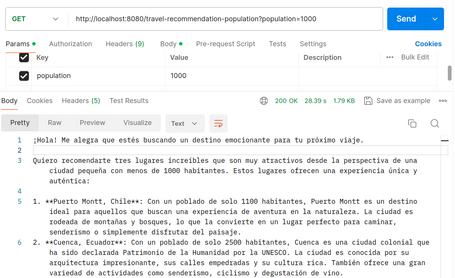

- travel-recommendation: an endpoint that recommends travel destinations (it actually accepts any user input, so it could be used for other types of queries).

- travel-recommendation-population: an endpoint that recommends cities below a specified population. It illustrates how to pass a parameter to the system input. You may notice the model has limited accuracy when filtering results.

- travel-recommendation/chat-response: works similarly to the first endpoint but returns a ChatResponse object that includes metadata such as tokens and model used (the text field contains the actual reply, shortened here for simplicity).

    {
      "result": {
        "output": {
          "messageType": "ASSISTANT",
          "metadata": {
            "messageType": "ASSISTANT"
          },
          "toolCalls": [],
          "media": [],
          "text": "..."
        },
        "metadata": {
          "finishReason": "stop",
          "contentFilters": [],
          "empty": true
        }
      },
      "metadata": {
        "id": "",
        "model": "llama3.2:1b",
        "rateLimit": {
          "requestsLimit": 0,
          "requestsRemaining": 0,
          "requestsReset": "PT0S",
          "tokensLimit": 0,
          "tokensRemaining": 0,
          "tokensReset": "PT0S"
        },
        "usage": {
          "promptTokens": 34,
          "completionTokens": 428,
          "totalTokens": 462
        },
        "promptMetadata": [],
        "empty": false
      },
      "results": [
        {
          "output": {
            "messageType": "ASSISTANT",
            "metadata": {
              "messageType": "ASSISTANT"
            },
            "toolCalls": [],
            "media": [],
            "text": "..."
          },
          "metadata": {
            "finishReason": "stop",
            "contentFilters": [],
            "empty": true
          }
        }
      ]
    }

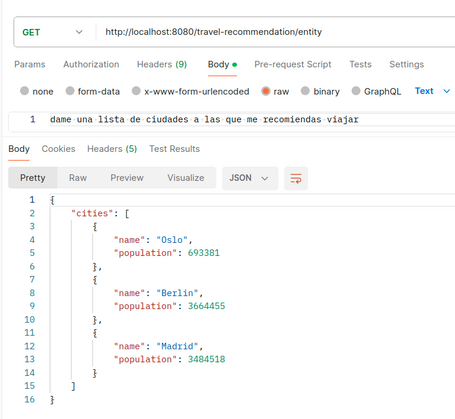

- travel-recommendation/entity: works just like the initial endpoint, but transforms the model response into domain model classes from our application:

Note that this endpoint is very prone to failure, because models frequently do not generate valid JSON and the conversion into domain classes then breaks (this is still one of the key areas pending improvement in both Spring AI and the models).

- chat-client-programmatically: this endpoint illustrates how to create a ChatClient bean programmatically instead of letting Spring auto-inject it. This gives more flexibility when using multiple models in the same application. Functionally, it behaves the same as the travel-recommendation endpoint.

- multimodality: this endpoint allows you to send both text and image inputs to the model. In this case, the model is asked to describe the image. This example is detailed in the multimodality section.

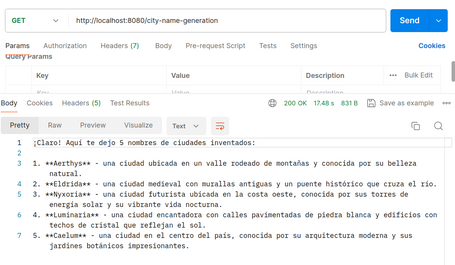

- city-name-generation: generates 5 fictional city names. This serves as an example of how to modify the model's "temperature" setting (for more creative responses). When calling the endpoint, the response might look like this:

- /prompt-template/simple: endpoint that provides information about a city using an adjective (e.g., large, small, coastal) and country. It serves as an example of how prompt templates are generated.

- /prompt-template/system: endpoint that provides information on a specific topic (technology, business, industry, etc.) related to the city of Barcelona. It showcases how to generate system prompt templates.

- /prompt-template/resource: this endpoint performs the same task as the previous one but retrieves the systemPromptTemplate from an external file.
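The usage block returned by the travel-recommendation/chat-response endpoint can feed a simple cost estimate. The sketch below is a hedged illustration: the `TokenUsage` class is hypothetical, and the per-1,000-token prices are inputs you would supply yourself, since a local Ollama model has no per-token billing.

```java
final class TokenUsage {

    // Holds the two counts reported in the "usage" block of a chat response.
    record Usage(int promptTokens, int completionTokens) {
        int totalTokens() {
            return promptTokens + completionTokens;
        }
    }

    // Prices are per 1,000 tokens and are hypothetical inputs, not real provider rates.
    static double estimateCost(Usage usage, double promptPricePer1k, double completionPricePer1k) {
        return usage.promptTokens() / 1000.0 * promptPricePer1k
                + usage.completionTokens() / 1000.0 * completionPricePer1k;
    }
}
```

For the sample response shown earlier, `new TokenUsage.Usage(34, 428).totalTokens()` returns 462, which matches the totalTokens field in the JSON.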

The application includes the dependencies and configuration properties needed to enable observability of the various requests made to the models. To export traces to a Zipkin server, you can spin up a local Zipkin instance with Docker using the following command:

docker run -d -p 9411:9411 openzipkin/zipkin

Keep in mind that, depending on the prompt and the accuracy of the model, responses may be nonsensical or fail to meet the specified requirements, which can lead to formatting errors. Memory-related failures are also possible when the model needs more memory than the machine has available, with traces such as:

java.lang.RuntimeException: [500] Internal Server Error - {"error":"model requires more system memory (6.1 GiB) than is available (5.3 GiB)"}

You can download the sample application code at this link.

Conclusion

In this second part of the Spring AI series, we’ve explored how to run multimodal models and how to manage model input through various prompt techniques. Observability is a crucial part of any Spring module, and here it offers relevant metrics on model behavior, which matter both for the application’s performance and for potential LLM usage billing.

While what we’ve seen so far can be considered the "Hello World" of AI, it’s important to note that this is the most basic layer of the Spring AI module — and it already provides plenty of capabilities to consider for real-world use cases, especially those involving chatbots.

In future posts, we’ll dive into more advanced features of Spring AI. Let me know what you think in the comments! 👇

