Now that you understand the basics of Spring AI and how to interact with models in the simplest way, let’s explore more advanced concepts for building more powerful applications that take full advantage of AI’s capabilities.

Some of the features we’ll explore next often go unnoticed but are crucial for improving user experience or integration with other applications. One example is prompt context, which allows a chatbot to understand indirect references during a conversation:

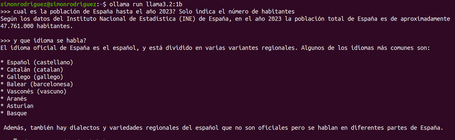

Translated into English:

User: What is the population of Spain up to the year 2023? Just state the number of inhabitants.

Model: According to data from the Spanish National Statistics Institute (INE), the total population of Spain in 2023 is approximately 47,761,000 inhabitants.

User: And what language is spoken?

Model: The official language of Spain is Spanish, and it is divided into several regional variants. Some of the most common languages are: Spanish (Castilian), Catalan, Galician, Balearic (Mallorcan), Basque, Aranese, Asturian, Basque. In addition, there are dialects and regional varieties of Spanish that are not official but are spoken in different parts of Spain.

In this example, we can see how the second question doesn’t need to explicitly mention that it refers to Spain.

Another feature we’ll cover is the ability to use “tools” to enrich the model’s information. LLMs are pretrained models, meaning they contain knowledge only up to a certain date, and can’t deliver real-time results out of the box.

Popular applications like ChatGPT, DeepSeek, and others also retrieve real-time data through external functionalities beyond the LLM itself.

Advisors

Advisors allow you to intercept, modify, and enrich model interactions. Benefits include the use of common AI patterns, transformation of input/output data, and portability across models and use cases.

Core Components

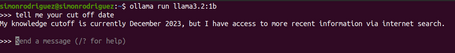

As seen in the image above, the API is built around the CallAroundAdvisor and CallAroundAdvisorChain interfaces (and their streaming equivalents), plus AdvisedRequest and AdvisedResponse, which represent the request and the response. Both carry an adviseContext used to share state throughout the advisor chain.

nextAroundCall and nextAroundStream are the key methods: they are where an advisor can examine, customize, and forward prompts, block requests, inspect responses, and raise exceptions. There are also methods like getOrder (to control execution order) and getName (to provide a unique advisor name).

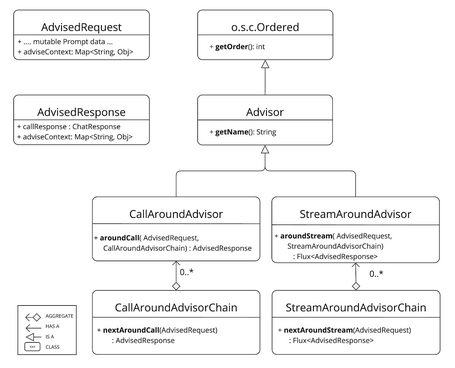

The Spring AI advisor chain executes advisors sequentially based on the value returned by getOrder. Lower values run first; the last advisor sends the request to the LLM. The image above shows the interaction flow between advisors and the model:

- Spring AI creates an AdvisedRequest from the user’s prompt with an empty AdvisorContext.

- Each Advisor processes the request, modifying or blocking it (if blocked, it must return a response).

- The last framework-created Advisor sends the request to the Chat Model.

- The model’s response is returned to the Advisors as an AdvisedResponse.

- Each Advisor can process or modify the response.

- The AdvisedResponse is sent back to the client.

Advisor Execution Order

Advisor execution order in the chain is defined by the getOrder method. Key points:

- Lower-order Advisors execute first. Higher values have lower priority.

- The Advisor chain works like a stack:

  - The first Advisor is the first to process the request.

  - That same Advisor is the last to process the response.

- To control execution order:

  - Use values near Ordered.HIGHEST_PRECEDENCE to run first (early in requests, late in responses).

  - Use values near Ordered.LOWEST_PRECEDENCE to run last (late in requests, early in responses).

- If multiple Advisors have the same order, their execution order is not guaranteed.

If you need an Advisor to run first in both the request and the response:

- Use separate Advisors for each direction.

- Assign different order values to each.

- Use the Advisor Context to share state between them.
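The stack-like behaviour described above can be illustrated with a small plain-Java simulation. This is only a sketch: SimpleAdvisor and the trace method are hypothetical stand-ins invented for the example, not Spring AI types. It sorts the advisors by order and records when each one sees the request and the response.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Simplified stand-in for an advisor chain; these types are NOT the Spring AI API.
class AdvisorOrderSketch {

    // Hypothetical minimal advisor: a name plus an order value.
    record SimpleAdvisor(String name, int order) {}

    // Runs the chain and records when each advisor sees the request and the response.
    static List<String> trace(List<SimpleAdvisor> advisors) {
        List<SimpleAdvisor> sorted = new ArrayList<>(advisors);
        sorted.sort(Comparator.comparingInt(SimpleAdvisor::order)); // lower order runs first
        List<String> events = new ArrayList<>();
        proceed(sorted, 0, events);
        return events;
    }

    private static void proceed(List<SimpleAdvisor> chain, int index, List<String> events) {
        if (index == chain.size()) {
            events.add("model call"); // end of the chain: the request reaches the model
            return;
        }
        SimpleAdvisor current = chain.get(index);
        events.add(current.name() + " request");
        proceed(chain, index + 1, events);        // hand over to the next advisor
        events.add(current.name() + " response"); // responses unwind in reverse order
    }

    public static void main(String[] args) {
        List<SimpleAdvisor> advisors = List.of(
                new SimpleAdvisor("logging", 100),
                new SimpleAdvisor("memory", 0));
        trace(advisors).forEach(System.out::println);
    }
}
```

With these two advisors, "memory" (order 0) sees the request first and the response last, while "logging" (order 100) sits closest to the model call.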

Implementing an Advisor

To create an Advisor, you must implement CallAroundAdvisor and its aroundCall method:

    public class CustomAdvisor implements CallAroundAdvisor {

        @Override
        public String getName() {
            return this.getClass().getSimpleName();
        }

        @Override
        public int getOrder() {
            return 0;
        }

        @Override
        public AdvisedResponse aroundCall(AdvisedRequest advisedRequest, CallAroundAdvisorChain chain) {
            AdvisedResponse originalResponse = chain.nextAroundCall(this.beforeCall(advisedRequest));
            return this.afterCall(originalResponse);
        }

        // Methods to complete the behaviour
    }
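The beforeCall and afterCall helpers are not shown above. As a rough, framework-free illustration of the same "around" shape, the sketch below uses a plain String in place of AdvisedRequest and AdvisedResponse and a Function in place of the rest of the chain. Both substitutions are assumptions made to keep the example self-contained. The uppercase/lowercase transformation mirrors the custom advisor used in the demo later in this post.

```java
import java.util.Locale;
import java.util.function.Function;

// Plain-Java model of the "around" pattern; String replaces AdvisedRequest/AdvisedResponse
// and Function<String, String> replaces the rest of the advisor chain (both are simplifications).
class AroundPatternSketch {

    // Hypothetical pre-processing step: send the user text in uppercase.
    static String beforeCall(String request) {
        return request.toUpperCase(Locale.ROOT);
    }

    // Hypothetical post-processing step: return the model text in lowercase.
    static String afterCall(String response) {
        return response.toLowerCase(Locale.ROOT);
    }

    // Same shape as aroundCall: transform the request, delegate, transform the response.
    static String aroundCall(String request, Function<String, String> nextInChain) {
        String originalResponse = nextInChain.apply(beforeCall(request));
        return afterCall(originalResponse);
    }

    public static void main(String[] args) {
        // Fake "model" that simply echoes what it received.
        Function<String, String> fakeModel = prompt -> "Echo: " + prompt;
        System.out.println(aroundCall("Hello Spain", fakeModel));
    }
}
```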

Advisors Provided by the Framework

Spring AI provides several built-in Advisors:

- Chat Memory:

- MessageChatMemoryAdvisor: retrieves memory data and adds it as a collection of messages in the prompt. This enables conversation history to be maintained, although not all models support this feature.

    memoryChatClient = chatClientBuilder
            .clone()
            .defaultAdvisors(new MessageChatMemoryAdvisor(new InMemoryChatMemory()))
            .build();

- PromptChatMemoryAdvisor: retrieves memory data and includes it in the system prompt.

- VectorStoreChatMemoryAdvisor: retrieves data from a VectorStore and includes it in the system prompt. This Advisor is useful for efficiently retrieving relevant information from large datasets.

- Question Answering:

- QuestionAnswerAdvisor: leverages a VectorStore to provide question-answering capabilities by implementing the RAG pattern.

- Content Safety:

- SafeGuardAdvisor: advisor that prevents the model from generating inappropriate content.
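To make the idea behind the chat memory advisors more concrete, here is a minimal plain-Java sketch of what a message-based memory does conceptually: it stores previous exchanges per conversation and prepends them to each new prompt. The class and method names are hypothetical and the logic is deliberately simplified; it is not how Spring AI implements it.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Simplified illustration of what a message-based memory advisor does; not the Spring AI API.
class ChatMemorySketch {

    // Conversation id -> messages exchanged so far.
    private final Map<String, List<String>> history = new HashMap<>();

    // Builds the full message list sent to the model: stored history plus the new user message.
    List<String> buildPrompt(String conversationId, String userMessage) {
        List<String> messages = new ArrayList<>(history.getOrDefault(conversationId, List.of()));
        messages.add("user: " + userMessage);
        return messages;
    }

    // Stores both sides of the exchange so that later prompts can include them.
    void remember(String conversationId, String userMessage, String assistantMessage) {
        List<String> stored = history.computeIfAbsent(conversationId, id -> new ArrayList<>());
        stored.add("user: " + userMessage);
        stored.add("assistant: " + assistantMessage);
    }

    public static void main(String[] args) {
        ChatMemorySketch memory = new ChatMemorySketch();
        memory.remember("demo", "Hello, my name is Jose.", "Hi, Jose!");
        memory.buildPrompt("demo", "What is my name?").forEach(System.out::println);
    }
}
```

Because the earlier exchange travels with the second question, the model can answer it even though the model itself keeps no state between calls.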

Best Practices

- Each Advisor should be focused on a specific task.

- Use AdviseContext to share state between Advisors when needed.

- For maximum flexibility, implement both the normal and the streaming version of each Advisor.

- Carefully plan the order of Advisors in the execution chain to ensure proper data flow.

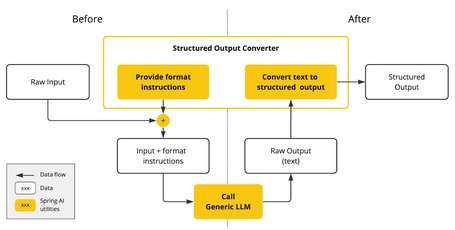

Structured Output

This API helps convert model output into a structured format to facilitate integration with other components/applications.

Before calling the model, the Converter adds instructions to the prompt so that the model generates the response in the desired format. Afterwards, the Converter transforms the output into instances of the required format, such as JSON, XML, or domain entities.

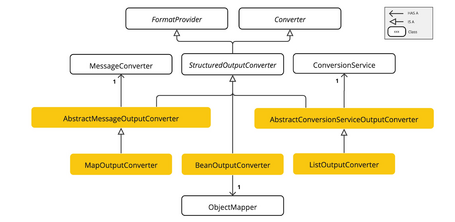

Converters Provided by Spring AI

Spring AI currently provides the following Converter implementations:

- AbstractConversionServiceOutputConverter: provides a GenericConversionService to transform LLM output into the desired format. It does not include a default FormatProvider implementation.

- AbstractMessageOutputConverter: provides a MessageConverter to transform LLM output into the desired format. It does not include a default FormatProvider implementation.

- BeanOutputConverter: configured with a Java class or a ParameterizedTypeReference, it uses a FormatProvider to ask the model for JSON that matches that type. It then uses ObjectMapper to deserialize the JSON into an instance of the Java class. This is the Converter used in previous examples when calling the entity() method. It supports the @JsonPropertyOrder annotation to define the order of properties in the JSON.

- MapOutputConverter: extends AbstractMessageOutputConverter with a FormatProvider implementation that asks the model for a JSON response. It also includes a converter implementation that uses the MessageConverter to transform the JSON into a Map (java.util.Map<String, Object>) instance.

    @GetMapping("/map")
    Map<String, Object> getStandardOutputMap() {
        MapOutputConverter outputConverter = new MapOutputConverter();
        String format = outputConverter.getFormat();
        String template = """
                Give me a list of the 3 most important cryptocurrencies with their name, abbreviation and a brief description.
                Example: Bitcoin -> "abbreviation": "BTC", "description": "the most important cryptocurrency".
                {format}
                """;
        PromptTemplate promptTemplate = new PromptTemplate(template, Map.of("format", format));
        Prompt prompt = new Prompt(promptTemplate.createMessage());
        return chatClient.prompt(prompt)
                .call()
                .entity(outputConverter);
    }

- ListOutputConverter: extends AbstractConversionServiceOutputConverter and includes a FormatProvider implementation tailored for comma-delimited list outputs. This converter leverages the ConversionService to transform the output into an instance of the List (java.util.List) class.
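The two responsibilities of a converter (adding format instructions before the call and parsing the text afterwards) can be sketched in plain Java for the comma-delimited list case. This is a simplified stand-in written for illustration, with hypothetical names and wording; the real ListOutputConverter relies on a ConversionService and its exact format instructions may differ.

```java
import java.util.Arrays;
import java.util.List;

// Simplified stand-in for a structured output converter; not the Spring AI classes.
class ListConverterSketch {

    // Instructions appended to the prompt so the model answers in a parseable format.
    static String getFormat() {
        return "Respond only with a list of comma separated values, without any other text.";
    }

    // Turns the raw model text into a List by splitting on commas and trimming whitespace.
    static List<String> convert(String modelOutput) {
        return Arrays.stream(modelOutput.split(","))
                .map(String::trim)
                .filter(item -> !item.isEmpty())
                .toList();
    }

    public static void main(String[] args) {
        String prompt = "List 3 cryptocurrencies.\n" + getFormat();
        System.out.println(prompt);
        // Hard-coded text standing in for a model response.
        System.out.println(convert("Bitcoin, Ethereum , Tether"));
    }
}
```

If the model ignores the instructions and answers in free text, the parsing step produces unexpected items, which is the kind of failure mentioned in the demo section.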

Tool Calling

The integration with "tools" allows the model to execute client-side functions to access information or perform tasks when necessary. Its main use cases are:

- Information retrieval: these “tools” allow retrieving data from multiple external sources (databases, file systems, web services, etc.), providing the models with additional context they otherwise wouldn't have access to.

- Task execution: tools that perform specific actions such as sending emails, saving records to a database, submitting a form, etc., aimed at automating workflows.

It’s important to note that this pattern is not a built-in capability of the models, but rather a feature provided by the application itself. The model requests the tool with specific arguments, but the function is executed by the application.

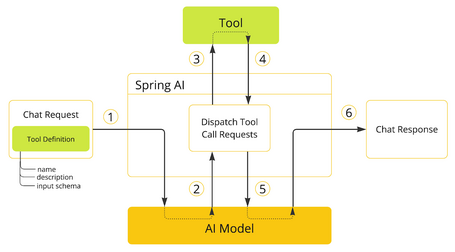

How It Works

Tool Calling works through the following steps:

- Define the "tool" you want to provide to the model, including its name, description, and input parameter schema.

- When the model decides to call a tool, it sends a response with the tool name and input parameters that follow the defined schema.

- The application executes the tool.

- The result is processed by the application.

- The application sends the result back to the model.

- The model generates the final response using the tool output as additional context.
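The steps above can be walked through with a small plain-Java simulation. Everything in it is an assumption made for illustration: the model is faked with a method that first asks for a tool and then answers, and the tool registry is a simple Map. None of these names come from Spring AI.

```java
import java.util.Map;
import java.util.function.Function;

// Plain-Java walk-through of the tool calling loop; the "model" is faked and nothing here is Spring AI API.
class ToolCallingLoopSketch {

    // What the fake model returns: either a tool request or a final answer.
    record ModelReply(String toolName, String toolArgument, String finalAnswer) {
        boolean wantsTool() {
            return toolName != null;
        }
    }

    // Fake model: asks for the tool first, then answers using the tool result it is given.
    static ModelReply fakeModel(String userQuestion, String toolResult) {
        if (toolResult == null) {
            return new ModelReply("bitcoinPrice", "EUR", null);
        }
        return new ModelReply(null, null, "The current price is " + toolResult + " EUR.");
    }

    // Application side: executes requested tools and sends results back until a final answer arrives.
    static String ask(String userQuestion, Map<String, Function<String, String>> tools) {
        ModelReply reply = fakeModel(userQuestion, null);
        while (reply.wantsTool()) {
            Function<String, String> tool = tools.get(reply.toolName());
            if (tool == null) {
                throw new IllegalStateException("Unknown tool: " + reply.toolName());
            }
            String result = tool.apply(reply.toolArgument()); // the application runs the tool
            reply = fakeModel(userQuestion, result);          // the result goes back to the model
        }
        return reply.finalAnswer();
    }

    public static void main(String[] args) {
        // Hard-coded value standing in for a real price lookup.
        Map<String, Function<String, String>> tools = Map.of("bitcoinPrice", currency -> "50000");
        System.out.println(ask("Give me the current bitcoin price", tools));
    }
}
```

The key point the sketch tries to show is that the model only names the tool and its arguments; the lookup and execution happen entirely in application code.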

Next, we’ll explore several ways to define and execute tools.

Methods as Tools

There are two ways to define tools from methods:

- Declarative: annotate the method with @Tool, optionally customizing it with attributes such as name, description, returnDirect, or resultConverter. The method can be static or instance-based and can have any visibility (public, protected, package-private, or private). The class containing the method can be top-level or nested and can also have any visibility.

Regarding parameters, you can define any number (including none) of nearly any type (specific limitations are discussed later). Additionally, you can use the @ToolParam annotation to add descriptions or indicate whether parameters are required (by default, they are). The definition of the return value works similarly; most types are allowed, including void. If a return value exists, it must be serializable.

To specify the tool to execute, use the tools() method on ChatClient, which only applies to the current request:

    @Slf4j
    public class BitcoinTool {

        @Tool(description = "Get the current price of bitcoin cryptocurrency in euros")
        Long getBitcoinPriceInEuros() {
            try {
                String apiResponse = RestClient.create().get()
                        .uri(new URI("https://cex.io/api/last_price/BTC/EUR"))
                        .retrieve()
                        .body(String.class);
                BitcoinInfo bitcoinInfo = new ObjectMapper().readValue(apiResponse, BitcoinInfo.class);
                log.info("The current bitcoin price in euros is {}", bitcoinInfo.getLprice());
                return bitcoinInfo.getLprice();
            } catch (URISyntaxException | JsonProcessingException e) {
                // Surface the failure instead of reading the price from a null BitcoinInfo
                throw new IllegalStateException("Could not retrieve the bitcoin price", e);
            }
        }
    }

    @GetMapping("/btc")
    String toolCallingBtcPrice() {
        return this.chatClient.prompt().user("Give me the current bitcoin price")
                .tools(new BitcoinTool()).call().content();
    }

You can also define default “tools” in the ChatClient.Builder using the defaultTools() method (this way, the tool applies to all requests). A similar behavior applies to the ChatModel class.

- Programmatic: you must build a MethodToolCallback, specifying the appropriate method and its details through the parameters: toolDefinition, toolMetadata, toolMethod, toolObject, and toolCallResultConverter.

Regarding parameters, containing classes, and return types, this kind of definition offers the same possibilities and limitations as the declarative option. The same methods are used to pass the tool to the model (in both ChatClient and ChatModel):

    @Slf4j
    public class PurchaseOrderTool {

        public void createBitcoinPurchaseOrder(
                @ToolParam(description = "Bitcoin amount") Integer bitcoinAmount,
                @ToolParam(description = "Current bitcoin euros price") Long currentBitcoinEurosPrice) {
            log.info("Create a purchase order for {} btc with each btc at {} euros price", bitcoinAmount, currentBitcoinEurosPrice);
        }
    }

    @GetMapping("/btc-purchase-order")
    String toolCallingBtcPriceSavePurchaseOrder() {
        Method method = ReflectionUtils.findMethod(PurchaseOrderTool.class, "createBitcoinPurchaseOrder", Integer.class, Long.class);
        ToolCallback toolCallback = MethodToolCallback.builder()
                .toolDefinition(ToolDefinition.builder(method)
                        .description("Create bitcoin purchase order of bitcoin amount at current bitcoin euros price")
                        .build())
                .toolMethod(method)
                .toolObject(new PurchaseOrderTool())
                .build();
        return this.chatClient.prompt()
                .user("Give me the current bitcoin price in euros and create a bitcoin purchase order of 10 bitcoins at this current bitcoin euros price")
                .tools(new BitcoinTool())
                .tools(toolCallback)
                .call()
                .content();
    }

Using methods as "tools" also has some limitations. The following types are not supported as parameters or return values:

- Optional

- Asynchronous types (CompletableFuture, Future)

- Reactive types (Flow, Mono, Flux)

- Functional types (Function, Supplier, Consumer). These are supported as tools, but through the function-based approach described in the following section.

Functions as Tools

Another way to create "tools" is through functions. Just like with methods, there are two ways:

- Dynamic: Functions can be defined as Spring beans and resolved dynamically at runtime thanks to the ToolCallbackResolver class. The bean's name will be used as the name of the "tool," and the @Description annotation for its description, so that the model can understand when and how to call the "tool." Additionally, the @ToolParam annotation can be used to indicate additional information about the parameters.

The downside of this approach is that type safety is not guaranteed, because the tool is resolved by name at runtime. To mitigate this, the name of the "tool" can be set explicitly in the @Bean annotation and stored in a constant that is then used in chat requests.

    @Configuration(proxyBeanMethods = false)
    class WeatherTools {

        public static final String CURRENT_WEATHER_TOOL = "currentWeather";

        WeatherService weatherService = new WeatherService();

        @Bean(CURRENT_WEATHER_TOOL)
        @Description("Get the weather in location")
        Function<WeatherRequest, WeatherResponse> currentWeather() {
            return weatherService;
        }
    }

- Programmatic: by creating a FunctionToolCallback and defining the parameters: name, toolFunction, description, inputType, inputSchema, toolMetadata, and toolCallResultConverter. Its declaration and usage are very similar to those described in the corresponding section on methods as tools.

Input and output parameters can be Void or POJOs (they must be serializable). Both the function and its input/output types must be public.
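The WeatherService, WeatherRequest and WeatherResponse types used in the configuration above are not shown in this post. A minimal hypothetical version, with invented field names and a fixed temperature instead of a real lookup, could look like the following sketch. The types are nested in one class only to keep the snippet in a single file; in a real project each would be its own public type.

```java
import java.util.function.Function;

// Hypothetical types: the post does not show WeatherService, WeatherRequest or WeatherResponse.
class WeatherToolSketch {

    public enum Unit { C, F }

    // Input and output are simple public records, which serialize naturally to JSON.
    public record WeatherRequest(String location, Unit unit) {}

    public record WeatherResponse(double temperature, Unit unit) {}

    // The tool logic itself is just a java.util.function.Function.
    public static class WeatherService implements Function<WeatherRequest, WeatherResponse> {
        @Override
        public WeatherResponse apply(WeatherRequest request) {
            // Fixed value used for illustration; a real service would query a weather API.
            return new WeatherResponse(30.0, request.unit());
        }
    }

    public static void main(String[] args) {
        WeatherResponse response = new WeatherService().apply(new WeatherRequest("Madrid", Unit.C));
        System.out.println(response);
    }
}
```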

Functions used as “tools” also have limitations. The following types are not supported as input or output parameters:

- Primitive types

- Optional

- Collection types (List, Map, Array, Set)

- Asynchronous types (CompletableFuture, Future)

- Reactive types (Flow, Mono, Flux)

Note: It is important to provide a well-written description for each “tool,” as this helps the model understand when and how to use it correctly to generate a coherent response.

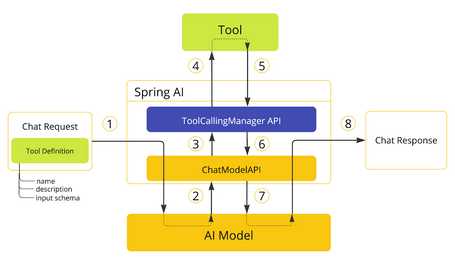

Tool Execution

The execution of “tools” is managed through a process handled by the ToolCallingManager interface. The default implementation is the DefaultToolCallingManager class, but it can be customized by creating a custom ToolCallingManager bean.

The default flow is shown in the following image:

- The definition of the “tool” is included in the chat request (Prompt), and the model receives this request.

- When the model decides to call a “tool,” it sends a response (ChatResponse) with the name of the tool and the corresponding parameters.

- The ChatModel forwards the tool call request to the ToolCallingManager.

- The ToolCallingManager identifies and executes the tool.

- The result of the execution is returned to the ToolCallingManager.

- The ToolCallingManager returns the result to the ChatModel.

- The ChatModel sends the result to the model.

- The model generates the final response using the tool's result as additional context.

Tool Discovery

The main way to use “tools” is through the mechanisms explained in previous sections (Methods and Functions as Tools). However, Spring AI also provides the option to discover tools dynamically at runtime using the ToolCallbackResolver interface along with tool names.

    public interface ToolCallbackResolver {

        @Nullable
        ToolCallback resolve(String toolName);
    }

The ToolCallbackResolver implementations provided by default include:

- SpringBeanToolCallbackResolver: resolves “tools” derived from Spring beans of type Function, Supplier, Consumer, or BiFunction.

- StaticToolCallbackResolver: resolves “tools” from a static list of ToolCallback instances.
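Name-based resolution from a static list can be pictured with the following plain-Java sketch. The interfaces here are hypothetical, trimmed-down imitations written for this example; they only mimic the shape of the resolve(String) contract shown above and are not the Spring AI types.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Simplified model of name-based tool resolution; these interfaces only mimic the shape of the Spring AI ones.
class ToolResolverSketch {

    // Hypothetical minimal callback: a name plus a call method.
    interface SimpleToolCallback {
        String name();
        String call(String input);
    }

    // Same idea as the resolve(String) contract: return the tool, or null when it is unknown.
    interface SimpleToolCallbackResolver {
        SimpleToolCallback resolve(String toolName);
    }

    // Resolver backed by a fixed list of callbacks, indexed by name at construction time.
    static class StaticResolver implements SimpleToolCallbackResolver {
        private final Map<String, SimpleToolCallback> byName = new HashMap<>();

        StaticResolver(List<SimpleToolCallback> callbacks) {
            callbacks.forEach(callback -> byName.put(callback.name(), callback));
        }

        @Override
        public SimpleToolCallback resolve(String toolName) {
            return byName.get(toolName);
        }
    }

    // Convenience factory for a callback that always returns the same text.
    static SimpleToolCallback fixedTool(String name, String output) {
        return new SimpleToolCallback() {
            public String name() { return name; }
            public String call(String input) { return output; }
        };
    }

    public static void main(String[] args) {
        StaticResolver resolver = new StaticResolver(List.of(fixedTool("currentWeather", "sunny")));
        System.out.println(resolver.resolve("currentWeather").call("Madrid"));
        System.out.println(resolver.resolve("unknownTool"));
    }
}
```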

Specification

The documentation also details the tool specification for finer control over customization of tools.

Demo

We’ve created an app with the following endpoints to demonstrate the concepts covered so far:

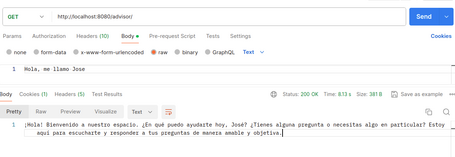

- /advisor/: an interaction endpoint used to test that the LLM has no memory. The idea is to first call it introducing our name, then ask it what our name is.

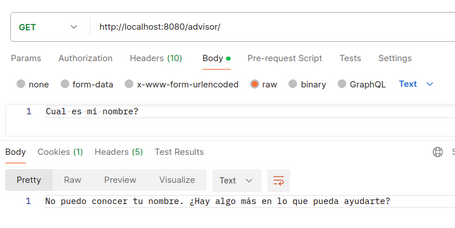

- /advisor/memory: this endpoint includes the MessageChatMemoryAdvisor to show that the LLM retains conversation memory. Just like before, we first introduce ourselves and then ask for our name.

Translated into English:

User: Hello, my name is Jose.

Model: Hi, Jose! How can I assist you today? Is there anything specific you'd like to discuss or need information about? I'm here to help.

User: What is my name?

Model: Your name is Jose, as you mentioned earlier. How are you? Is that correct?

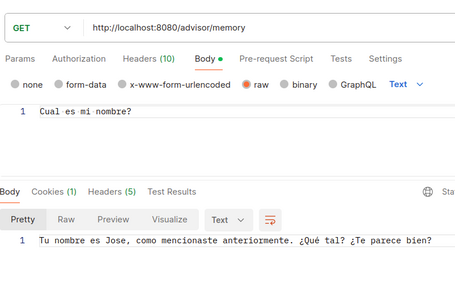

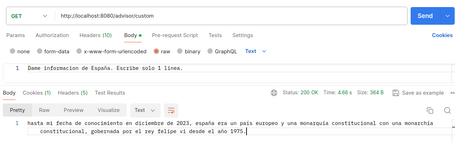

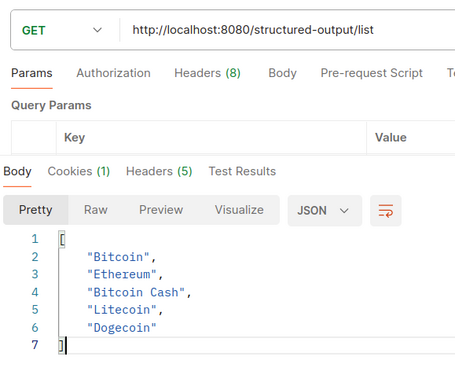

- /advisor/custom: this endpoint uses a custom advisor. Essentially, it sends the user's input to the model in uppercase and returns the model's response to the user in lowercase. You can observe the behavior in both the logs and the endpoint response.

Translated into English:

User: Give me information about Spain. Just one line.

Model: as of my knowledge cutoff in December 2023, Spain was a European country and a constitutional monarchy governed by King Felipe VI since 1975.

Translated into English (log output):

c.e.s.d.s.advisors.CustomAdvisor Input transformed GIVE ME INFORMATION ABOUT SPAIN. JUST ONE LINE.

c.e.s.d.s.advisors.CustomAdvisor Request to the model AdvisedRequest chatModel=org.springframework.a1.

c.e.s.d.s.advisors.CustomAdvisor Original response AdvisedResponse response=ChatResponse metadata={

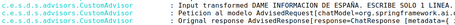

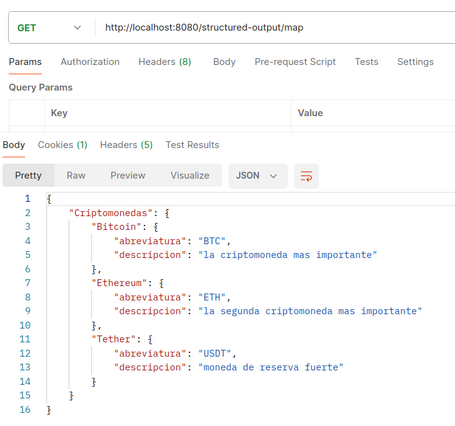

- /structured-output/map: an endpoint that formats the output as a Map containing information about 3 cryptocurrencies. As seen in previous examples, the LLM may fail to consistently structure the output as expected (e.g., JSON formatting issues), occasionally resulting in errors during the call.

Translated into English: { "Cryptocurrencies": { "Bitcoin": { "symbol": "BTC", "description": "the most important cryptocurrency" { "Ethereum": { "symbol": "ETH", "description": "the second most important cryptocurrency" "Tether": { "symbol": "USDT", "description": "strong reserve currency" }

Translated into English: "ethereum": "{"symbol":"ETH", "description":"the second most important cryptocurrency"}", "litecoin": "{"symbol":"LTC","description":"the third most important cryptocurrency"}", "bitcoin": "{"symbol":"BTC", "description":"the most important cryptocurrency"}"

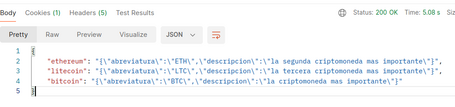

- /structured-output/list: an endpoint that formats the output as a List of 5 cryptocurrencies.

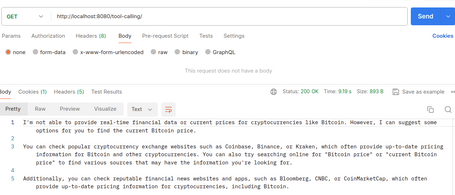

- /tool-calling/: an endpoint used to demonstrate that the model does not have access to real-time data, such as the current Bitcoin price.

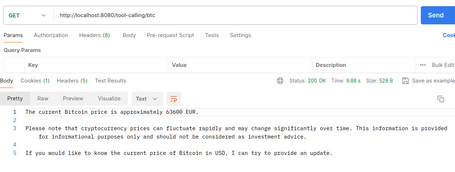

- /tool-calling/btc: an endpoint that uses a “tool” to fetch the current price of Bitcoin in euros.

- /tool-calling/btc-purchase-order: this endpoint uses two tools (one declarative, one programmatic). It fetches the current price of Bitcoin in euros and attempts to create a purchase order for 10 bitcoins at that price. However, due to limitations (in this case with llama3.2:1b), the model may fail to correctly pass the price, resulting in incorrect or random values for the order. Tool calls are visible in the logs.

- /tool-calling/function: an endpoint that handles a “function-type” tool. It behaves like previous endpoints and logs the creation of a Bitcoin purchase.

You can download the source code of this example app here.

Conclusion

In this Spring AI chapter, we explored various ways to enrich or transform model input and output to generate coherent responses, including the use of real-time information, although there are still some details to polish.

In upcoming posts, we’ll dive into RAG and the capabilities that support it.
