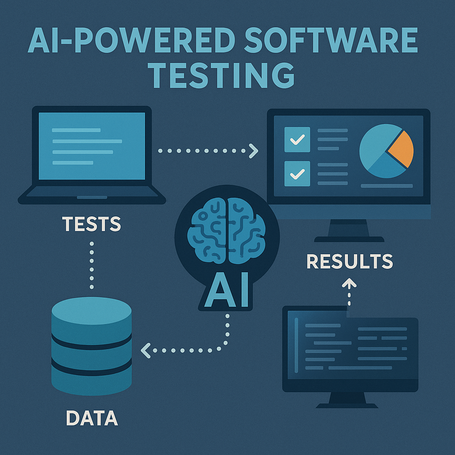

They say traditional testing can no longer keep up with the pace of agile development, and that it can feel slow or outdated. That’s where AI comes in: tools that not only automate tests but also predict bugs, improve over time, and optimize our daily workflow!

In this post, I’ll explain how AI is revolutionizing software testing and why it might become your new best ally in development. I’ll admit I used to be skeptical too.

Can AI really help us save time in the testing process?

The short answer is yes. But it always depends on how willing we are to “let it in.”

When we test, everything starts with the design of test cases, a task that requires creativity, product knowledge and analytical skills. Now imagine that artificial intelligence could generate additional test cases on its own to boost quality. What if it could also help us write clearer bug reports, detect overlooked details and suggest more effective wording?

Not only that: it could save us time by identifying potential root causes of errors, acting as a thinking partner that complements our approach.

But first I need to review all project documentation and requirements to organize the information... can I reduce that time?

Absolutely. When starting a new project, we often need to read all the documentation and understand it well enough to deliver real value and satisfy the client. This step usually takes the most time, and yes, it can be boring!

There are AI-powered tools that can read documentation, summarize it, and help us focus on what really matters. Once this information is processed, AI can suggest what to test, how to test it, and even which exact tests to apply: regression, stress, functional, smoke… and even generate full end-to-end (E2E) scenarios.

And best of all, if we get documentation in another language, we can quickly translate it.

Can it also help us catch bugs?

As mentioned earlier, AI is here to support our work, especially by improving speed in our daily tasks.

Another big win with this new teammate is its ability not only to find bugs but also to propose fixes automatically, and sometimes even to spot problems before they happen.

Is that possible? Yes. By training on historical data (bugs, error logs, test results), AI can predict where similar bugs are likely to appear in newer versions. It can even help write clearer, more detailed bug descriptions.
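To make “predicting where bugs will appear” a bit more concrete, here is a minimal Python sketch. It is not how any particular tool works: a trained model is replaced by a simple weighted score, and the `ModuleHistory` fields, the module names and the 0.7/0.3 weights are all hypothetical. Modules with more past bugs and more recent changes rank higher, so they would get testing attention first.

```python
from dataclasses import dataclass


@dataclass
class ModuleHistory:
    """Hypothetical per-module history, e.g. exported from an issue tracker and VCS."""
    name: str
    past_bugs: int       # bugs linked to this module in previous releases
    recent_changes: int  # commits touching the module since the last release


def bug_risk_scores(
    history: list[ModuleHistory],
    bug_weight: float = 0.7,     # hypothetical weight for bug history
    change_weight: float = 0.3,  # hypothetical weight for recent churn
) -> dict[str, float]:
    """Score each module between 0 and 1 from normalized bug history and churn."""
    # Normalize by the largest value so both signals share a 0-1 scale;
    # "or 1" avoids dividing by zero when every count is 0.
    max_bugs = max((m.past_bugs for m in history), default=0) or 1
    max_changes = max((m.recent_changes for m in history), default=0) or 1
    return {
        m.name: bug_weight * m.past_bugs / max_bugs
        + change_weight * m.recent_changes / max_changes
        for m in history
    }


def most_bug_prone(history: list[ModuleHistory], top_n: int = 2) -> list[str]:
    """Names of the top_n modules most likely to contain new bugs."""
    scores = bug_risk_scores(history)
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


if __name__ == "__main__":
    modules = [
        ModuleHistory("checkout", past_bugs=12, recent_changes=30),
        ModuleHistory("login", past_bugs=3, recent_changes=5),
        ModuleHistory("search", past_bugs=8, recent_changes=40),
    ]
    print(most_bug_prone(modules))  # → ['checkout', 'search']
```

A real tool would learn the weights (and many more signals) from data; the fixed weights here only keep the idea readable.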

But let’s not forget: “Instead of detecting errors at the end, quality engineering focuses on preventing defects.”

AI also improves test coverage

As a support tool, AI can help us prioritize test cases based on risk, usage frequency, or bug history, so we don’t waste resources on unnecessary tests. Of course, manual or technical validation remains the final check on all this work.

AI can analyze which parts of the software are most critical or prone to failure (based on history, frequency, etc.) and help us decide which tests to run first. For instance, instead of running all 200 tests, we might run only the 20 most relevant ones and save time.
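Risk-based prioritization like the “20 out of 200” example can be sketched in a few lines. This is a minimal illustration, assuming each test already carries a risk, usage and recent failure-rate value between 0 and 1; the `CandidateTest` class, the weights in `priority`, and the generated suite are hypothetical. An AI-based tool might estimate these values from data instead of relying on hand-picked weights.

```python
import random
from dataclasses import dataclass


@dataclass
class CandidateTest:
    """Hypothetical test metadata; every signal is on a 0-1 scale."""
    name: str
    risk: float          # business impact if the covered feature breaks
    usage: float         # how often users exercise the covered feature
    failure_rate: float  # share of recent runs in which this test failed


def priority(test: CandidateTest, w_risk: float = 0.5, w_usage: float = 0.3,
             w_fail: float = 0.2) -> float:
    """Weighted risk score; the weights are illustrative assumptions."""
    return w_risk * test.risk + w_usage * test.usage + w_fail * test.failure_rate


def select_tests(tests: list[CandidateTest], budget: int) -> list[CandidateTest]:
    """Return the `budget` highest-priority tests, most important first."""
    return sorted(tests, key=priority, reverse=True)[:budget]


if __name__ == "__main__":
    random.seed(42)  # reproducible fake data
    suite = [
        CandidateTest(f"test_{i:03d}", random.random(), random.random(), random.random())
        for i in range(200)
    ]
    shortlist = select_tests(suite, budget=20)
    print(f"Running {len(shortlist)} of {len(suite)} tests")
    for test in shortlist[:3]:
        print(test.name, round(priority(test), 2))
```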

Another great benefit of AI is its ability to suggest tests for overlooked or missed scenarios. That’s what a real partner does.
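The most basic form of spotting missed scenarios is a traceability check: list the requirements that no test covers yet. The sketch below is plain Python, not AI, and the requirement IDs, descriptions and test names are hypothetical; an AI assistant could go a step further and propose test cases for the gaps it finds.

```python
def find_coverage_gaps(
    requirements: dict[str, str],
    test_to_requirements: dict[str, set[str]],
) -> list[tuple[str, str]]:
    """Return (requirement_id, description) pairs that no test covers, sorted by ID."""
    # Union of every requirement ID referenced by at least one test.
    covered = set().union(*test_to_requirements.values())
    return [
        (req_id, description)
        for req_id, description in sorted(requirements.items())
        if req_id not in covered
    ]


if __name__ == "__main__":
    # Hypothetical requirements and test-to-requirement mapping.
    requirements = {
        "REQ-1": "User can log in with email and password",
        "REQ-2": "User can reset a forgotten password",
        "REQ-3": "User can log out",
    }
    tests = {
        "test_login_success": {"REQ-1"},
        "test_logout": {"REQ-3"},
    }
    for req_id, description in find_coverage_gaps(requirements, tests):
        print(f"No test covers {req_id}: {description}")
```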

It can also enhance visual testing (UI analysis), spotting more specific issues such as:

- Font size inconsistencies

- Misaligned images

- Incorrect brand color usage

- Text misalignment or cutoff issues

- Missing or misaligned buttons
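To show what a visual check compares at its simplest, here is a deliberately basic pixel-by-pixel diff between a baseline screenshot and a new one. Screenshots are modeled as nested lists of RGB tuples, an assumption made to keep the example dependency-free, and the 10-point color `tolerance` is arbitrary. It reports how much of the screen changed and where. Computer-vision tools aim to go further, for example by ignoring harmless rendering noise; this sketch does not attempt that.

```python
Pixel = tuple[int, int, int]   # (red, green, blue), each 0-255
Image = list[list[Pixel]]      # rows of pixels, top to bottom


def visual_diff(baseline: Image, current: Image, tolerance: int = 10):
    """Compare two same-sized screenshots pixel by pixel.

    Returns (fraction_of_changed_pixels, bounding_box), where bounding_box is
    (left, top, right, bottom) around all changed pixels, or None if nothing changed.
    A pixel counts as changed when any color channel differs by more than `tolerance`.
    """
    if len(baseline) != len(current) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(baseline, current)
    ):
        raise ValueError("screenshots must have the same dimensions")

    changed = [
        (x, y)
        for y, (row_a, row_b) in enumerate(zip(baseline, current))
        for x, (pixel_a, pixel_b) in enumerate(zip(row_a, row_b))
        if max(abs(a - b) for a, b in zip(pixel_a, pixel_b)) > tolerance
    ]
    if not changed:
        return 0.0, None

    total = sum(len(row) for row in baseline)
    xs = [x for x, _ in changed]
    ys = [y for _, y in changed]
    return len(changed) / total, (min(xs), min(ys), max(xs), max(ys))


if __name__ == "__main__":
    white, red = (255, 255, 255), (255, 0, 0)
    baseline = [[white] * 4 for _ in range(4)]
    current = [row[:] for row in baseline]
    current[2][1] = current[2][2] = red  # e.g. a button that changed color
    print(visual_diff(baseline, current))  # → (0.125, (1, 2, 2, 2))
```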

And when it comes to test automation, it saves us maintenance time. AI-based tools can automatically adjust scripts when detecting minor changes (like a button name or element position).
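The “self-healing” idea can be sketched like this: first try the element ID the test was recorded with, and if it is gone, fall back to the page element whose other attributes best match what was recorded. This is a minimal illustration, assuming page elements are plain dictionaries of attributes; `locate`, `attribute_similarity` and the 0.5 threshold are hypothetical and not the API of any specific tool.

```python
def attribute_similarity(recorded: dict[str, str], candidate: dict[str, str]) -> float:
    """Fraction of the recorded attributes that the candidate element still has."""
    if not recorded:
        return 0.0
    matches = sum(1 for key, value in recorded.items() if candidate.get(key) == value)
    return matches / len(recorded)


def locate(page: list[dict[str, str]], recorded: dict[str, str], threshold: float = 0.5):
    """Find an element on the page, healing the locator if its ID changed.

    Returns (element, healed): healed is True when a fallback match was used,
    so a tester can review the change. Returns (None, False) if nothing is close enough.
    """
    # 1. Exact match on the ID the test was originally recorded with.
    target_id = recorded.get("id")
    if target_id is not None:
        for element in page:
            if element.get("id") == target_id:
                return element, False

    # 2. Fallback: the element whose attributes best match the recorded ones.
    best = max(page, key=lambda el: attribute_similarity(recorded, el), default=None)
    if best is not None and attribute_similarity(recorded, best) >= threshold:
        return best, True
    return None, False


if __name__ == "__main__":
    # Attributes captured when the test last passed (hypothetical).
    recorded = {"id": "submit-btn", "tag": "button", "text": "Submit", "class": "primary"}
    # After a UI change, the button was renamed from "Submit" to "Send".
    page = [
        {"id": "cancel-btn", "tag": "button", "text": "Cancel", "class": "secondary"},
        {"id": "send-btn", "tag": "button", "text": "Send", "class": "primary"},
    ]
    element, healed = locate(page, recorded)
    print(element["id"], healed)  # → send-btn True
```

Returning the `healed` flag instead of silently swapping locators keeps a human in the loop, which matches the idea that manual validation still has the final say.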

More reasons to team up with AI

Today, many companies are using AI in testing. Tech giants like Microsoft, Google, and Amazon have integrated AI into their testing pipelines, reportedly reducing critical bugs in production by 40% and improving end-user satisfaction. If you want to dive deeper, check out this article covering success stories and challenges, which also highlights several interesting tools in this space.

Some of the most popular AI-powered testing tools in 2025:

| Tool | Key Features |
|---|---|
| Test.ai | Automatic test case creation based on user experience |
| Applitools | Visual testing powered by Computer Vision |
| Mabl | Continuous regression testing using Machine Learning |
| Functionize | Test automation based on NLP |

If you still have questions about AI and its support for testing, I recommend reading this post from our colleague, which explains it very clearly, with details and examples: “Why Generative AI Won’t Take Your QA Job”.

In conclusion, AI doesn’t replace us; it complements us, helping us do more with less. See you in the comments! 👇
