As we've already discussed, LLMs are pre-trained on public data up to a certain cutoff date (they are assumed not to have access to a company's internal data or to our devices, for example). Tokens also matter, both for billing and for the context window: we can't send or receive as much as we like, although some LLMs, such as Gemini, offer context windows of millions of tokens.

The RAG pattern addresses these limitations so that we get better results, with fewer hallucinations and a lower cost per use.

Before going into detail, you can take a look at the rest of the content in the Spring AI series in the following links in case you have missed any of the previous articles:

- Deep Learning about Spring AI: Getting Started

- Deep Learning about Spring AI: multimodularity, prompts and observability

- Deep Learning about Spring AI: Advisors, Structured Output and Tool Calling

Some of the foundations of RAG already appeared in the post on Advisors and Tool Calling, where we talked about augmenting the context. In this post we focus on the essence of RAG itself and on the technologies and abstractions that support it.

RAG

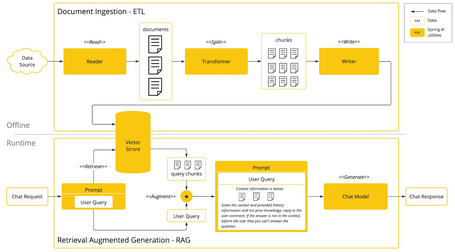

Retrieval Augmented Generation (RAG) is a widely used technique for addressing the limitations of language models when it comes to long-form content, factual accuracy and context awareness. The approach is based on a batch process in which unstructured data is first read from documents (or other data sources), transformed, and then written to a vector database (roughly speaking, an ETL process).

One of the transformations in this flow is the division of the original document (or data source) into smaller pieces, with two key steps:

- Division into smaller parts while preserving the semantics. For example, in a document with paragraphs and tables we try to avoid splitting in the middle of one of them. In the case of code, avoid splitting in the middle of a method.

- Division into parts whose size is smaller than the model's token limit.
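The two splitting rules above can be sketched in plain Java. This is only an illustration, not Spring AI's splitter: the class name ParagraphChunker is hypothetical, paragraphs are assumed to be separated by blank lines, and whitespace-separated words stand in for real tokens.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch: split text at paragraph boundaries while staying under a size budget. */
final class ParagraphChunker {

    // Rough stand-in for a tokenizer (assumption): counts whitespace-separated words.
    static int estimateTokens(String text) {
        String trimmed = text.trim();
        return trimmed.isEmpty() ? 0 : trimmed.split("\\s+").length;
    }

    static List<String> chunk(String document, int maxTokensPerChunk) {
        List<String> chunks = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        int currentTokens = 0;

        // Paragraphs are separated by one or more blank lines, so a split
        // never lands in the middle of a paragraph.
        for (String paragraph : document.split("\\n\\s*\\n")) {
            String p = paragraph.trim();
            if (p.isEmpty()) {
                continue;
            }
            int tokens = estimateTokens(p);
            // Close the current chunk if this paragraph would exceed the budget.
            if (currentTokens > 0 && currentTokens + tokens > maxTokensPerChunk) {
                chunks.add(current.toString());
                current.setLength(0);
                currentTokens = 0;
            }
            if (currentTokens > 0) {
                current.append("\n\n");
            }
            current.append(p);
            currentTokens += tokens;
        }
        if (currentTokens > 0) {
            chunks.add(current.toString());
        }
        return chunks;
    }
}
```

Note that in this sketch a single paragraph larger than the budget is kept as one oversized chunk; a real splitter would need a fallback (for example, splitting by sentence) for that case.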

The next phase in RAG is processing the user's input: a similarity search finds related documents, which are used to enrich the context sent to the model.

Spring AI supports RAG by providing a modular architecture that allows creating custom RAG flows or using ready-made ones through the Advisor API:

- QuestionAnswerAdvisor: queries the vector database for documents related to the user's input and includes the results in the context sent to the model.

String rag() {

return ChatClient.builder(chatModel)

.build().prompt()

.advisors(new QuestionAnswerAdvisor(vectorStore))

.user("que me puedes decir de los aranceles de EEUU en el año 2025")

.call()

.content();

}

In the example, QuestionAnswerAdvisor performs a similarity search over the documents in the database. The search can be narrowed with the SearchRequest class, including at runtime.

- RetrievalAugmentationAdvisor (experimental): an advisor that provides the most common RAG workflows (Naive RAG and Advanced RAG).

Modules

Spring AI implements a modular RAG architecture based on the paper Modular RAG: Transforming RAG Systems into LEGO-like Reconfigurable Frameworks. This is still in an experimental phase, so it is subject to change. The available modules are:

- Pre-Retrieval: modules responsible for processing the user's query to improve results. Classes like CompressionQueryTransformer, RewriteQueryTransformer, TranslationQueryTransformer, and MultiQueryExpander allow for transforming user queries to compress context, transform ambiguous or irrelevant content, translate, or provide different semantic perspectives.

Query query = new Query("Hvad er Danmarks hovedstad?");

QueryTransformer queryTransformer = TranslationQueryTransformer.builder()

.chatClientBuilder(chatClientBuilder)

.targetLanguage("english")

.build();

Query transformedQuery = queryTransformer.transform(query);

- Retrieval: modules responsible for fetching the most relevant documents from the database. The classes that can be used are VectorStoreDocumentRetriever and ConcatenationDocumentJoiner.

Map<Query, List<List<Document>>> documentsForQuery = ...

DocumentJoiner documentJoiner = new ConcatenationDocumentJoiner();

List<Document> documents = documentJoiner.join(documentsForQuery);

- Post-Retrieval: modules for processing the retrieved documents to improve result generation. Classes like DocumentRanker, DocumentSelector, and DocumentCompressor are used to rank, select, remove, or compress unnecessary documents.

- Generation: modules for generating the final response to the user. The ContextualQueryAugmenter class is used to add useful additional data for the model.
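To make the joining step of the Retrieval module more concrete, here is a minimal plain-Java sketch of what a concatenating joiner might do. It is not the ConcatenationDocumentJoiner source: the class name is hypothetical, documents are reduced to their IDs, and dropping duplicates while keeping the first occurrence is an assumption about the desired behavior.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Sketch: flatten the documents found per query and per source into one list. */
final class ConcatenatingJoinerSketch {

    // Key: query text. Value: one list of document IDs per data source.
    static List<String> join(Map<String, List<List<String>>> documentIdsForQuery) {
        // LinkedHashSet keeps insertion order and silently skips repeated IDs,
        // so the first occurrence of each document wins (assumed behavior).
        Set<String> seen = new LinkedHashSet<>();
        for (List<List<String>> perSource : documentIdsForQuery.values()) {
            for (List<String> documentIds : perSource) {
                seen.addAll(documentIds);
            }
        }
        return new ArrayList<>(seen);
    }
}
```

The resulting order follows the iteration order of the map, so a LinkedHashMap is the safer choice when the order of the queries matters.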

Embeddings

Embeddings are numerical representations of text, images, or videos that capture the relationships between inputs. They work by transforming these assets into arrays of numbers called vectors, which are designed to capture their meaning. Similarity is then determined by calculating the numerical distance between two vectors, for example those of two pieces of text: the closer the vectors, the more similar the content.
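As an illustration of comparing two vectors, the following sketch computes cosine similarity, one common measure for embeddings. The class and method names are hypothetical and the vectors are tiny hand-written examples, not real model output.

```java
/** Sketch: cosine similarity between two embedding vectors. */
final class CosineSimilarity {

    static double cosine(float[] a, float[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Vectors must have the same dimensions");
        }
        double dot = 0.0;
        double normA = 0.0;
        double normB = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0.0 || normB == 0.0) {
            throw new IllegalArgumentException("Cosine similarity is undefined for a zero vector");
        }
        // 1 means same direction, 0 means orthogonal, -1 means opposite direction.
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }
}
```

For example, `CosineSimilarity.cosine(new float[] {1f, 0f}, new float[] {0f, 1f})` yields 0 because the two vectors are orthogonal, while two vectors pointing in the same direction yield 1 regardless of their length.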

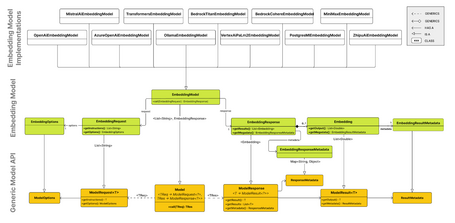

The EmbeddingModel interface is designed for integrating with embedding models. Its primary function is to convert assets into numerical vectors that are used for semantic analysis and text classification, among other things. The interface focuses mainly on:

- Portability: The interface ensures adaptability across different models with minimal code changes.

- Simplicity: It provides methods that simplify the process of transforming assets into embeddings, like embed(String text), without needing to know the internal transformations.

API Overview

The EmbeddingModel interface extends the Model interface, just as EmbeddingRequest and EmbeddingResponse extend from their corresponding ModelRequest and ModelResponse. The following image shows the relationships between the Embedding API, the Model API, and the Embedding Models:

- EmbeddingModel: Here you can see the different options provided for transforming text into embeddings. It also includes a dimensions method, which is important for knowing the size or dimensionality of the vector.

public interface EmbeddingModel extends Model<EmbeddingRequest, EmbeddingResponse> {

@Override

EmbeddingResponse call(EmbeddingRequest request);

float[] embed(Document document);

default float[] embed(String text) {...}

default List<float[]> embed(List<String> texts) {...}

default EmbeddingResponse embedForResponse(List<String> texts) {...}

default int dimensions() {...}

}

- EmbeddingRequest: This is a ModelRequest that contains a list of texts, as well as embedding options.

public class EmbeddingRequest implements ModelRequest<List<String>> {

private final List<String> inputs;

private final EmbeddingOptions options;

...

}

- EmbeddingResponse: Contains the model output with Embeddings, which includes the response vector and its corresponding metadata.

public class EmbeddingResponse implements ModelResponse<Embedding> {

private List<Embedding> embeddings;

private EmbeddingResponseMetadata metadata = new EmbeddingResponseMetadata();

...

}

- Embedding: Represents an embedding vector.

public class Embedding implements ModelResult<float[]> {

private float[] embedding;

private Integer index;

private EmbeddingResultMetadata metadata;

...

}

Available Implementations

On this page, you can find the available implementations. Following the premises of previous posts, we will focus on the Ollama implementation.

Properties

For autoconfiguration, a set of properties is provided that closely mirrors the chat model properties. Autoconfiguration is enabled with the property:

spring.ai.model.embedding

Implementation

Below are some examples of how to use the OllamaEmbeddingModel and EmbeddingModel:

- Autoconfiguration: An EmbeddingModel bean already exists in the context and can be used directly:

@Autowired

private EmbeddingModel autoEmbeddingModel;

...

@GetMapping("/auto")

public EmbeddingResponse embed() {

return autoEmbeddingModel.embedForResponse(List.of("Tendencias tecnologicas 2025", "Tendencia tecnologica IA", "Tendencia tecnologica accesibilidad"));

}

- Manual Configuration: If you're not using Spring Boot or have other requirements, you can manually configure the OllamaEmbeddingModel. To do this, you may need to add the following dependency:

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-ollama</artifactId>

</dependency>

Then, create the corresponding instance:

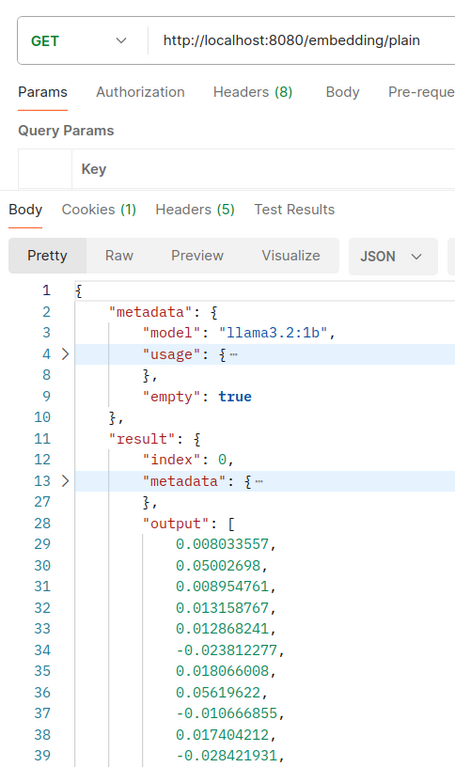

private OllamaApi ollamaApi;

private OllamaEmbeddingModel embeddingModel;

public EmbeddingController() {

ollamaApi = new OllamaApi();

embeddingModel =

OllamaEmbeddingModel.builder().ollamaApi(ollamaApi).defaultOptions(OllamaOptions.builder().model(OllamaModel.LLAMA3_2_1B).build()).build();

}

@GetMapping("/plain")

public EmbeddingResponse embedd() {

return embeddingModel.call(new EmbeddingRequest(List.of("Tendencias tecnologicas 2025", "Tendencia tecnologica IA", "Tendencia tecnologica accesibilidad"),

OllamaOptions.builder()

.model(OllamaModel.LLAMA3_2_1B)

.truncate(false)

.build()));

}

Vector Databases

These are a type of specialized database that, instead of performing exact matches, relies on similarity searches; in other words, when a vector is provided as input, the result is “similar” vectors.

First, the data is loaded into the vector database. Later, when a request is sent to the model, similar vectors are retrieved first; the matching documents are then added as context to the user's question and sent to the model along with it.
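The last step, adding the retrieved documents to the user's question, can be sketched as simple string assembly. This is only an illustration: the class name and the prompt wording are assumptions, not the template that Spring AI's advisors actually use.

```java
import java.util.List;

/** Sketch: build an augmented prompt from the question and the retrieved documents. */
final class PromptAugmentationSketch {

    static String augment(String question, List<String> retrievedDocuments) {
        StringBuilder prompt = new StringBuilder();
        prompt.append("Answer the question using only the context below.\n");
        prompt.append("If the context is not sufficient, say that you do not know.\n\n");
        prompt.append("Context:\n");
        // Number each document so the answer can refer back to its source.
        for (int i = 0; i < retrievedDocuments.size(); i++) {
            prompt.append("[").append(i + 1).append("] ")
                  .append(retrievedDocuments.get(i)).append("\n");
        }
        prompt.append("\nQuestion: ").append(question);
        return prompt.toString();
    }
}
```

The design choice worth noting is that the model only ever sees text: the vectors are used to pick the documents, but what is sent in the prompt is their original content.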

API Overview

To work with vector databases, the VectorStore interface is used:

public interface VectorStore extends DocumentWriter {

default String getName() {...}

void add(List<Document> documents);

void delete(List<String> idList);

void delete(Filter.Expression filterExpression);

default void delete(String filterExpression) {...};

List<Document> similaritySearch(String query);

List<Document> similaritySearch(SearchRequest request);

default <T> Optional<T> getNativeClient() {...}

}

It is used together with the SearchRequest class:

public class SearchRequest {

public static final double SIMILARITY_THRESHOLD_ACCEPT_ALL = 0.0;

public static final int DEFAULT_TOP_K = 4;

private String query = "";

private int topK = DEFAULT_TOP_K;

private double similarityThreshold = SIMILARITY_THRESHOLD_ACCEPT_ALL;

@Nullable

private Filter.Expression filterExpression;

public static Builder from(SearchRequest originalSearchRequest) {...}

public static class Builder {...}

public String getQuery() {...}

public int getTopK() {...}

public double getSimilarityThreshold() {...}

public Filter.Expression getFilterExpression() {...}

}

To insert data into the vector database, it must be wrapped in a Document object. On insertion, the text is transformed into an array of numbers known as an embedding vector. This transformation is done by the embedding model: the vector database's job is to perform similarity searches, not to generate the embeddings itself.

The similaritySearch methods allow you to obtain documents similar to a request, which can be adjusted with the following parameters:

- k: an integer that indicates the maximum number of similar documents to return. This is commonly known as a “top K” or “K nearest neighbors” (KNN) search.

- threshold: a number between 0 and 1, where values closer to 1 indicate greater similarity.

- Filter.Expression: a class used to specify a DSL (Domain-Specific Language) expression that functions similarly to the “where” clause in SQL, but it only applies to the metadata of a Document. You can find some examples on this page.

FilterExpressionBuilder builder = new FilterExpressionBuilder();

Expression expression = builder.eq("tendencia", "cloud").build();

- filterExpression: A DSL expression that allows for filter expressions as Strings. For example, with metadata such as country, year, and isActive, you could create an expression like “country == 'UK' && year >= 2020 && isActive == true”.
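To show how these parameters interact, here is a brute-force in-memory sketch of a similarity search with a top K limit, a threshold and a metadata filter. It is not how PgVectorStore or any other store is implemented: all names are hypothetical, the metadata filter is a plain Java Predicate instead of the Filter.Expression DSL, and the score is cosine similarity rescaled to the range 0 to 1 (an assumption made so that a threshold of 0.0 accepts everything).

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

/** Sketch: brute-force similarity search with topK, threshold and metadata filter. */
final class InMemorySimilaritySearch {

    static final class Item {
        final String text;
        final float[] vector;
        final Map<String, Object> metadata;

        Item(String text, float[] vector, Map<String, Object> metadata) {
            this.text = text;
            this.vector = vector;
            this.metadata = metadata;
        }
    }

    private static final class Scored {
        final String text;
        final double score;

        Scored(String text, double score) {
            this.text = text;
            this.score = score;
        }
    }

    // Cosine similarity rescaled from [-1, 1] to [0, 1] (assumption, see lead-in).
    static double score(float[] a, float[] b) {
        double dot = 0.0, normA = 0.0, normB = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        double cosine = dot / (Math.sqrt(normA) * Math.sqrt(normB));
        return (cosine + 1.0) / 2.0;
    }

    static List<String> search(List<Item> items, float[] queryVector, int topK,
                               double similarityThreshold,
                               Predicate<Map<String, Object>> metadataFilter) {
        List<Scored> candidates = new ArrayList<>();
        for (Item item : items) {
            // The filter only looks at metadata, like a "where" clause.
            if (!metadataFilter.test(item.metadata)) {
                continue;
            }
            double s = score(queryVector, item.vector);
            if (s >= similarityThreshold) {
                candidates.add(new Scored(item.text, s));
            }
        }
        // Most similar first, then keep at most topK results.
        candidates.sort(Comparator.comparingDouble((Scored c) -> c.score).reversed());
        List<String> result = new ArrayList<>();
        for (int i = 0; i < Math.min(topK, candidates.size()); i++) {
            result.add(candidates.get(i).text);
        }
        return result;
    }
}
```

A real vector database avoids scanning every row by using an index such as HNSW, but the meaning of the three parameters is the same.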

Schema Initialization

Some databases require the schema to be initialized before use. With Spring Boot, you can set the …initialize-schema property to true, although it is advisable to check this information against each specific implementation.

Batching Strategy

It's common when working with this type of database to have to embed many documents.

Although the first idea that comes to mind is to embed all these documents in a single call, this can cause problems, mainly because of the models' token limits (window size): exceeding them leads to errors or truncated embeddings.

This is precisely why a batching strategy is used: large sets of documents are divided into smaller batches that fit within the window size. This not only solves the token-limit problem but can also improve performance and help stay within the request limits of the various APIs.

Spring offers this functionality through the BatchingStrategy interface:

public interface BatchingStrategy {

List<List<Document>> batch(List<Document> documents);

}

The default implementation is TokenCountBatchingStrategy, which bases the split on the number of tokens in each batch, ensuring the token input limit is not exceeded. The key points of this implementation are:

- It uses OpenAI's max input token limit (8191) by default.

- It includes a reserve percentage (10% by default) for an overhead buffer.

- The maximum token limit is calculated as: actualMaxInputTokenCount = originalMaxInputTokenCount * (1 - RESERVE_PERCENTAGE).

The strategy estimates the number of tokens per document, groups them into batches without exceeding the token limit, and throws an exception if any single document exceeds it. You can also customize the strategy by creating a new instance through a @Configuration class:

@Configuration

public class EmbeddingConfig {

@Bean

public BatchingStrategy customTokenCountBatchingStrategy() {

return new TokenCountBatchingStrategy(

EncodingType.CL100K_BASE, // Specify the encoding type

8000, // Set the maximum input token count

0.1 // Set the reserve percentage

);

}

}

Once this bean is defined, it will be used automatically by the EmbeddingModel implementations instead of the default strategy. Additionally, you can supply your own TokenCountEstimator (the component that counts a document's tokens), as well as parameters for formatting content and metadata.

And, as with other components, you can also create a completely custom implementation:

@Configuration

public class EmbeddingConfig {

@Bean

public BatchingStrategy customBatchingStrategy() {

return new CustomBatchingStrategy();

}

}
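To give an idea of the logic behind a token-based strategy, here is a plain-Java sketch of greedy batching under a token budget with a reserve percentage. It is not the TokenCountBatchingStrategy source: the class name is hypothetical, documents are plain strings, whitespace-separated words stand in for tokens, and rounding the effective limit to the nearest integer is an assumption.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch: group documents into batches that stay under a token budget. */
final class TokenBudgetBatcher {

    // Hypothetical estimator: whitespace-separated words stand in for tokens.
    static int estimateTokens(String text) {
        String trimmed = text.trim();
        return trimmed.isEmpty() ? 0 : trimmed.split("\\s+").length;
    }

    // actualMaxInputTokenCount = originalMaxInputTokenCount * (1 - RESERVE_PERCENTAGE)
    static int effectiveLimit(int maxInputTokenCount, double reservePercentage) {
        return (int) Math.round(maxInputTokenCount * (1 - reservePercentage));
    }

    static List<List<String>> batch(List<String> documents, int maxInputTokenCount,
                                    double reservePercentage) {
        int limit = effectiveLimit(maxInputTokenCount, reservePercentage);
        List<List<String>> batches = new ArrayList<>();
        List<String> current = new ArrayList<>();
        int currentTokens = 0;

        for (String document : documents) {
            int tokens = estimateTokens(document);
            // A single document that cannot fit in any batch is an error.
            if (tokens > limit) {
                throw new IllegalArgumentException(
                        "Document exceeds the token limit: " + tokens + " > " + limit);
            }
            // Start a new batch when the current one would overflow.
            if (currentTokens + tokens > limit) {
                batches.add(current);
                current = new ArrayList<>();
                currentTokens = 0;
            }
            current.add(document);
            currentTokens += tokens;
        }
        if (!current.isEmpty()) {
            batches.add(current);
        }
        return batches;
    }
}
```

With the defaults mentioned above (8191 tokens and a 10% reserve), this sketch works with an effective limit of 7372 tokens per batch.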

Available Implementations

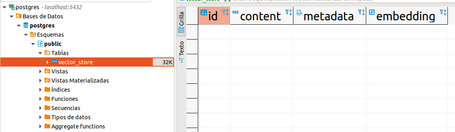

You can find the available implementations on this page. In this case, we will focus on the PGvector implementation for PostgreSQL databases, which is simply an open-source extension for PostgreSQL that allows for saving and searching embeddings.

Prerequisites

First, you need access to PostgreSQL with the vector, hstore and uuid-ossp extensions. On startup, PgVectorStore will attempt to install the necessary extensions on the database and create the vector_store table with an index. You can also do this manually with:

CREATE EXTENSION IF NOT EXISTS vector;

CREATE EXTENSION IF NOT EXISTS hstore;

CREATE EXTENSION IF NOT EXISTS "uuid-ossp";

CREATE TABLE IF NOT EXISTS vector_store (

id uuid DEFAULT uuid_generate_v4() PRIMARY KEY,

content text,

metadata json,

embedding vector(1536) -- 1536 is the default embedding dimension

);

CREATE INDEX ON vector_store USING HNSW (embedding vector_cosine_ops);

Configuration

Let's start by including the corresponding dependency:

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-starter-vector-store-pgvector</artifactId>

</dependency>

The vector store implementation can initialize the schema automatically, but you must specify the initializeSchema in the corresponding constructor or through the …initialize-schema=true property.

Of course, an EmbeddingModel will also be necessary, and you must include its corresponding dependency in the project. And, as is often the case, you will also have to specify the connection values via properties:

spring:
  datasource:
    url: jdbc:postgresql://localhost:5432/postgres
    username: postgres
    password: postgres
  ai:
    vectorstore:
      pgvector:
        index-type: HNSW
        distance-type: COSINE_DISTANCE
        dimensions: 1024
        max-document-batch-size: 10000
        initialize-schema: true

Other properties are available for further customization of the Vector Store. And, as usual with Spring, you can also configure it manually.

Local Execution

You can run a PGVector instance using the following Docker command:

docker run -it --rm --name postgres -p 5432:5432 -e POSTGRES_USER=postgres -e POSTGRES_PASSWORD=postgres pgvector/pgvector:pg16

You can then connect to the instance with the command:

psql -U postgres -h localhost -p 5432

Usage Examples

As expected, you must select an embedding model in conjunction with the general model being used. An example of loading Documents into the Vector Store (this loading operation would actually be executed as a batch process) would be:

Document document1 = new Document("Tendencia tecnologica 2025: La voz y los vídeos en la IA", Map.of("tendencia", "ia"));

...

List<Document> documents = Arrays.asList(document1, document2, document3, document4, document5, document6, document7, document8, document9, document10);

vectorStore.add(documents);

Later, when a user sends a question, a similarity search will be performed to obtain similar documents that will be passed as context for the prompt.

Deleting Documents

Multiple methods are provided for deleting documents:

- Deletion by IDs: As the name suggests, documents that match the specified IDs are deleted, ignoring any that are not found.

- Deletion by Filter.Expression: Using a Filter.Expression object, you specify the criteria for the documents to be deleted, which is especially useful for deleting documents based on their metadata:

Filter.Expression filterExpression = new Filter.Expression(Filter.ExpressionType.EQ, new Filter.Key("tendencia"), new Filter.Value("dragdrop"));

vectorStore.delete(filterExpression);

FilterExpressionBuilder builder = new FilterExpressionBuilder();

Expression expression = builder.eq("tendencia", "cloud").build();

try {

vectorStore.delete(filterExpression);

vectorStore.delete(expression);

} catch (Exception e) {

log.error("Invalid filter expression", e);

}

Note: According to the documentation, it is recommended to wrap these delete calls in try-catch blocks.

- Deletion by String Filter Expression: In this case, the String would be transformed into a Filter.Expression.

You should also keep the following performance considerations in mind:

- Deleting by IDs is faster when you know exactly which Documents to delete.

- Filter-based deletion may require scanning the index to find the Documents to be deleted.

- Deleting many Documents should be executed in batch operations.

- Consider deleting Documents based on filters instead of first retrieving the corresponding IDs.
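The difference between the two deletion styles can be illustrated with a small in-memory sketch. It is not a VectorStore implementation: the class is hypothetical, only the metadata of each document is stored, and the filter is a plain Java Predicate rather than a Filter.Expression.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

/** Sketch: deletion by ID (direct lookup) versus deletion by filter (full scan). */
final class InMemoryDeleteSketch {

    private final Map<String, Map<String, Object>> metadataById = new LinkedHashMap<>();

    void add(String id, Map<String, Object> metadata) {
        metadataById.put(id, metadata);
    }

    int size() {
        return metadataById.size();
    }

    // Direct lookup per ID; IDs that are not found are simply ignored.
    int deleteByIds(List<String> ids) {
        int removed = 0;
        for (String id : ids) {
            if (metadataById.remove(id) != null) {
                removed++;
            }
        }
        return removed;
    }

    // Every entry has to be checked against the filter.
    int deleteByFilter(Predicate<Map<String, Object>> filter) {
        int before = metadataById.size();
        metadataById.values().removeIf(filter);
        return before - metadataById.size();
    }
}
```

Even though the filter version scans all entries here, it is a single operation, which is why it is usually preferable to a search followed by a separate deletion by ID.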

Demo

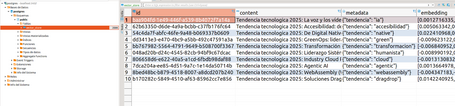

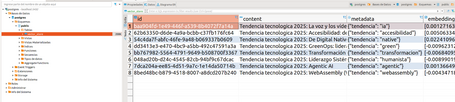

To see the components we've discussed in this post in action, we have built a sample application. Once the application is started with the …initialize-schema: true property, you can use a database client to see that the vector_store table has been created:

To test the functionality, we have the following endpoints:

- /embedding/plain: an endpoint that returns the embeddings generated from some texts. The embeddingModel has been created manually.

- /embedding/auto: an endpoint similar to the previous one that returns the embeddings generated from some texts. In this case, the embeddingModel has been injected via Spring.

- /vector-store/load: an endpoint for loading Documents into the Vector Store. The loaded data can be seen with a database client:

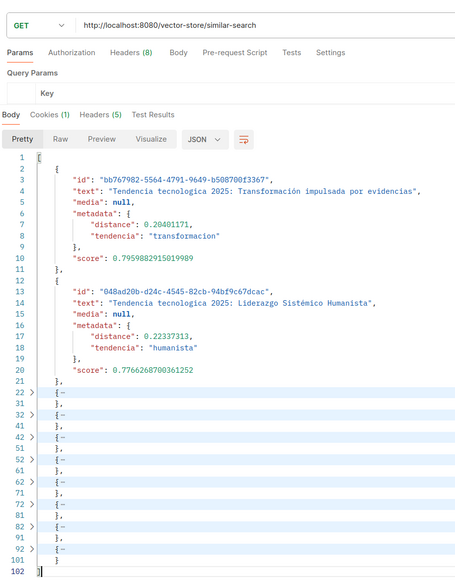

- /vector-store/similar-search: an endpoint to perform a similarity search on the Documents loaded in the previous endpoint. To ensure that only these documents are returned, it is important to have only the data from the previous endpoint in the database, since the model is not very reliable and other documents could be mixed into the response:

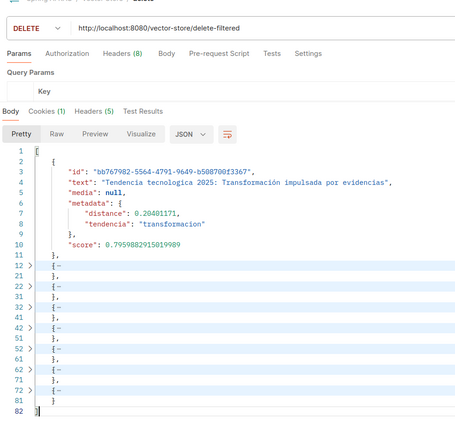

- /vector-store/delete-filtered: an endpoint that deletes two Documents and returns the remaining ones using a similarity search. You can also verify with a database client that the corresponding documents have been deleted. Likewise, you should try to only have the relevant documents in the database when making this request:

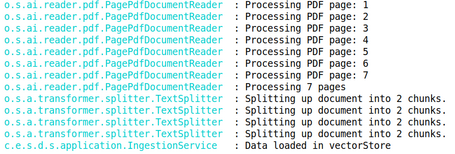

- /rag/load-data: This endpoint is used to load PDF data into the vector store. In this specific case, the PDF concerns US tariffs for the year 2025, taking into account that the model has a cutoff date of March 2023.

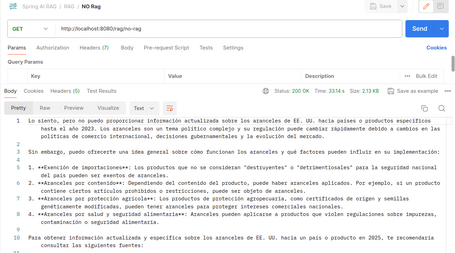

- /rag/no-rag: an endpoint for querying the 2025 US tariffs data without using the embeddings. You can see that the model has no concrete information about what we are asking:

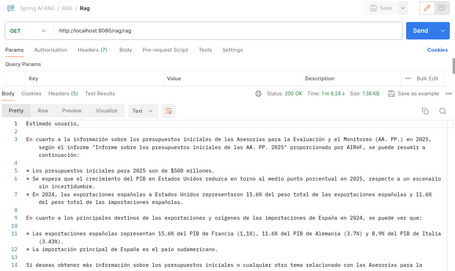

- /rag/rag: endpoint for performing the previous query by applying the QuestionAnswerAdvisor with the embeddings included in the context, returning information with allusions to the original document:

You can download the example application code from this link.

Conclusion

On this occasion, we have seen what the RAG pattern consists of and how it is implemented in Spring AI, in addition to the functionalities that support it (embeddings and vector databases), as well as the examples of the corresponding implementations (Ollama and PGVector).

In the next chapter, we will address the offline part of the RAG pattern (the ETL phase) and look at the rise of MCP and how Spring AI supports it. See you in the comments!
