In the previous post, Deep Learning about Spring AI: RAG, Embeddings, and Vector Databases, we explored the RAG pattern and saw that it consists of two phases: one for data ingestion and transformation, and another for execution.

This time, we’ll look at the options Spring AI provides for the first phase of the RAG pattern: ETL (Extract, Transform, Load).

We’ll also dive into one of the most relevant concepts in AI today: MCP (Model Context Protocol).

If you’ve missed any of the previous posts in the series, you can check them out below:

- Deep Learning about Spring AI: Getting Started

- Deep Learning about Spring AI: multimodularity, prompts and observability

- Deep Learning about Spring AI: Advisors, Structured Output and Tool Calling

- Deep Learning about Spring AI: RAG, Embeddings and Vector Databases

ETL

Within the RAG pattern, the ETL framework organizes the data processing flow, from obtaining raw data to storing structured data in a vector database.

API Overview

ETL pipelines create, transform, and store Documents using three main components:

- DocumentReader, which implements Supplier<List<Document>>.

- DocumentTransformer, which implements Function<List<Document>, List<Document>>.

- DocumentWriter, which implements Consumer<List<Document>>.

To build an ETL pipeline, you chain together one instance of each of the above. For example, given the following instances:

- PagePdfDocumentReader as a DocumentReader implementation.

- TokenTextSplitter as a DocumentTransformer implementation.

- VectorStore as a DocumentWriter implementation.

You could then run the data ingestion phase of the RAG pattern with a single line:

```java
vectorStore.accept(textSplitter.apply(pdfReader.get()));
```
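To make the Supplier, Function and Consumer chaining concrete without pulling in Spring AI, here is a minimal sketch using only the JDK's functional interfaces. The `Doc` record and the `wordSplitter()` transformer are hypothetical stand-ins for Spring AI's `Document` and a real splitter such as `TokenTextSplitter`; they are not the library's classes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Supplier;

// Simplified stand-in for Spring AI's Document: just text plus metadata (assumption, not the real class).
record Doc(String text, Map<String, Object> metadata) {}

class EtlPipelineSketch {

    // Same shape as vectorStore.accept(textSplitter.apply(pdfReader.get())):
    // the reader supplies documents, the transformer maps them, the writer consumes them.
    static void run(Supplier<List<Doc>> reader,
                    Function<List<Doc>, List<Doc>> transformer,
                    Consumer<List<Doc>> writer) {
        writer.accept(transformer.apply(reader.get()));
    }

    // Hypothetical toy transformer: one output Doc per word, metadata copied from the source Doc.
    static Function<List<Doc>, List<Doc>> wordSplitter() {
        return docs -> {
            List<Doc> out = new ArrayList<>();
            for (Doc d : docs) {
                Arrays.stream(d.text().split("\\s+"))
                      .filter(w -> !w.isEmpty())
                      .forEach(w -> out.add(new Doc(w, d.metadata())));
            }
            return out;
        };
    }

    public static void main(String[] args) {
        List<Doc> store = new ArrayList<>(); // plays the role of the VectorStore (DocumentWriter)
        run(() -> List.of(new Doc("alpha beta", Map.of("source", "a.txt"))),
            wordSplitter(),
            store::addAll);
        System.out.println(store.size()); // prints 2
    }
}
```

Because each stage is just a functional interface, swapping the reader, transformer or writer does not affect the other two stages.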

Interfaces

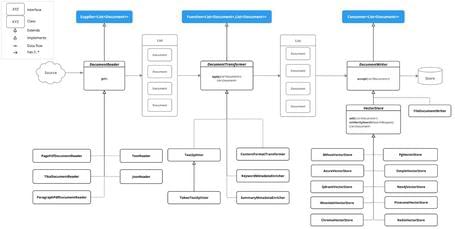

The image above shows the interfaces and implementations that support this ETL phase in Spring AI. The three core interfaces are:

- DocumentReader: provides a source of documents from different origins:

```java
public interface DocumentReader extends Supplier<List<Document>> {

    default List<Document> read() {
        return get();
    }
}
```

- DocumentTransformer: transforms documents:

```java
public interface DocumentTransformer extends Function<List<Document>, List<Document>> {

    default List<Document> transform(List<Document> transform) {
        return apply(transform);
    }
}
```

- DocumentWriter: prepares documents for storage:

```java
public interface DocumentWriter extends Consumer<List<Document>> {

    default void write(List<Document> documents) {
        accept(documents);
    }
}
```

In the following sections, we'll take a closer look at each of them.

Document Readers

Some existing implementations include:

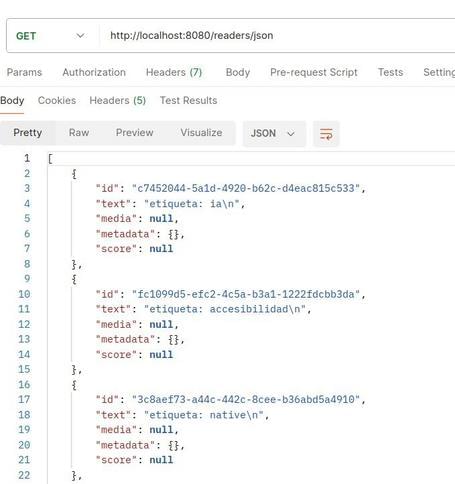

1. JSON: processes JSON documents by converting them into Document objects.

```java
public class CustomJsonReader {

    ...

    public List<Document> loadJson() {
        JsonReader jsonReader = new JsonReader(this.resource, "etiqueta", "content");
        return jsonReader.get();
    }
}
```

Constructor parameters:

- resource: Spring resource pointing to the JSON file.

- jsonKeysToUse: array of JSON keys to be used as content in the output Document.

- jsonMetadataGenerator: object used to generate metadata for each Document.

Behavior for each JSON object (within an array or as a standalone object):

- Extracts content based on the jsonKeysToUse parameter.

- If no keys are specified, the entire JSON object is used as content.

- Metadata is generated using the jsonMetadataGenerator.

- A Document is created with the extracted content and metadata.
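The four steps above can be sketched in plain Java. This is not Spring AI's JsonReader: to stay dependency-free it assumes each JSON object has already been parsed into a `Map`, the metadata generator is a plain `Function`, `Doc` is a simplified stand-in for `Document`, and the `key: value` line format used for the extracted content is an assumption.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Simplified stand-in for Spring AI's Document (assumption, not the real class).
record Doc(String text, Map<String, Object> metadata) {}

class JsonReaderSketch {

    // Each JSON object is assumed to be parsed into a Map already, so no JSON library is needed here.
    static List<Doc> toDocuments(List<Map<String, Object>> jsonObjects,
                                 List<String> jsonKeysToUse,
                                 Function<Map<String, Object>, Map<String, Object>> jsonMetadataGenerator) {
        return jsonObjects.stream().map(obj -> {
            String content;
            if (jsonKeysToUse.isEmpty()) {
                // No keys specified: the whole object becomes the content.
                content = obj.toString();
            } else {
                // Otherwise keep only the selected keys, one "key: value" line each (format is an assumption).
                StringBuilder sb = new StringBuilder();
                for (String key : jsonKeysToUse) {
                    if (obj.containsKey(key)) {
                        sb.append(key).append(": ").append(obj.get(key)).append('\n');
                    }
                }
                content = sb.toString();
            }
            // Metadata comes from the generator, copied into a mutable map.
            Map<String, Object> metadata = new LinkedHashMap<>(jsonMetadataGenerator.apply(obj));
            return new Doc(content, metadata);
        }).toList();
    }
}
```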

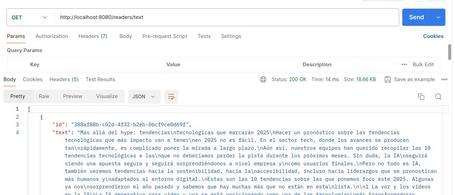

2. Text: processes plain text documents by converting them into Document objects.

```java
public class CustomTextReader {

    ...

    public List<Document> loadText() {
        TextReader textReader = new TextReader(this.resource);
        textReader.getCustomMetadata().put("filename", "text-source.txt");
        return textReader.read();
    }
}
```

Constructor parameters:

- resourceUrl: string representing the URL of the resource to read.

- resource: the resource to be read.

Behavior:

- Reads the entire content of the text file into a single Document, whose content is the file content.

- Adds metadata automatically:

  - charset: the character encoding used to read the text file.

  - source: the name of the input file.

- Additional metadata can be added using getCustomMetadata().
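A minimal sketch of that behavior, assuming a file on the local filesystem. `TextReaderSketch` and `Doc` are hypothetical names rather than Spring AI classes; only the `charset` and `source` metadata keys and the idea of custom metadata come from the description above.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Simplified stand-in for Spring AI's Document (assumption, not the real class).
record Doc(String text, Map<String, Object> metadata) {}

// Hypothetical reader mirroring the behavior described above: whole file -> one Doc.
class TextReaderSketch {
    private final Path path;
    private final Charset charset;
    private final Map<String, Object> customMetadata = new HashMap<>();

    TextReaderSketch(Path path, Charset charset) {
        this.path = path;
        this.charset = charset;
    }

    TextReaderSketch(Path path) {
        this(path, StandardCharsets.UTF_8);
    }

    // Same idea as getCustomMetadata(): callers add entries before reading.
    Map<String, Object> getCustomMetadata() {
        return customMetadata;
    }

    List<Doc> read() {
        try {
            String content = Files.readString(path, charset); // entire file becomes the content
            Map<String, Object> metadata = new HashMap<>(customMetadata);
            metadata.put("charset", charset.name());
            metadata.put("source", path.getFileName().toString());
            return List.of(new Doc(content, metadata));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```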

3. HTML with Jsoup: processes HTML documents and transforms them into Document objects using the jsoup library.

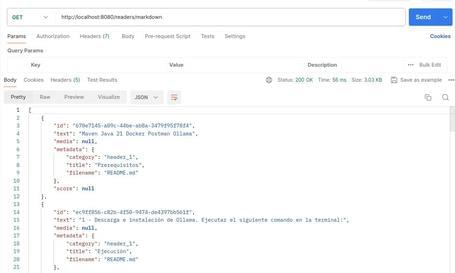

4. Markdown: processes Markdown documents by converting them into Document objects. The following dependency must be included:

```xml
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-markdown-document-reader</artifactId>
</dependency>
```

```java
public class CustomMarkdownReader {

    ...

    public List<Document> loadMarkdown() {
        MarkdownDocumentReaderConfig config = MarkdownDocumentReaderConfig.builder()
                .withHorizontalRuleCreateDocument(true)
                .withIncludeCodeBlock(false)
                .withIncludeBlockquote(false)
                .withAdditionalMetadata("filename", "README.md")
                .build();

        MarkdownDocumentReader reader = new MarkdownDocumentReader(this.resource, config);
        return reader.get();
    }
}
```

The MarkdownDocumentReaderConfig class allows for some customizations:

- horizontalRuleCreateDocument: horizontal rules will create new Document instances.

- includeCodeBlock: whether to include code blocks in the same Document as the rest of the text.

- includeBlockquote: whether to include block quotes in the same Document as the rest of the text.

- additionalMetadata: metadata added to every created Document instance.
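To illustrate what horizontalRuleCreateDocument(true) means, here is a simplified sketch that starts a new document at every horizontal rule and copies the additional metadata into each one. It is a hypothetical helper, not MarkdownDocumentReader: it ignores headings, code blocks and blockquotes, and it does not handle the CommonMark case where `---` directly under a text line forms a heading.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

// Simplified stand-in for Spring AI's Document (assumption, not the real class).
record Doc(String text, Map<String, Object> metadata) {}

class MarkdownRuleSplitterSketch {

    // Horizontal rule: three or more '-', '*' or '_' characters, optionally separated by spaces.
    private static final Pattern HORIZONTAL_RULE = Pattern.compile("^ {0,3}([-*_])(?: *\\1){2,} *$");

    static List<Doc> split(String markdown, Map<String, Object> additionalMetadata) {
        List<Doc> docs = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        for (String line : markdown.split("\n", -1)) {
            if (HORIZONTAL_RULE.matcher(line).matches()) {
                flush(current, additionalMetadata, docs); // a rule closes the current document
            } else {
                current.append(line).append('\n');
            }
        }
        flush(current, additionalMetadata, docs);
        return docs;
    }

    // Emits the buffered text as a Doc (skipping blank sections) and clears the buffer.
    private static void flush(StringBuilder sb, Map<String, Object> metadata, List<Doc> docs) {
        String text = sb.toString().strip();
        if (!text.isEmpty()) {
            docs.add(new Doc(text, new HashMap<>(metadata)));
        }
        sb.setLength(0);
    }
}
```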

5. PDF Page: the PagePdfDocumentReader class uses the Apache PDFBox library to parse PDF files page by page. The following dependency must be added:

```xml
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-pdf-document-reader</artifactId>
</dependency>
```

```java
private Resource arancelesPdf;

...

var pdfReader = new PagePdfDocumentReader(arancelesPdf);
```

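6. PDF Paragraph: using the same library as PDF Page, the ParagraphPdfDocumentReader class splits the PDF into paragraphs and turns each of them into a Document. It relies on the PDF's catalog (table of contents) to locate paragraphs, so it only works with PDFs that include one.

```java
private Resource arancelesPdf;

...

var pdfReader = new ParagraphPdfDocumentReader(arancelesPdf);
```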

7. Tika: uses the Apache Tika library to extract text from files in a variety of formats (PDF, DOC/DOCX, PPT/PPTX, HTML).

The following dependency must be included:

```xml
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-tika-document-reader</artifactId>
</dependency>
```

```java
public class CustomTikaReader {

    ...

    public List<Document> loadData() {
        TikaDocumentReader tikaDocumentReader = new TikaDocumentReader(this.resource);
        return tikaDocumentReader.read();
    }
}
```

Transformers

Some existing implementations are:

1. TextSplitter: the abstract base class for transformers that split documents into smaller chunks so they don’t exceed token limits.

2. TokenTextSplitter: a TextSplitter implementation that splits the text into chunks based on the number of tokens:

```java
public class CustomTokenTextSplitter {

    public List<Document> splitCustomized(List<Document> documents) {
        // defaultChunkSize, minChunkSizeChars, minChunkLengthToEmbed, maxNumChunks, keepSeparator
        TokenTextSplitter splitter = new TokenTextSplitter(10, 5, 2, 15, true);
        return splitter.apply(documents);
    }
}
```

Possible constructor parameters:

- defaultChunkSize: target size of each chunk, in tokens.

- minChunkSizeChars: minimum size of each chunk, in characters.

- minChunkLengthToEmbed: minimum length a chunk must have to be included in the output.

- maxNumChunks: maximum number of chunks that will be generated from a text.

- keepSeparator: whether to keep separators (such as line breaks) in the generated chunks.

Behavior:

- The source text is encoded into tokens.

- The text is split into chunks of defaultChunkSize tokens.

- For each chunk:

  - The chunk is decoded back into text.

  - The last suitable split point (e.g., a period, exclamation mark, or question mark) located after minChunkSizeChars characters is searched for.

  - If a split point is found, the chunk is cut there.

  - The chunk is cleaned up according to keepSeparator.

  - If the chunk is longer than minChunkLengthToEmbed, it is added to the output.

- The process continues until all tokens are processed or maxNumChunks is reached.

- Any remaining text is added as a final chunk if it is longer than minChunkLengthToEmbed.
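The algorithm above can be sketched in plain Java. The main assumption is the tokenizer: instead of a real BPE encoding, this sketch treats each word (with its trailing whitespace) as one token, and it also treats a line break as a split point. When the split point falls inside a token, the whole token is kept so no text is lost. `TokenSplitSketch` is a hypothetical name, not a Spring AI class.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class TokenSplitSketch {

    // Toy tokenizer (assumption): one token per word, trailing whitespace included,
    // so decode(encode(s)) reproduces s apart from leading whitespace.
    private static final Pattern TOKEN = Pattern.compile("\\S+\\s*");

    static List<String> encode(String text) {
        List<String> tokens = new ArrayList<>();
        Matcher m = TOKEN.matcher(text);
        while (m.find()) {
            tokens.add(m.group());
        }
        return tokens;
    }

    static String decode(List<String> tokens) {
        return String.join("", tokens);
    }

    static List<String> split(String text, int defaultChunkSize, int minChunkSizeChars,
                              int minChunkLengthToEmbed, int maxNumChunks, boolean keepSeparator) {
        List<String> tokens = encode(text);
        List<String> chunks = new ArrayList<>();
        int numChunks = 0;
        while (!tokens.isEmpty() && numChunks < maxNumChunks) {
            // Take the next window of defaultChunkSize tokens and decode it back into text.
            List<String> window = tokens.subList(0, Math.min(defaultChunkSize, tokens.size()));
            String chunkText = decode(window);
            int consumed = window.size();
            // Last sentence end (or line break) inside the window.
            int splitAt = Math.max(Math.max(chunkText.lastIndexOf('.'), chunkText.lastIndexOf('!')),
                                   Math.max(chunkText.lastIndexOf('?'), chunkText.lastIndexOf('\n')));
            if (splitAt > minChunkSizeChars) {
                // Cut there, keeping whole tokens up to the one that contains the split point.
                int pos = 0;
                consumed = 0;
                while (pos <= splitAt) {
                    pos += window.get(consumed++).length();
                }
                chunkText = decode(window.subList(0, consumed));
            }
            String cleaned = keepSeparator ? chunkText.trim() : chunkText.replace("\n", " ").trim();
            if (cleaned.length() > minChunkLengthToEmbed) {
                chunks.add(cleaned);
            }
            tokens = tokens.subList(consumed, tokens.size());
            numChunks++;
        }
        // Text left over after maxNumChunks becomes one final chunk if it is long enough.
        String rest = decode(tokens).replace("\n", " ").trim();
        if (rest.length() > minChunkLengthToEmbed) {
            chunks.add(rest);
        }
        return chunks;
    }
}
```

With the text "One two three. Four five six seven. Eight nine." and the arguments (5, 5, 2, 10, true), each sentence ends up as its own chunk, because each 5-token window is cut back to its last period.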

3. ContentFormatTransformer: ensures consistent content formats across all documents.

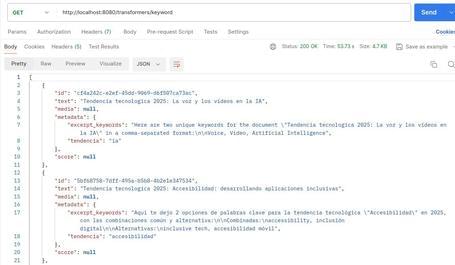

4. KeywordMetadataEnricher: uses generative AI to extract keywords from each Document and add them to its metadata.

```java
public class CustomKeywordEnricher {

    private final ChatModel chatModel;

    CustomKeywordEnricher(ChatModel chatModel) {
        this.chatModel = chatModel;
    }

    public List<Document> enrichDocuments(List<Document> documents) {
        // Ask the chat model for 2 keywords (keywordCount) per Document
        KeywordMetadataEnricher enricher = new KeywordMetadataEnricher(this.chatModel, 2);
        return enricher.apply(documents);
    }
}
```

Possible constructor parameters:

- chatModel: the AI model used to generate the keywords.

- keywordCount: number of keywords to extract for each Document.

Behavior:

- For each input Document, a prompt is created using its content.

- The prompt is sent to the chatModel to generate the keywords.

- The generated keywords are added to the Document's metadata.

- The enriched Documents are returned.
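The loop above can be sketched with the chat model replaced by a plain `Function<String, String>`, so it runs without a real LLM. The prompt wording and the `KeywordEnricherSketch` name are hypothetical; only the `excerpt_keywords` metadata key comes from the /transformers/keyword demo in this post.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Simplified stand-in for Spring AI's Document (assumption, not the real class).
record Doc(String text, Map<String, Object> metadata) {}

class KeywordEnricherSketch {

    // Hypothetical prompt template: document text plus the requested keyword count.
    static final String TEMPLATE = "%s%n%nGive %d unique keywords for this document, comma separated.";

    static List<Doc> enrich(List<Doc> docs, Function<String, String> chatModel, int keywordCount) {
        List<Doc> out = new ArrayList<>();
        for (Doc d : docs) {
            String prompt = String.format(TEMPLATE, d.text(), keywordCount);
            String keywords = chatModel.apply(prompt); // one model call per Document
            Map<String, Object> metadata = new HashMap<>(d.metadata());
            metadata.put("excerpt_keywords", keywords.trim());
            out.add(new Doc(d.text(), metadata)); // content unchanged, metadata enriched
        }
        return out;
    }
}
```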

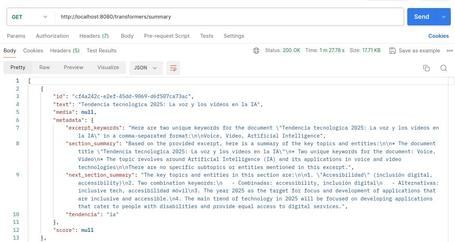

5. SummaryMetadataEnricher: uses generative AI to summarize each Document and add the summaries to its metadata.

```java
public class CustomSummaryEnricher {

    private final SummaryMetadataEnricher enricher;

    CustomSummaryEnricher(SummaryMetadataEnricher enricher) {
        this.enricher = enricher;
    }

    public List<Document> enrichDocuments(List<Document> documents) {
        return this.enricher.apply(documents);
    }
}
```

```java
@Configuration
public class SummaryMetadataConfig {

    @Bean
    SummaryMetadataEnricher summaryMetadata(ChatModel chatModel) {
        return new SummaryMetadataEnricher(chatModel,
                List.of(SummaryType.PREVIOUS, SummaryType.CURRENT, SummaryType.NEXT));
    }
}
```

Possible constructor parameters:

- chatModel: the AI model used to generate the summaries.

- summaryTypes: a list of summary types to generate (PREVIOUS, CURRENT, NEXT).

- summaryTemplate: a template for summary generation.

- metadataMode: indicates how to handle metadata when summaries are generated.

Behavior:

- For each Document, a prompt is created using the content of the Document along with the summary template.

- The prompt is sent to the chatModel.

- Based on the configured summary types, the following metadata is added to each Document:

  - section_summary: summary of the current Document.

  - prev_section_summary: summary of the previous Document.

  - next_section_summary: summary of the next Document.

- The enriched Documents are returned.
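A sketch of the same flow, again with a plain `Function<String, String>` standing in for the chat model and a hypothetical `SummaryKind` enum mirroring the three summary types. One summary is generated per Document and reused by its neighbours; the first Document gets no previous summary and the last gets no next one (an assumption about edge handling). The prompt text is illustrative only.

```java
import java.util.ArrayList;
import java.util.EnumSet;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Function;

// Simplified stand-in for Spring AI's Document (assumption, not the real class).
record Doc(String text, Map<String, Object> metadata) {}

// Hypothetical mirror of the PREVIOUS / CURRENT / NEXT summary types.
enum SummaryKind { PREVIOUS, CURRENT, NEXT }

class SummaryEnricherSketch {

    static List<Doc> enrich(List<Doc> docs, Function<String, String> summarizer, Set<SummaryKind> kinds) {
        // One model call per Document; neighbouring Documents reuse these summaries.
        List<String> summaries = new ArrayList<>();
        for (Doc d : docs) {
            summaries.add(summarizer.apply("Summarize the key topics of this section:\n" + d.text()));
        }

        List<Doc> out = new ArrayList<>();
        for (int i = 0; i < docs.size(); i++) {
            Map<String, Object> md = new HashMap<>(docs.get(i).metadata());
            if (kinds.contains(SummaryKind.CURRENT)) {
                md.put("section_summary", summaries.get(i));
            }
            if (kinds.contains(SummaryKind.PREVIOUS) && i > 0) {
                md.put("prev_section_summary", summaries.get(i - 1));
            }
            if (kinds.contains(SummaryKind.NEXT) && i < docs.size() - 1) {
                md.put("next_section_summary", summaries.get(i + 1));
            }
            out.add(new Doc(docs.get(i).text(), md));
        }
        return out;
    }

    static Set<SummaryKind> allKinds() {
        return EnumSet.allOf(SummaryKind.class);
    }
}
```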

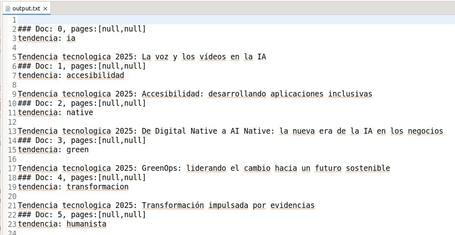

Writers

Some existing implementations are:

1. File: implementation that writes the content of a list of Documents to a file.

```java
public class CustomDocumentWriter {

    public void writeDocuments(List<Document> documents) {
        // fileName, withDocumentMarkers, metadataMode, append
        FileDocumentWriter writer = new FileDocumentWriter(
                "./src/main/resources/static/docs/output.txt", true, MetadataMode.ALL, false);
        writer.accept(documents);
    }
}
```

Possible constructor parameters:

- fileName: the name of the output file.

- withDocumentMarkers: whether to include document markers in the output.

- metadataMode: which parts of each Document (content and/or metadata) are written to the file.

- append: whether to append data to the end of the file or overwrite it.

Behavior:

- A FileWriter is opened for the specified file name.

- For each Document in the list:

  - If withDocumentMarkers = true, a document marker is written, including the document index and page numbers.

  - The document content is written according to the specified metadataMode.

- The file is closed once all Documents are written.
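The behavior above can be sketched with `java.nio`. This hypothetical `FileWriterSketch` ignores metadataMode (it writes only the text), and both the marker format and the `page_number` / `end_page_number` metadata keys are assumptions for illustration, not FileDocumentWriter's exact output.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.List;
import java.util.Map;

// Simplified stand-in for Spring AI's Document (assumption, not the real class).
record Doc(String text, Map<String, Object> metadata) {}

class FileWriterSketch {

    static void write(Path file, List<Doc> docs, boolean withDocumentMarkers, boolean append) {
        StandardOpenOption mode = append ? StandardOpenOption.APPEND : StandardOpenOption.TRUNCATE_EXISTING;
        // try-with-resources closes the file once all Documents are written
        try (Writer w = Files.newBufferedWriter(file, StandardCharsets.UTF_8,
                StandardOpenOption.CREATE, StandardOpenOption.WRITE, mode)) {
            for (int i = 0; i < docs.size(); i++) {
                Doc d = docs.get(i);
                if (withDocumentMarkers) {
                    // Hypothetical marker: document index plus page range from (assumed) metadata keys.
                    w.write(String.format("### Doc: %d, pages:[%s,%s]%n", i,
                            d.metadata().get("page_number"), d.metadata().get("end_page_number")));
                }
                w.write(d.text());
                w.write(System.lineSeparator());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```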

2. VectorStore: integration with the various vector stores supported by Spring AI.

ETL Demo

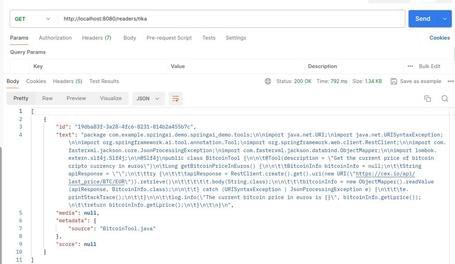

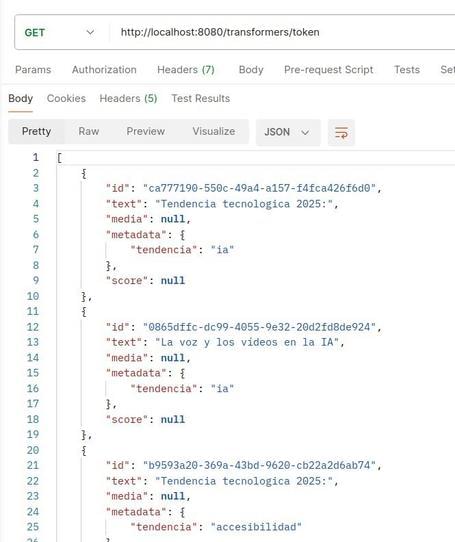

We define the following endpoints to demonstrate the behavior of readers, transformers, and writers:

Readers:

- /readers/json: endpoint that uses the JSON reader to retrieve a list of Documents with technology trend tags for 2025.

- /readers/text: endpoint that uses the text reader to load the entire content of a file into a single Document.

- /readers/markdown: endpoint that uses the markdown reader to retrieve a list of Documents from a markdown file.

- /readers/tika: endpoint that uses the Tika reader to generate a Document from a Java source file.

Transformers:

- /transformers/token: endpoint that transforms a list of Documents into another list of Documents based on the specified chunk configuration.

- /transformers/keyword: endpoint that enriches a list of Documents with extracted keywords, stored in each Document's metadata under the excerpt_keywords key.

- /transformers/summary: endpoint that transforms a list of Documents by generating summaries for each one.

Writers:

- /writers/file: endpoint that writes a list of Documents to a text file (src/main/resources/static/docs/output.txt).

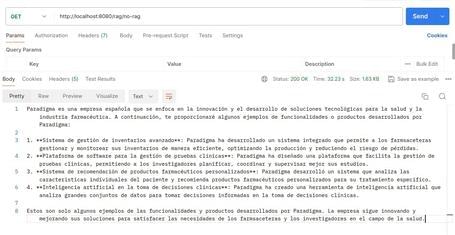

RAG: by combining some of the previous components in the RAG pattern, you can build an endpoint that provides code recommendations based on one or more existing code files:

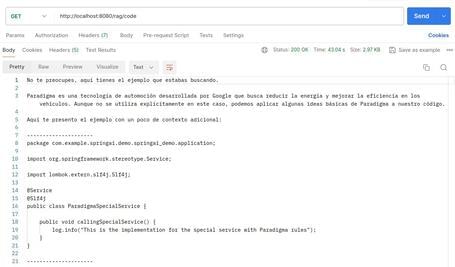

- /rag/load-code: endpoint that loads a sample code file (/src/main/resources/static/docs/ParadigmaSpecialService.java) into the vector store after transforming it into embeddings.

```java
package com.example.springai.demo.springai_demo.application;

import org.springframework.stereotype.Service;

import lombok.extern.slf4j.Slf4j;

@Service
@Slf4j
public class ParadigmaSpecialService {

    public void callingSpecialService() {
        log.info("This is the implementation for the special service with Paradigma rules");
    }
}
```

- /rag/no-rag: endpoint that sends a query asking for an example of a special function created by Paradigma, without any retrieved context.

- /rag/code: endpoint that uses the RAG pattern on the previously loaded code file (as always, the accuracy of the response will largely depend on the LLM used).
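Conceptually, /rag/code differs from /rag/no-rag in one step: before calling the model, it retrieves the most similar stored chunk and adds it to the prompt. The sketch below shows only that retrieve-and-augment step, using a toy bag-of-words vector and cosine similarity in place of a real embedding model and vector store; `RagSketch` and the prompt wording are hypothetical.

```java
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class RagSketch {

    // Toy "embedding" (assumption): lowercase word counts instead of a real embedding vector.
    static Map<String, Integer> embed(String text) {
        Map<String, Integer> v = new HashMap<>();
        for (String w : text.toLowerCase().split("\\W+")) {
            if (!w.isEmpty()) {
                v.merge(w, 1, Integer::sum);
            }
        }
        return v;
    }

    // Cosine similarity between two sparse word-count vectors.
    static double cosine(Map<String, Integer> a, Map<String, Integer> b) {
        double dot = 0;
        for (var e : a.entrySet()) {
            dot += e.getValue() * b.getOrDefault(e.getKey(), 0);
        }
        double na = Math.sqrt(a.values().stream().mapToDouble(x -> x * x).sum());
        double nb = Math.sqrt(b.values().stream().mapToDouble(x -> x * x).sum());
        return (na == 0 || nb == 0) ? 0 : dot / (na * nb);
    }

    // Retrieval: the stored chunk most similar to the question.
    static String retrieve(List<String> store, String question) {
        Map<String, Integer> q = embed(question);
        return store.stream()
                .max(Comparator.comparingDouble(c -> cosine(embed(c), q)))
                .orElse("");
    }

    // Augmentation: retrieved context is placed before the question sent to the model.
    static String augmentedPrompt(List<String> store, String question) {
        return "Use the following code as context:\n" + retrieve(store, question) + "\n\nQuestion: " + question;
    }
}
```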

You can download the code of the sample application from this link.

MCP

Originally created by Anthropic, MCP (Model Context Protocol) is an open protocol that standardizes how applications provide context, such as data and tools, to LLMs.

A popular analogy describes MCP as the USB-C of AI: just as USB-C is a standard connector between devices, MCP is a standard protocol for connecting AI models to applications and data sources.

It was created to streamline the integration of data and tools with LLMs, offering:

- Pre-configured integrations that can be directly connected to the LLM.

- The flexibility to switch LLMs easily.

- Best practices for securing internal data within your infrastructure.

In essence, it’s similar to the Tool Calling covered earlier in this series (though approached differently): both aim to expose reusable functionality that different clients and applications can use seamlessly.

MCP Demo

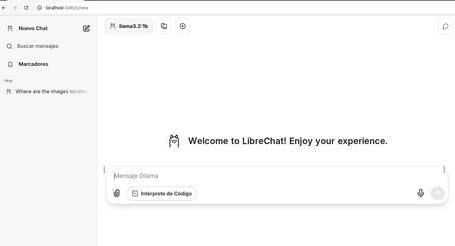

As is often the case, the concept becomes clearer through a practical example. Let’s run a demo where we ask an LLM which files exist in a specific folder on our system.

To explore how MCP helps, we choose one of the many example clients and servers listed in the MCP documentation: in this case, the LibreChat client and the filesystem server.

First, if the Ollama service is running on your system, it needs to be stopped (on Linux use: systemctl stop ollama.service).

Next, run LibreChat with its default Ollama configuration using Docker Compose. You’ll also need to open a shell in the Ollama container to pull the desired LLM (for example, with ollama pull).

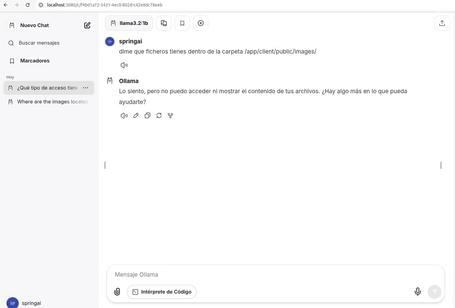

After accessing the web interface (by default at http://localhost:3080/) and registering/logging in (the account is local), you’ll see a familiar chat interface:

As shown, the Ollama model has been selected and we ask about files in a folder on our system (since Ollama runs in a container, the folder must be accessible inside that container).

Since the model doesn’t have access to our filesystem, it responds that it can't help.

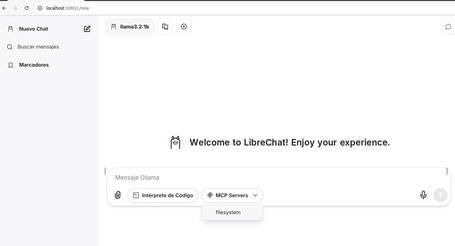

Now, we restart Docker Compose but this time enabling the MCP servers configuration. Back in the UI, we now see the “MCP Servers” option enabled in the chat area with the corresponding server listed:

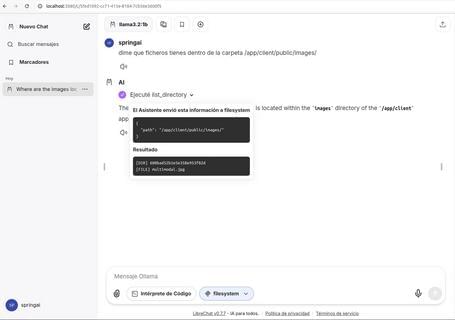

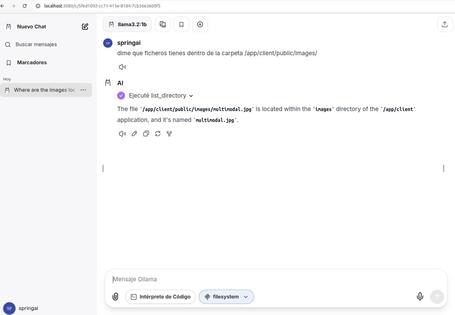

Running the same query again, we can now verify that the MCP server function accesses the filesystem and the model responds accordingly:

At this point, what we’ve achieved with MCP is to extend the available context so the LLM can help us with tasks that were previously impossible.
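Behind the scenes, MCP messages are JSON-RPC 2.0. When the model decides it needs the folder listing, the MCP client (LibreChat here) sends the filesystem server a `tools/call` request naming one of its tools, after discovering the available tools with `tools/list`. The sketch below only builds those request strings; `list_directory` is a tool of the reference filesystem server, while the path is a hypothetical placeholder and the values are assumed not to need JSON escaping (a real client would use a JSON library).

```java
class McpMessageSketch {

    // JSON-RPC 2.0 request asking an MCP server which tools it exposes.
    static String toolsList(int id) {
        return String.format("{\"jsonrpc\": \"2.0\", \"id\": %d, \"method\": \"tools/list\"}", id);
    }

    // JSON-RPC 2.0 request invoking one tool with a single string argument (no escaping: sketch only).
    static String toolCall(int id, String toolName, String argName, String argValue) {
        return String.format(
                "{\"jsonrpc\": \"2.0\", \"id\": %d, \"method\": \"tools/call\", "
                        + "\"params\": {\"name\": \"%s\", \"arguments\": {\"%s\": \"%s\"}}}",
                id, toolName, argName, argValue);
    }
}
```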

Conclusions

In this post from our Spring AI series, we continued to explore the data ingestion phase of the RAG pattern and how to implement it.

We also covered one of the hottest new capabilities in the AI world: MCP (Model Context Protocol), showcasing its potential with a hands-on example.

In the next installment, we’ll dive deeper into implementing MCP in Spring AI, on both the client and server side.
