Text generation has a large impact on society and, as a result, there is the need to evaluate this paradigm: How good is my prompt? How do I know if my LLM has stopped working properly? Intuitively, it would seem that a human being is the best option for answering these questions.

What happens, however, if we want to conduct a consistent, automatic evaluation? And what if these questions were answered by... another LLM?

Introduction

Evaluating an LLM-based system is key to ensuring that:

- The system will yield quality results once in production, and

- The system will maintain that quality over time.

In this post we will talk about how to evaluate these systems using metrics, focusing on LLM-based metrics. These are metrics that use an LLM to evaluate the outputs of another LLM.

First of all, we will describe some difficulties that appear when evaluating an LLM and the main aspects to take into account. Then we will talk about the existing metrics that we consider the most relevant, as well as some useful libraries and frameworks.

Finally, we will discuss the application of LLM-based metrics at Paradigma.

Premises: difficulties when evaluating

There are a series of factors and elements that make the evaluation of an LLM a non-trivial matter. These difficulties need to be identified in order for an evaluation methodology to be as robust as possible.

Below are some of the factors that make evaluation difficult:

- The subjectivity of language. When we talk about evaluating an LLM or a specific prompt, we need to keep one thing clear: language is essentially subjective in nature. This is why, when labelling datasets for NLP, several annotators are usually required, and why the labelling usually goes hand in hand with metrics that measure the agreement between annotators. This clearly shows that, even with agreed-upon criteria for evaluating or annotating a piece of text, it is hard to reach a unanimous consensus.

- Datasets. Another recurring difficulty in generative AI projects is the availability of a labelled dataset. Labelling a set of texts to build an evaluation set for our system is a tedious process. The labelled set can be, e.g., pairs of questions and their respective answers to evaluate a RAG system, or a set of texts and JSON files with information drawn from those texts to evaluate a structured information extraction task. Therefore, it is also important to decide whether we want to carry out a “supervised” evaluation and, if so, to agree on and build the most robust set possible.

- What do we want to evaluate? Establishing the criteria we would like to use to evaluate the content generated by an LLM is also essential. These are some of the aspects that can be evaluated:

- The form of the message. In other words, is the LLM answering with good enough manners? Are certain words being repeatedly used across the various generated answers? Is the language used by the LLM the one we want for this task?

- The correctness of the information. We want to answer questions like: Is my system providing answers with all the possible information? Is the information provided by my system in its answers accurate or invented?

- Other aspects. There are other aspects that need to be taken into consideration when evaluating an LLM. For example, how vulnerable is the LLM to prompt injections? Is the format of the answer the one I need?
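The agreement between annotators mentioned in the point on subjectivity can be quantified. One common choice is Cohen's kappa, which corrects the raw agreement rate for the agreement expected by chance. The sketch below is a minimal pure-Python version for two annotators; the labels and the two annotation lists are invented for illustration only.

```python
from collections import Counter

def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labelled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: fraction of items that received identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, estimated from each annotator's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one single, identical label
    return (observed - expected) / (1 - expected)

# Hypothetical annotations of six generated answers.
annotator_1 = ["good", "good", "bad", "good", "bad", "bad"]
annotator_2 = ["good", "bad", "bad", "good", "bad", "good"]
kappa = cohen_kappa(annotator_1, annotator_2)
```

A kappa of 1 means perfect agreement and a value near 0 means agreement no better than chance, so even a seemingly high raw agreement rate can hide a weak consensus.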

Non LLM-based metrics

Within NLP there is a series of tasks whose evaluation does not require a “human” understanding of the content generated by an LLM, as they do not call for much creativity on the part of the system.

Now we will define some of the metrics that are used in evaluating these systems:

- Literal similarity metrics: these metrics measure the word overlap between two texts (e.g. ROUGE or BLEU) and are typically used in tasks where the LLM has few degrees of freedom in what it generates (such as machine translation).

- Semantic similarity metrics: these metrics measure the non-literal similarity between texts and are used for more creative tasks, such as a RAG system or Q&A tasks. Some of the metrics in the literature are BERTScore and those that measure the similarity between embeddings.

- Classic classification metrics for simpler tasks such as text classification, named-entity recognition and information extraction.
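To make the difference between literal and semantic similarity concrete, here is a small sketch. `unigram_f1` is a simplified, ROUGE-1-style word overlap (it is not the official ROUGE implementation), and `cosine_similarity` is the usual way of comparing two embedding vectors. The example sentences are made up, and the embeddings themselves would have to come from whichever embedding model is in use.

```python
import math
from collections import Counter

def unigram_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1-style F1 over lowercase whitespace tokens."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # shared tokens, respecting counts
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)

def cosine_similarity(u, v) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

reference = "the invoice was sent yesterday"
same_words = unigram_f1("the invoice was sent yesterday", reference)
paraphrase = unigram_f1("we emailed your bill a day ago", reference)
```

The paraphrase shares no words with the reference, so its literal score collapses even though the meaning is close. This is the gap that embedding-based and LLM-based metrics try to cover.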

LLM-based metrics

When it comes to evaluating an LLM or a prompt, it is very likely that we would like to perform a semantic evaluation, i.e. we want the answers to have a certain semantic content, albeit not always using the same words in the same order. This is why human supervision is the best way to do it.

When it comes to developing and maintaining an LLM-based system, however, the evaluation has to be automatic, which is not feasible if it depends on human supervision.

But what if we were to ask an LLM to understand and evaluate a content like a human being? LLM-based metrics, where an LLM determines whether a text meets certain requirements specified in a prompt, were thus born.

LLM-based metrics can be used to evaluate different tasks or types of system (RAG, Q&A, code generation…) and can either be supervised, i.e. require a ground truth for comparison with the generated text, or unsupervised.
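As a rough sketch of the idea, an LLM-based metric can be reduced to a prompt that states the requirement plus a function that parses the evaluator's reply. Everything below is hypothetical: the prompt wording, the function names and the `judge` callable, which stands for whatever client is used to call the evaluator LLM. The optional `reference` argument is what separates the supervised from the unsupervised case.

```python
from typing import Callable, Optional

# Hypothetical prompt template; real prompts are usually longer and task-specific.
JUDGE_TEMPLATE = (
    "You are evaluating the output of an assistant.\n"
    "Criterion: {criterion}\n"
    "Question: {question}\n"
    "Generated answer: {answer}\n"
    "{reference_block}"
    "Reply with a single word: true or false."
)

def build_judge_prompt(criterion: str, question: str, answer: str,
                       reference: Optional[str] = None) -> str:
    # Supervised metrics include the ground truth; unsupervised ones leave it out.
    reference_block = f"Expected answer: {reference}\n" if reference is not None else ""
    return JUDGE_TEMPLATE.format(
        criterion=criterion,
        question=question,
        answer=answer,
        reference_block=reference_block,
    )

def llm_judge_metric(judge: Callable[[str], str], criterion: str, question: str,
                     answer: str, reference: Optional[str] = None) -> bool:
    """Ask the evaluator LLM (any callable mapping prompt -> reply) for a verdict."""
    reply = judge(build_judge_prompt(criterion, question, answer, reference))
    return reply.strip().lower().startswith("true")

# Stub evaluator so the sketch runs without any API; it always approves.
def stub_judge(prompt: str) -> str:
    return "True"

verdict = llm_judge_metric(
    stub_judge,
    criterion="The answer has the same content as the expected answer.",
    question="When does the office open?",
    answer="It opens at 9 am.",
    reference="The office opens at 9:00.",
)
```

Keeping the evaluator behind a plain callable makes it easy to swap the evaluator model, which matters when checking whether a given evaluator is biased.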

Now we will describe some of the most commonly used LLM-based metrics that we find to be the most interesting:

- Faithfulness: this metric is particularly suited to RAG tasks. It does not need a ground-truth answer, because the reference it checks against is the context provided in the prompt. It is represented as a numerical value between 0 and 1 and answers the following question: How consistent is the generated answer with regard to the provided context?

- Answer relevancy: this is an unsupervised, Q&A system-oriented metric. It measures how relevant an answer is to a question. To calculate it, an LLM is asked to generate synthetic questions based on the generated answer, and the similarity between the embedding of the original question and the embeddings of the synthetic questions is measured. Thus, the aim is to determine how well the question can be reconstructed from the generated answer.

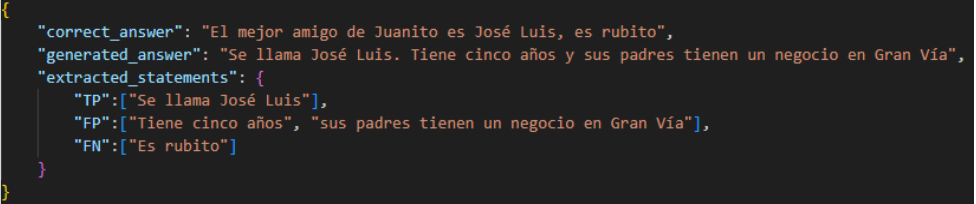

- Answer correctness: this is a supervised metric that is used with RAG systems. It combines two components. The first is a variant of the F-score computed over statements: statements of the generated answer that appear in the ground-truth answer count as true positives, statements of the generated answer that do not appear in the ground-truth answer count as false positives, and statements of the ground-truth answer that do not appear in the generated answer count as false negatives. The second is the cosine similarity between the embeddings of the generated and the expected answers. The final metric is a weighted average of the F-score and this embedding similarity.

- Aspect critique: this is an unsupervised, general-purpose metric that evaluates how correct an answer is with regard to aspects such as “harmfulness”, “maliciousness”, etc.

- Context relevancy: this is an unsupervised metric that evaluates the contexts provided in a prompt and measures, assigning a value ranging from 0 to 1, how relevant the information in the context is for answering a question. A context that has been given a score of 1 would correspond to information that is relevant for answering.

- Context recall: this is a supervised metric that is used with RAG tasks. Starting from a ground-truth answer, it measures whether each sentence of the ground truth can be attributed to the context provided in the prompt. The final value is the ratio of sentences in the ground-truth answer that can actually be attributed to the context.
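Once the LLM has produced its judgements, the arithmetic behind several of these metrics is simple. The sketch below follows the descriptions above and is not the code of any particular library. It assumes the statement counts (TP, FP, FN), the per-sentence attribution flags and the embeddings have already been obtained. The `f1_weight` default of 0.75 is an assumption chosen for illustration, and averaging the similarities in `answer_relevancy` is one reasonable way to aggregate them.

```python
import math
from typing import Sequence

def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def answer_relevancy(question_emb, synthetic_question_embs) -> float:
    """Mean similarity between the original question and the synthetic questions."""
    sims = [cosine_similarity(question_emb, emb) for emb in synthetic_question_embs]
    return sum(sims) / len(sims) if sims else 0.0

def statement_f1(tp: int, fp: int, fn: int) -> float:
    """F1 over statements, written as TP / (TP + 0.5 * (FP + FN))."""
    denominator = tp + 0.5 * (fp + fn)
    return tp / denominator if denominator else 0.0

def answer_correctness(tp: int, fp: int, fn: int, embedding_similarity: float,
                       f1_weight: float = 0.75) -> float:
    """Weighted average of the statement F1 and the embedding similarity.

    f1_weight is an illustrative assumption, not a value taken from the text.
    """
    return f1_weight * statement_f1(tp, fp, fn) + (1 - f1_weight) * embedding_similarity

def context_recall(attributed: Sequence[bool]) -> float:
    """Share of ground-truth sentences that can be attributed to the context."""
    return sum(attributed) / len(attributed) if attributed else 0.0

# Hypothetical judgements for a single generated answer.
correctness = answer_correctness(tp=2, fp=1, fn=1, embedding_similarity=0.9)
recall = context_recall([True, True, False, True])
```

Separating the LLM judgements from the arithmetic also makes the metric easier to audit: the statements labelled as TP, FP or FN can be inspected by a person when a score looks suspicious.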

Libraries and frameworks

In view of the growing importance of LLM-based metrics, newly developed packages and frameworks for supporting the evaluation of LLMs are appearing all the time. We would like to highlight here the following new tools:

- RAGAs: this is a library focused on evaluating RAG systems that implements several of the metrics described above.

- DeepEval: this is a framework that specialises in approaching the evaluation of LLMs as unit tests. It incorporates a large number of metrics, as well as libraries such as RAGAs.

- LangSmith: this is a framework that has many built-in features for managing the lifecycle of an LLM-based system: traceability, prompt versioning, metric logging and so on. It is developed by the LangChain team, so it integrates very closely with LangChain.

- Langfuse: this is an open-source framework that is similar to LangSmith but offers fewer features. Our colleague José María Hernández de la Cruz gave a webinar where he talked about this framework and showed how it is used.
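To illustrate what treating evaluation as unit tests means in practice, here is a sketch in plain Python. It deliberately does not use the API of DeepEval or of any other framework mentioned above. `keyword_coverage` is a hypothetical stand-in for a real metric (in practice this would be an LLM-based score), and the threshold is arbitrary.

```python
import re

def keyword_coverage(question: str, answer: str) -> float:
    """Hypothetical stand-in metric: share of question words echoed in the answer."""
    question_words = set(re.findall(r"\w+", question.lower()))
    answer_words = set(re.findall(r"\w+", answer.lower()))
    if not question_words:
        return 0.0
    return len(question_words & answer_words) / len(question_words)

def assert_metric(score: float, threshold: float, name: str) -> None:
    """Fail the test when a metric falls below its minimum acceptable value."""
    assert score >= threshold, f"{name} = {score:.2f} is below the threshold {threshold:.2f}"

def test_opening_hours_answer():
    # In a real test, the answer would come from the LLM-based system under test.
    answer = "The opening time is 9 am."
    score = keyword_coverage("What is the opening time?", answer)
    assert_metric(score, threshold=0.7, name="keyword_coverage")
```

A test runner such as pytest would collect `test_opening_hours_answer` automatically, so a drop in quality after a prompt or model change shows up as a failing test.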

Real example: Benchmarking

At Paradigma Digital we have already worked with LLM-based metrics. As part of a project developed by the GENAI team, we benchmarked different technologies to obtain a baseline for developing generative AI projects.

We based this project on a contact centre use case, where the evaluation dataset consisted of a set of questions and answers in Spanish that, in theory, a call centre worker would give based on the calls made by selected customers.

LLMs were one of the technologies we benchmarked. In order to do so, we devised metrics (based on some of the ones described above) that we thought would be useful in this use case:

- Accuracy: to calculate this metric, we asked an LLM to tell us if a generated answer had the same content as an expected answer. In this case, the LLM would output ‘true’ or ‘false’ for each ‘generated answer’-‘expected answer’ pair. The metric is calculated as the percentage of true values in the evaluation set. We wrote the prompt that asks the LLM for this judgement in Spanish, so that it was in line with the language of the evaluation set we had used.

- F-score, precision and recall: to calculate these metrics, we used the previously described Answer Correctness metric, whereby we obtained a series of TP, FP and FN statements by asking an LLM. In this case, we came up with a prompt (also in Spanish) in which, furthermore, we integrated a series of few-shot examples to make things easier for the LLM.
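The aggregation step for both metrics can be sketched as follows. This is an illustration of the arithmetic, not the code we used in the project: the function names are hypothetical, the evaluator replies are invented, and how the TP, FP and FN counts are pooled across the evaluation set is left open.

```python
from typing import Iterable, Tuple

def parse_verdict(reply: str) -> bool:
    """Turn the evaluator's 'true' / 'false' reply into a boolean."""
    cleaned = reply.strip().strip("'\".").lower()
    if cleaned == "true":
        return True
    if cleaned == "false":
        return False
    raise ValueError(f"unexpected evaluator reply: {reply!r}")

def accuracy(replies: Iterable[str]) -> float:
    """Percentage of pairs that the evaluator judged as having the same content."""
    verdicts = [parse_verdict(reply) for reply in replies]
    return 100.0 * sum(verdicts) / len(verdicts) if verdicts else 0.0

def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F-score from statement counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f_score = 2 * precision * recall / total if total else 0.0
    return precision, recall, f_score

# Invented replies for four 'generated answer'-'expected answer' pairs.
acc = accuracy(["true", "False", " true\n", "'true'"])
precision, recall, f_score = precision_recall_f1(tp=3, fp=1, fn=2)
```

Raising an error on an unexpected reply, rather than silently counting it as false, makes it visible when the evaluator stops following the requested output format.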

After analysing the calculated metrics, we reached the following conclusions:

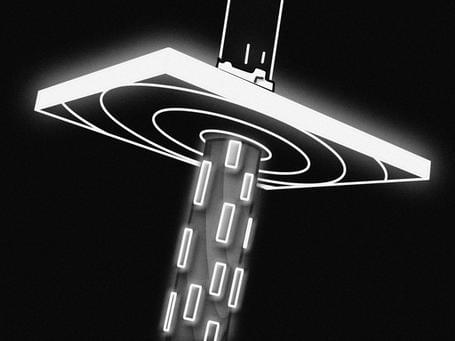

- Evaluating an LLM with itself can introduce a bias in its favour. In particular, we saw this with GPT 3.5 Turbo, which we used as the evaluator when benchmarking that same model against Claude Instant. The metrics showed a clear trend indicating that GPT 3.5 Turbo gave better answers, but when we evaluated the answers manually, we realised that this was not necessarily the case.

After discovering this, we switched to GPT-4 as the evaluator, which is also a more capable model. With GPT-4 as the evaluator, the results leaned towards Claude Instant rather than GPT 3.5 Turbo. The figure above shows this difference for the accuracy metric: with GPT 3.5 as the evaluator (left), it rather clearly favoured what it had generated itself, whereas evaluating with GPT-4 (right) had the opposite effect.

- LLM-based metrics are not perfect. They can give us an indication of the performance of a system but, since they are answers given by an LLM, they carry an intrinsic error. Please note, however, that not even human evaluation is perfect.

Conclusions and discussion

In this post we have briefly covered how an LLM can be evaluated, particularly using other LLMs as evaluators. The more salient points are listed below:

- Language is a subjective and complex thing. In order to evaluate a generated text, metrics other than the classic metrics used in other AI problems need to be used. Metrics that “understand” the text are required.

- Using LLMs to evaluate other LLMs is a new practice that facilitates automating the evaluation and lifecycle of LLM-based applications. Since this is a new practice, research is ongoing, so it will keep evolving. In turn, new, more powerful LLMs will be developed that will be used to evaluate other LLMs in a more “real” way.

- Using an LLM to evaluate itself can inject a certain bias in the metrics, as we have experienced ourselves. This should be taken into account when designing an evaluation flow.

- Evaluating an LLM using another LLM is not a perfect process, but we should keep in mind that human evaluation is not perfect either. It might be advisable to explore hybrid strategies that combine LLM-based metrics with human judgement. This human intervention can take place by means of, e.g., feedback systems through which users of the LLM-based system give their opinion of the quality of the generated content.
