In the 90s, three-tier architectures were booming. During the transition from the client-server model to distributed architectures, industry leaders advocated a pattern in which the interface, the business logic and the database were separated into distinct layers.

This is the three-tier architecture that we have been so familiar with in the J2EE world. Now, at the height of Cloud Computing, we are used to very similar architectures where data is transferred to a centralized location to be processed and then sent back to the requester.

However, there are situations in which this model does not work. For example, what happens if the volume of generated data to be transferred is very large? Both the processing time and the latency of storing or retrieving that data from the cloud will clearly increase.

In many cases, this degrades application performance and has a negative impact on the business.

To solve these problems, new cloud computing paradigms have emerged, such as Edge Computing.

The main objective of this model is to bring processing closer to the data sources, reducing the amount of data transferred. Each edge location mirrors the public cloud by exposing a compatible set of services and endpoints that applications can consume.

In this type of architecture, each Edge Computing unit has its own set of resources: CPU, memory, storage and network. With these resources, each unit performs a specific function, in addition to handling network switching, routing, load balancing and security. This cluster of Edge Computing devices ingests a multitude of data from a variety of sources.

For each piece of data, a set of predefined policies and rules decides the path along which it is processed.

It can be processed locally or sent to the public cloud for further processing. The "hot" data that may be critical will be analyzed, stored and processed immediately by the Edge Computing layer.

Meanwhile, "cold" data are transferred to the public Cloud to perform, for example, long-term analytics.
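
The routing decision described above can be illustrated with a minimal sketch. It is not taken from any particular edge platform: the `Reading` type, the `critical` flag, the `threshold` value and the `route` function are all hypothetical names chosen for this example, and a real policy engine would be considerably richer.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """A single piece of ingested data (hypothetical shape)."""
    source: str
    value: float
    critical: bool  # assumption: the producer marks critical data


def route(reading: Reading, threshold: float = 100.0) -> str:
    """Apply a simple predefined rule: hot data stays at the edge,
    cold data is sent to the public cloud."""
    if reading.critical or reading.value >= threshold:
        return "edge"   # hot: analyze, store and process immediately
    return "cloud"      # cold: forward for long-term analytics


readings = [
    Reading("sensor-a", 12.5, critical=False),
    Reading("sensor-b", 240.0, critical=False),
    Reading("sensor-c", 3.0, critical=True),
]

# Map each source to the layer that will process its data.
destinations = {r.source: route(r) for r in readings}
```

Here `sensor-b` exceeds the threshold and `sensor-c` is flagged as critical, so both are handled at the edge, while `sensor-a` is forwarded to the cloud.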

Edge Computing is often mistakenly thought to be intended only for IoT. In fact, it addresses many of the challenges we face when running workloads in the Cloud.

It allows us to keep certain sensitive data on-premises while we continue to enjoy the elasticity offered by the public Cloud. In addition, it reduces communication latency.

For all these reasons, Edge Computing is a very good fit for running intelligent, data-driven applications. It also offers enormous value for departmental applications and more traditional lines of business.

Regions and edge locations in the global network of a leading public cloud provider.

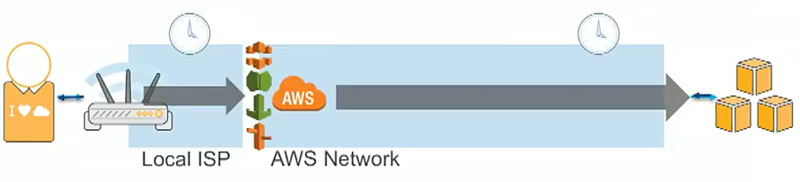

When we talk about Edge Computing or edge locations, the word "edge" does not mean that they sit far away at the outer limit of the network. Quite the opposite.

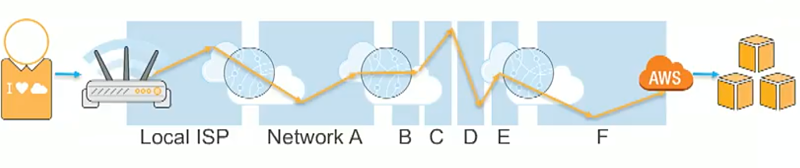

In a web application, the path that a request follows between the end user and our site is made up of a series of hops across different networks around the world.

The response, as well as subsequent requests, may follow the same path or a completely different one.

When we use an edge location, what we want is to simplify and shorten that path, putting our application much closer to the end user.

To make this easier, Amazon offers, among many other services, Lambda@Edge. With it we can run our code in AWS edge locations and respond to our end users with low latency.

Lambda@Edge is used in conjunction with CloudFront, the AWS CDN, to bring application logic to the CloudFront edge servers.

It works in a way that is completely transparent to us. We only have to upload the code of our Lambda functions (Lambda is Amazon's FaaS platform), and AWS takes care of replication, routing and highly available scaling so that the code runs in the edge location closest to the end user.

Among the many use cases of Lambda@Edge, the following are worth mentioning:

- Reduce communication latency. As we saw at the beginning, this is one of the main needs in current Cloud architectures. In addition, it helps reduce data traffic and calls to other services, so it also helps optimize costs.

- Customize the dynamic content of the site. With CloudFront it is very easy to distribute static content from S3 without having to worry about deploying and configuring it in multiple regions around the world. With Lambda@Edge, the same applies to dynamic content. In addition, it allows you to customize content depending on the location of the end user, generate pages on the fly or return special resources depending on the request.

- Modify requests and responses. It allows you to rewrite URLs, control user access at the edge, run A/B tests, perform temporary redirects, customize HTTP headers or redirect visitors according to the attributes of the request (cookies, headers, etc.). It can also route requests to the optimal origin depending on proximity, the location of the data, the user's session information, and so on.
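
As an illustration of the last two use cases, here is a minimal sketch of a Lambda@Edge function written in Python. It assumes the function is attached to a CloudFront origin request trigger and that the distribution is configured to forward the `CloudFront-Viewer-Country` header. The `/old/` and `/new/` paths, the `LOCALIZED` set and the per-country page layout are hypothetical and exist only for this example.

```python
# Hypothetical set of countries for which a localized home page exists.
LOCALIZED = {"ES", "FR"}


def handler(event, context):
    # CloudFront passes the request inside the first record of the event.
    request = event["Records"][0]["cf"]["request"]
    uri = request["uri"]

    # Temporary redirect: returning a response object instead of the
    # request makes CloudFront answer directly from the edge.
    if uri.startswith("/old/"):
        target = "/new/" + uri[len("/old/"):]
        return {
            "status": "302",
            "statusDescription": "Found",
            "headers": {
                "location": [{"key": "Location", "value": target}],
            },
        }

    # Header names are lowercase keys; each maps to a list of key/value pairs.
    country_header = request["headers"].get("cloudfront-viewer-country")
    country = country_header[0]["value"] if country_header else None

    # URL rewrite: serve a localized home page depending on viewer location.
    if uri == "/" and country in LOCALIZED:
        request["uri"] = f"/{country.lower()}/index.html"

    # Returning the (possibly modified) request lets CloudFront continue
    # to the origin as usual.
    return request
```

Returning the request forwards it to the origin, while returning a dictionary with a `status` field short-circuits the request at the edge. The same pattern could be extended to cookie-based A/B tests or access control.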

Products like Lambda@Edge are generating high expectations because of the capabilities they offer.

Not only do they put serverless computing closer to users, but they solve many current problems of Cloud Computing. They are facilitating a new transition in the computing model towards Edge Computing-based architectures.

In this new paradigm, the new software architectures will have nothing to do with the old design patterns of the 90s, but will be structured around the most innovative Cloud technologies.
