Is AWS secure? It is a question we have surely asked ourselves, or that someone has asked us. Since I started working in the AWS world, many people have assumed that security in the Cloud does not exist or is very weak.

I have to admit that before I started in this world, I had the same view. But it was a view based on my own ignorance and on certain statements I had read or heard that turned out to be wrong.

In this post, we explain some security concepts in AWS, review how AWS works, and look at how we can secure our workloads as much as possible.

Making requests to AWS

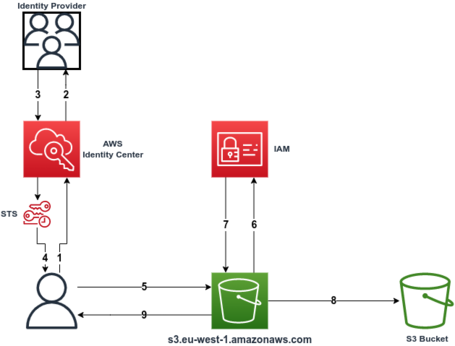

The first thing is to understand how a request works in AWS. We will walk through a call to create an S3 bucket and, from there, see how we can secure AWS and manage its security.

In this example, we use a user federated from an external Identity Provider through AWS Identity Center, the successor to AWS Single Sign-On.

The flow is the same whether we make the call via the console, the API, or the AWS CLI.

- First, we call AWS Identity Center to authenticate the user.

- AWS Identity Center connects to the Identity Provider we have configured, which requests the user's credentials in order to validate them.

- The Identity Provider validates the credentials and returns the authentication result to AWS Identity Center, which determines what the user can access based on the permissions granted in Identity Center.

- AWS Identity Center calls STS (Security Token Service) to generate a temporary credential, which is returned in response to the user's initial request and can be reused for a short period (from 1 hour up to a maximum of 12 hours, depending on configuration).

This first flow corresponds to a login in the console, a login via the CLI, or an AssumeRoleWithSAML call via the API, and it only needs to be done once: the token can be reused for as long as it remains valid.

With this token, we assume a role within an AWS account with the permissions assigned to that role.

- With this temporary credential, we send the request to create an S3 bucket to the S3 endpoint in our region.

- S3 calls IAM to authorize the request, based on the permissions of the IAM role assumed with the credentials.

- IAM authorizes the request and returns the result to S3.

- Finally, S3 creates the bucket and returns the response to the request.

This flow is deliberately simplified to make it as easy as possible to explain (the real process is somewhat more complex).

AWS Signature Version 4 (AWS SigV4) is the process used to sign every request sent to AWS, and AWS has used it for over ten years without incident.

Each request gets a different signature, even when the same request is repeated, because the signature covers the request timestamp. Brute-forcing the signing key is computationally infeasible, even with a great deal of computing power.
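
The signing is done on the client side (the SDKs and the AWS CLI do it for us). Below is a minimal Python sketch of the SigV4 key derivation chain and final HMAC, assuming the canonical request has already been built. The secret key, bucket host, and canonical request are hypothetical and simplified (a real S3 request also signs headers such as `x-amz-date`); the point is to show why two otherwise identical requests get different signatures: the timestamp is part of the string to sign.

```python
import hashlib
import hmac


def _hmac(key: bytes, msg: str) -> bytes:
    """HMAC-SHA256 of a UTF-8 message, returning raw bytes."""
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def signing_key(secret_key: str, date: str, region: str, service: str) -> bytes:
    """Derive the SigV4 signing key through a chain of HMAC-SHA256 operations."""
    k_date = _hmac(("AWS4" + secret_key).encode("utf-8"), date)  # date = YYYYMMDD
    k_region = _hmac(k_date, region)
    k_service = _hmac(k_region, service)
    return _hmac(k_service, "aws4_request")


def sign(secret_key: str, amz_date: str, region: str, service: str,
         canonical_request: str) -> str:
    """Return the hex signature for an already-built canonical request."""
    date = amz_date[:8]
    scope = f"{date}/{region}/{service}/aws4_request"
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256",
        amz_date,  # request timestamp, e.g. 20240101T120000Z
        scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])
    key = signing_key(secret_key, date, region, service)
    return hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical values; the canonical request is simplified for illustration.
secret = "EXAMPLE-SECRET-KEY"
canonical = ("PUT\n/\n\nhost:example-bucket.s3.eu-west-1.amazonaws.com\n\n"
             "host\nUNSIGNED-PAYLOAD")
s1 = sign(secret, "20240101T120000Z", "eu-west-1", "s3", canonical)
s2 = sign(secret, "20240101T120001Z", "eu-west-1", "s3", canonical)
print(s1 != s2)  # one second later, the same request has a new signature
```

This is for understanding only; in practice, let the SDK or CLI build and sign requests.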

If you want more information on this topic, I recommend Eric Brandwine's session about it.

This type of flow is highly secure, and we will now go through its advantages.

Securing actions via IAM and SCPs

First of all, when using AWS Identity Center, in addition to being able to authenticate with our own Identity Provider (IdP), we use AWS STS to generate secure, temporary tokens. These tokens have a limited duration, so even if someone manages to steal one (for example, because a user publishes it by mistake), it expires automatically after a few hours. It is also possible to revoke temporary credentials if necessary.
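
As a small illustration of why a leaked temporary credential has a limited blast radius, this Python sketch computes when a session expires and when a client should refresh it. The 1 to 12 hour range comes from the session duration described above; the five-minute refresh margin and the function names are hypothetical choices for the example.

```python
from datetime import datetime, timedelta, timezone


def session_expiration(issued_at: datetime, duration_hours: int) -> datetime:
    """Expiration of a temporary session with a 1-12 hour duration."""
    if not 1 <= duration_hours <= 12:
        raise ValueError("session duration must be between 1 and 12 hours")
    return issued_at + timedelta(hours=duration_hours)


def needs_refresh(expiration: datetime, now: datetime,
                  margin: timedelta = timedelta(minutes=5)) -> bool:
    """True if the credential has expired or will expire within `margin`."""
    return now >= expiration - margin


issued = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
expires = session_expiration(issued, 1)
print(expires, needs_refresh(expires, datetime.now(timezone.utc)))
```

Once the expiration passes, the stolen token is simply useless; nobody has to remember to rotate it.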

In addition, this model requires that each request be explicitly allowed by a policy attached to the IAM role or user, and that it not be explicitly denied by any other policy.
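
To make that evaluation order concrete, here is a deliberately simplified Python model of it: an explicit Deny always wins, otherwise an explicit Allow grants access, and anything else is an implicit deny. It is only a sketch; it ignores conditions, principals, NotAction/NotResource, SCPs, permissions boundaries, and session policies, and the bucket names are hypothetical.

```python
from fnmatch import fnmatchcase


def _matches(patterns, value, case_sensitive=True):
    """Wildcard match of a value against one pattern or a list of patterns."""
    if isinstance(patterns, str):
        patterns = [patterns]
    if not case_sensitive:  # IAM action names are case-insensitive
        value = value.lower()
        patterns = [p.lower() for p in patterns]
    return any(fnmatchcase(value, p) for p in patterns)


def evaluate(policies, action, resource):
    """Simplified IAM logic: explicit Deny > explicit Allow > implicit deny."""
    allowed = False
    for policy in policies:
        statements = policy.get("Statement", [])
        if isinstance(statements, dict):  # a single statement object is valid JSON
            statements = [statements]
        for stmt in statements:
            if not _matches(stmt.get("Action", []), action, case_sensitive=False):
                continue
            if not _matches(stmt.get("Resource", []), resource):
                continue
            if stmt["Effect"] == "Deny":
                return "Deny"  # an explicit deny always wins
            if stmt["Effect"] == "Allow":
                allowed = True
    return "Allow" if allowed else "ImplicitDeny"


role_policies = [
    {"Statement": [{"Effect": "Allow", "Action": "s3:CreateBucket",
                    "Resource": "arn:aws:s3:::*"}]},
    {"Statement": {"Effect": "Deny", "Action": "s3:*",
                   "Resource": "arn:aws:s3:::prod-*"}},
]
print(evaluate(role_policies, "s3:CreateBucket", "arn:aws:s3:::my-bucket"))
```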

IAM is highly flexible: we can restrict specific actions or grant different levels of access with very fine granularity.

And that is before getting into permissions boundaries, a powerful feature for delegating IAM administration to other users while limiting the permissions they can grant.
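
The key idea behind permissions boundaries is an intersection: an action is allowed only if both the identity-based policy and the boundary allow it. The Python sketch below models just that rule on action names (no resources, conditions, or denies), and the boundary contents are hypothetical. When delegating IAM administration, the requirement that new roles carry a boundary is typically enforced with the `iam:PermissionsBoundary` condition key.

```python
from fnmatch import fnmatchcase


def allows(patterns, action):
    """True if any action pattern (with * wildcards) matches, case-insensitively."""
    action = action.lower()
    return any(fnmatchcase(action, p.lower()) for p in patterns)


def effective_allow(identity_actions, boundary_actions, action):
    """With a boundary, BOTH the identity policy and the boundary must allow."""
    return allows(identity_actions, action) and allows(boundary_actions, action)


# Hypothetical boundary for roles created by a delegated administrator.
BOUNDARY = ["s3:*", "dynamodb:*", "logs:*"]

# Even a role whose identity policy grants "*" stays inside the boundary.
print(effective_allow(["*"], BOUNDARY, "s3:CreateBucket"))
print(effective_allow(["*"], BOUNDARY, "iam:CreateUser"))
```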

It is also possible to deny specific actions across the organization with a feature of AWS Organizations called Service Control Policies (SCPs), which can block actions at the level of an AWS account, an OU (a grouping of accounts, similar to a folder), or the entire organization.

In this way, we can restrict specific actions, such as preventing users from creating infrastructure that gives a VPC direct access to the Internet, or preventing certain services from being used without a required configuration.

SCPs can restrict specific actions in many different ways and are extremely powerful. Apart from building your own SCPs, you can find plenty of handy examples in the AWS documentation, in aws-samples, and in the Control Tower documentation.
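
As an example of the Internet-access case mentioned above, this Python snippet builds an SCP document that denies creating or attaching Internet gateways to every principal except a hypothetical `NetworkAdmin` role. It is a sketch under that assumption, not a complete guardrail (for instance, it does not cover other paths to the Internet).

```python
import json

# Hypothetical role that is allowed to manage Internet access centrally.
NETWORK_ADMIN_ROLE = "arn:aws:iam::*:role/NetworkAdmin"

deny_internet_gateways = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyDirectInternetAccess",
            "Effect": "Deny",
            "Action": [
                "ec2:CreateInternetGateway",
                "ec2:AttachInternetGateway",
            ],
            "Resource": "*",
            # Exempt the central networking role from the deny.
            "Condition": {
                "ArnNotLike": {"aws:PrincipalArn": NETWORK_ADMIN_ROLE}
            },
        }
    ],
}

print(json.dumps(deny_internet_gateways, indent=2))
```

The resulting JSON can be saved to a file and created as a policy of type `SERVICE_CONTROL_POLICY` in AWS Organizations (for example with `aws organizations create-policy`), then attached to an account, an OU, or the root.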

Auditing resources with CloudTrail and Config

We have already seen that, thanks to the AWS request flow, we can block specific actions. But what if we cannot block them all, or we want more control over them?

The next step is CloudTrail. If you have not yet enabled it in your accounts, it is time to do so: it is free (the first copy of management events delivered by a trail costs nothing), and without it you have no audit of your events.

CloudTrail automatically records the events generated in our AWS account. It can be centralized using AWS Organizations and offers different configuration levels, but above all it allows us to audit all the requests made to AWS services in our account.
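
CloudTrail log files are JSON documents with a `Records` array, and each record says who did what, when, and from where. This Python sketch reduces a record to those audit questions; the sample record is hypothetical, and the set of denial error codes is an assumption covering common cases rather than an exhaustive list.

```python
import json

# Common error codes for denied calls (not exhaustive).
DENIED_CODES = {"AccessDenied", "Client.UnauthorizedOperation"}


def summarize(record):
    """Return who did what, when, and from where for one CloudTrail record."""
    identity = record.get("userIdentity", {})
    return {
        "when": record.get("eventTime"),
        "who": identity.get("arn", identity.get("type")),
        "action": f'{record.get("eventSource")}:{record.get("eventName")}',
        "from_ip": record.get("sourceIPAddress"),
        "region": record.get("awsRegion"),
        "denied": record.get("errorCode") in DENIED_CODES,
    }


# Hypothetical log file content with a single CreateBucket event.
SAMPLE_LOG = """{"Records": [{
  "eventTime": "2024-01-01T12:00:00Z",
  "eventSource": "s3.amazonaws.com",
  "eventName": "CreateBucket",
  "awsRegion": "eu-west-1",
  "sourceIPAddress": "203.0.113.10",
  "userIdentity": {"type": "AssumedRole",
                   "arn": "arn:aws:sts::111122223333:assumed-role/Developer/alice"}
}]}"""

events = [summarize(r) for r in json.loads(SAMPLE_LOG)["Records"]]
for event in events:
    print(event)
```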

Some security consultants say that CloudTrail is all very well, but it does not block improper actions; it only tells us about them. However, there are other AWS services we can combine with CloudTrail.

Amazon EventBridge (previously known as CloudWatch Events) is a marvel that simplifies a lot of this. It is an event bus that lets us connect AWS services to each other.

With Amazon EventBridge, we can capture the events logged in CloudTrail and generate calls to services such as Lambda.

In this way, we can evaluate the different actions performed in our accounts and even trigger remediation actions.

For example, a common security concern is someone creating a Security Group that opens sensitive ports (SSH and RDP, for example) to everyone. With this approach, we can build an automation that checks whenever a rule is created or modified and, if the rule meets certain criteria, deletes it and notifies the person responsible for the account that this remediation has been executed.
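
Here is a minimal sketch of that automation in Python. The EventBridge pattern matches CloudTrail records of `AuthorizeSecurityGroupIngress` calls; the detection logic assumes the CloudTrail `requestParameters` shape (lists wrapped in `{"items": [...]}`), which you should verify against your own events. Modifications through `ModifySecurityGroupRules` have a different shape and are not handled here. The `revoke` and `notify` callables are hypothetical stand-ins: in a real Lambda they would wrap the EC2 `revoke_security_group_ingress` call (after converting the permissions to its expected format) and an SNS `publish`.

```python
# EventBridge rule pattern: CloudTrail records of new Security Group ingress rules.
SG_INGRESS_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["ec2.amazonaws.com"],
        "eventName": ["AuthorizeSecurityGroupIngress"],
    },
}

SENSITIVE_PORTS = {22, 3389}  # SSH and RDP
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}  # anyone on the Internet


def _items(container):
    """CloudTrail wraps lists as {"items": [...]}; tolerate missing keys."""
    return (container or {}).get("items", [])


def risky_permissions(event):
    """Return ingress permissions that open SSH/RDP to the whole Internet."""
    params = event.get("detail", {}).get("requestParameters") or {}
    risky = []
    for perm in _items(params.get("ipPermissions")):
        if str(perm.get("ipProtocol")) == "-1":
            covers_port = True  # "-1" means all protocols and ports
        else:
            low, high = perm.get("fromPort"), perm.get("toPort")
            covers_port = (low is not None and high is not None
                           and any(low <= p <= high for p in SENSITIVE_PORTS))
        cidrs = {r.get("cidrIp") for r in _items(perm.get("ipRanges"))}
        cidrs |= {r.get("cidrIpv6") for r in _items(perm.get("ipv6Ranges"))}
        if covers_port and cidrs & OPEN_CIDRS:
            risky.append(perm)
    return risky


def remediate(event, revoke, notify):
    """Revoke risky rules and notify the account owner; returns how many."""
    params = event.get("detail", {}).get("requestParameters") or {}
    group_id = params.get("groupId")
    risky = risky_permissions(event)
    if risky:
        revoke(group_id, risky)
        notify(f"Revoked {len(risky)} ingress rule(s) open to the Internet on {group_id}")
    return len(risky)
```

Keeping the AWS calls behind injected functions makes the decision logic easy to test without touching a real account.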

This is one of the significant advantages of the cloud: we can evaluate many actions this way and generate remediations automatically, using two services with a very low cost, EventBridge and Lambda.

Writing these evaluation rules in Lambda may seem very complex, especially if we do not have a team to develop them, but for this we have AWS Config.

AWS Config is a service that evaluates all our resources and their configuration changes based on rules that we can configure.

AWS provides many managed rules for different use cases, which you can consult in the AWS Config documentation. There are also Conformance Packs, which bundle rules for different use cases.

Based on these rules, we can evaluate whether our resources meet our standards and, where possible, execute remediation actions. For these actions, we can use either Lambda or Systems Manager Automation, which runs runbooks developed by AWS for a multitude of remediation actions.
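
To show what the core of a custom Config rule looks like, here is a Python sketch of the evaluation step: take one configuration item and return a compliance type (`COMPLIANT`, `NON_COMPLIANT`, or `NOT_APPLICABLE`). The tagging standard is hypothetical, and for this exact case AWS already offers the managed rule `required-tags`. A real Lambda-backed rule would also parse the invoking event and report results with the Config `put_evaluations` API.

```python
REQUIRED_TAGS = {"Owner", "Environment"}  # hypothetical tagging standard
DELETED = {"ResourceDeleted", "ResourceDeletedNotRecorded"}


def evaluate_compliance(configuration_item):
    """Return a Config compliance type for one configuration item."""
    if configuration_item.get("configurationItemStatus") in DELETED:
        return "NOT_APPLICABLE"  # nothing left to evaluate
    if configuration_item.get("resourceType") != "AWS::EC2::Instance":
        return "NOT_APPLICABLE"  # this rule only covers EC2 instances
    tags = configuration_item.get("tags") or {}
    missing = REQUIRED_TAGS - tags.keys()
    return "COMPLIANT" if not missing else "NON_COMPLIANT"


print(evaluate_compliance({
    "resourceType": "AWS::EC2::Instance",
    "configurationItemStatus": "OK",
    "tags": {"Owner": "team-a"},
}))
```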

As an important note, AWS Config is used by many CSPM (Cloud Security Posture Management) tools to evaluate our resources. It is also essential to know that it is a service that can sometimes have relatively high costs.

Inspecting our resources with Amazon Inspector and Systems Manager

OK, we have already seen that actions within AWS are covered, but what about EC2 instances and the code they run?

This is where other fantastic AWS services come in. The first is Amazon Inspector, which analyzes AWS workloads for vulnerabilities.

This service can find vulnerabilities in EC2 instances, in container images stored in ECR (Elastic Container Registry, AWS's registry service for containers) and, since relatively recently, in the code deployed to Lambda functions.

It is a very useful service and, like other AWS services, it integrates with services such as Lambda and Systems Manager to automate remediation.

We have also mentioned Systems Manager, an incredible service with many modules, several of which are very interesting for security.

The first is Patch Manager, which lets us manage our fleet of EC2 instances, keeping them up to date and applying patches automatically.

It is simple to use yet very powerful. We can define maintenance windows for each application, create different windows by environment type and OS, and even define patching tiers so that potentially disruptive updates are first tested on a small number of instances.
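
One way to express those patching tiers is through the approval rules of a patch baseline, where `ApproveAfterDays` delays approval after a patch's release date. The Python sketch below mirrors that `ApprovalRules` shape for three hypothetical tiers (a canary group, dev, and prod) and computes when a patch becomes approved for each; the tier names, day counts, and the `Security` classification filter are assumptions for the example.

```python
from datetime import date, timedelta


def security_rule(approve_after_days):
    """One approval rule in the ApprovalRules shape used by patch baselines."""
    return {
        "PatchFilterGroup": {
            "PatchFilters": [{"Key": "CLASSIFICATION", "Values": ["Security"]}]
        },
        "ApproveAfterDays": approve_after_days,
    }


# Hypothetical tiers: a small canary group is patched first, production last.
APPROVAL_RULES = {
    "canary": {"PatchRules": [security_rule(0)]},
    "dev": {"PatchRules": [security_rule(3)]},
    "prod": {"PatchRules": [security_rule(7)]},
}


def approval_date(release_date, tier):
    """Date from which a patch released on `release_date` is approved for `tier`."""
    days = APPROVAL_RULES[tier]["PatchRules"][0]["ApproveAfterDays"]
    return release_date + timedelta(days=days)


released = date(2024, 1, 1)
for tier in APPROVAL_RULES:
    print(tier, approval_date(released, tier))
```

Each tier would map to its own patch baseline and maintenance window, so a problematic update surfaces in the canary group days before it reaches production.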

Another service that is rarely used but that I consider essential is Session Manager, which lets us access our instances with our IAM credentials, without using the SSH protocol. This is incredibly useful because it provides much more secure access to our instances without exposing them in any way. The instances only need access to the SSM service, which can be done entirely privately using VPC Endpoints.

The same capability also exists for Windows instances without using the RDP protocol; in this case, it is called Fleet Manager.

The most impressive thing about Systems Manager is that the modules we have discussed are free.
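
For fully private access, the VPC needs interface endpoints for the Systems Manager services. This small Python helper lists the endpoint service names commonly documented for Session Manager (`ssm`, `ssmmessages`, and `ec2messages`); treat the list as an assumption to check against the current AWS documentation for your agent version, and add others (for example for KMS or logging) if you use those features.

```python
def session_manager_endpoints(region):
    """Interface endpoint service names commonly needed for private Session Manager."""
    return [f"com.amazonaws.{region}.{svc}"
            for svc in ("ssm", "ssmmessages", "ec2messages")]


for name in session_manager_endpoints("eu-west-1"):
    print(name)
```

With the endpoints in place, a session can be opened with `aws ssm start-session --target <instance-id>`, provided the Session Manager plugin for the AWS CLI is installed.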

Automating the analysis of security events with Amazon GuardDuty

We have many services that support us proactively, but what if someone gets into our accounts and starts doing harm?

Here we have one of the essential AWS security services, Amazon GuardDuty, which uses machine learning and threat intelligence to detect threats.

This sounds great, but how does it work? The service identifies inappropriate usage patterns within AWS. This is feasible because it draws on security signals from all existing AWS accounts. AWS cannot access your data, but it does have visibility over the actions carried out on its services, so it is relatively “easy” to identify inappropriate usage patterns, especially when applying machine learning to massive amounts of data. If improper use is detected in one account, the pattern can be identified, checked to see whether it repeats in other AWS accounts, and used to alert those users, which mitigates the problem.

It is a remarkable service that quickly detects inappropriate activity.

Many people do not use the service because they assume it is expensive, but it is not. On the contrary, it is a very cheap service, considering it can save us many problems by detecting inappropriate use, even from within our organization.
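
GuardDuty publishes its findings to EventBridge, so the same automation approach used for Security Groups applies here. Below is a Python sketch of a rule pattern that only matches findings with a severity of 7 or more, plus a local mirror of that logic that is handy for testing. The threshold is a hypothetical choice, and the severity bands (Low 1.0 to 3.9, Medium 4.0 to 6.9, High from 7.0) are the long-standing documented ones; newer finding types may use additional levels.

```python
# EventBridge rule pattern: only GuardDuty findings with severity >= 7.
GUARDDUTY_HIGH_PATTERN = {
    "source": ["aws.guardduty"],
    "detail-type": ["GuardDuty Finding"],
    "detail": {"severity": [{"numeric": [">=", 7]}]},
}


def severity_band(score):
    """Map a GuardDuty numeric severity to a label (7.0+ treated as High here)."""
    if score >= 7.0:
        return "High"
    if score >= 4.0:
        return "Medium"
    return "Low"


def should_page(event, threshold=7.0):
    """Local mirror of the pattern above, useful to test routing logic."""
    return (event.get("source") == "aws.guardduty"
            and event.get("detail-type") == "GuardDuty Finding"
            and event.get("detail", {}).get("severity", 0) >= threshold)
```

The rule's target could be the same Lambda and SNS combination used earlier, so high-severity findings reach the account owner within minutes.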

Besides this service, AWS has an internal security team called Ghostbusters, which is the last escalation point for AWS security events, and it is incredible. I recommend watching the session in which they talk about this team and how they handled an event like Log4Shell.

So, is AWS secure?

We have only talked about a few of the services involved in security within AWS, but there are many more, such as Macie (a service to detect sensitive data), Secrets Manager (for password management), Network Firewall (a perimeter firewall solution), WAF (Web Application Firewall with managed rules from AWS or third parties), Firewall Manager (for centralized management of security rules, WAF, etc.), AWS Shield Advanced (enhanced protection against DDoS attacks), and many more.

This gives us an idea of the level of security we can achieve in AWS, which can be extremely high if we implement AWS's security services.

In addition, the advantage is not only the level of security and alerting we can have, but also the possibility of automatic remediation, which is an excellent advantage in the AWS world.

Automating incident discovery and remediation, and thereby minimizing response time, is a huge advantage.

It is also common to think that we require a level of security that AWS cannot provide. For these cases, I recommend checking who uses the AWS Secret Regions.

A secure environment must also be usable, and that is the most complicated part. Building a secure environment without sacrificing usability is very complex, but AWS tools make it easier to reach an environment that is both secure and functional.

There are many hyper-secure environments out there, but they are not very usable. Unfortunately, this often leads users to look for less secure alternatives that security teams are not even aware of.

In AWS, we can have a usable environment while, at the same time, controlling and securing it.

In conclusion, AWS is not only secure, but it can also help us increase the security of our environment and reach very high levels of security through automation.
