More and more companies are opting for microservice-based architectures. Because these microservices are highly specialised, fulfilling a business function often requires orchestrating several of them. In response to this need, Netflix recently released Conductor, a new product within the Netflix OSS ecosystem. It implements a flow orchestrator that runs in cloud environments and implements each task through microservices. In this post we will see how it works.

Orchestration of microservices with Conductor

Conductor orchestrates calls to multiple microservices so that consumers get all the functionality they need without having to make calls that add no value for them. For example, a user who has already logged in with their username and password and simply wants to see their total balance on screen should not have to insert an access record in the audit table or look up the internal identifier of their accounts.

The objective of Conductor is to offer this functionality while making the interactions between microservices easier to control and visualise. The orchestration it proposes is asynchronous by design, but it can be performed synchronously if necessary.

Netflix has been using Conductor for a year, during which it has run 2.6 million process flows of all kinds. Notably, it has been used for flows with high processing complexity, such as ingesting information from data providers or encoding and storing video.

How does Conductor do it?

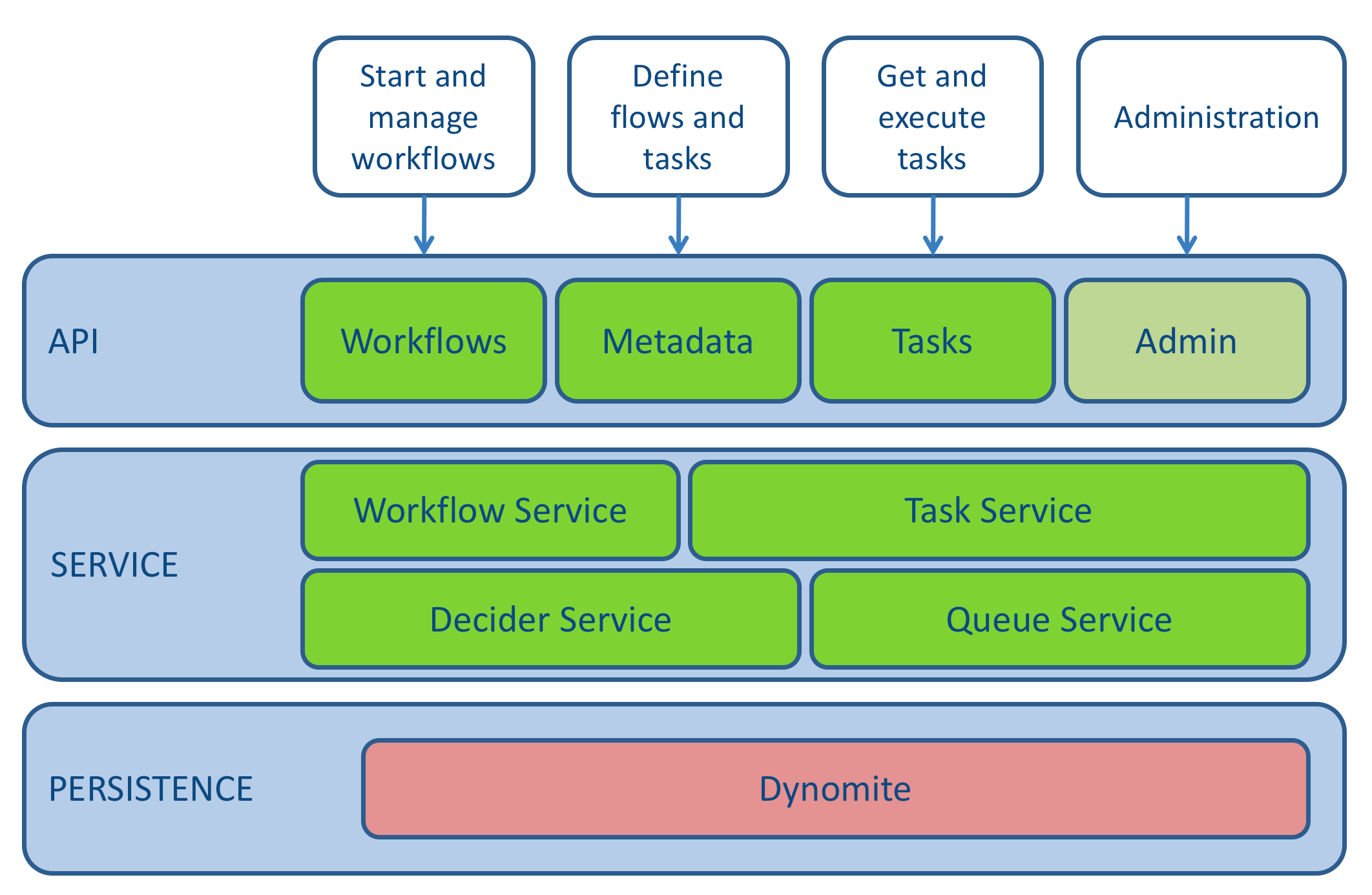

Conductor is based on the following architecture:

Netflix Conductor Architecture

API

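- Workflows: allows management of workflow executions (for example starting, pausing, resuming and terminating them).

- Metadata: allows management of workflow and task definitions.

- Tasks: allows management of task execution data (workers use it to poll for pending tasks and to update their status).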

- Admin: additional API focused on administration. It allows configuration data to be retrieved, workflows to be purged and all queued tasks to be listed.

The server is accessed through an HTTP load balancer or through a service discovery product (Eureka in the case of Netflix OSS).

Service

The service layer is based on microservices implemented in Java. Given its stateless nature, multiple servers can be deployed to scale and ensure the availability and response of the system.

Persistence

Conductor can work with several persistence modes:

- In-memory: only recommended for unit test environments. The configuration builds a local Dynomite implementation.

- Redis: recommended for development and integration environments, due to its speed and easily configured persistence capabilities.

- Dynomite: default implementation for use in production. Dynomite is a persistence layer that provides a distributed, self-scaling database on top of storage engines that lack these features. The implementation that Netflix uses is based on Redis, though Memcached could also be used.

Conductor also allows other backends to be used by implementing its database access interfaces (DAO). Any individual layer, such as the cache or the queues, can be reimplemented while keeping the default implementation for persisting execution data.

Configuration

Configuration is done through a config.properties configuration file, which is passed as a parameter when the server is started.

What does Conductor provide?

The fundamental purpose of Conductor is to perform the orchestration of microservices by facilitating the creation of workflows.

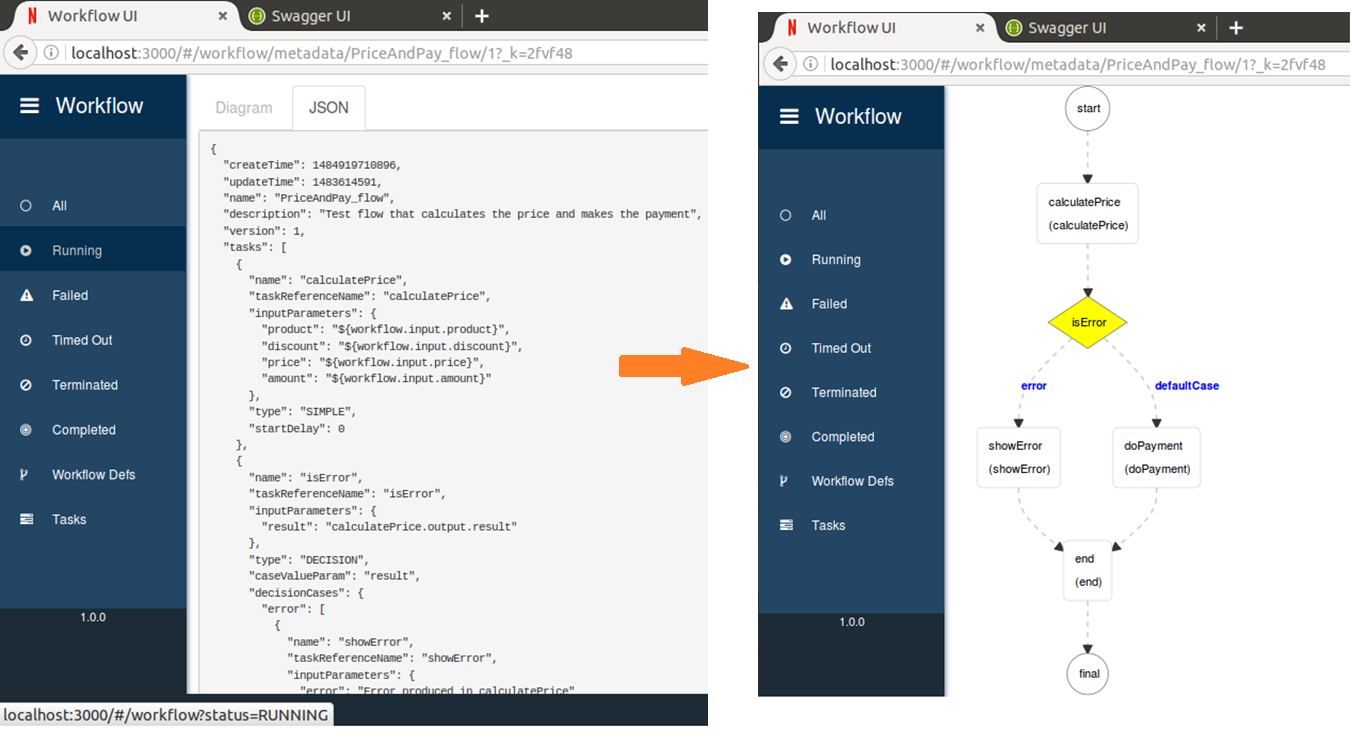

These workflows are defined simply, in JSON format, using different kinds of tasks. If you are used to process automation tools, you will miss a graphical editor, since Conductor does not have one (for either workflows or task definitions), although it does provide a graphical display of the flow. This display lets business people easily review implemented workflows, and creating or modifying them requires only minimal technical knowledge.

Tasks

Tasks must be registered in the engine before they are used in any flow. This registration can be done programmatically or through the REST API for Metadata management.

There are two main types of task:
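As a rough sketch of the REST route, the snippet below builds (without sending) a request that registers one task definition. The `/api` prefix, the task name `get_account_id` and the retry value are assumptions made for illustration; the `/metadata/taskdefs` path and the `name`/`retryCount` fields follow the Conductor metadata API as we understand it, so check them against the version you deploy.

```python
import json
import urllib.request

# Assumed base URL: the article only states that the APIs listen on localhost:8080.
CONDUCTOR_API = "http://localhost:8080/api"


def build_register_tasks_request(task_defs):
    """Build (but do not send) a POST that registers a list of task definitions."""
    body = json.dumps(task_defs).encode("utf-8")
    return urllib.request.Request(
        url=f"{CONDUCTOR_API}/metadata/taskdefs",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical task definition, used only for this example.
task_defs = [{"name": "get_account_id", "retryCount": 3}]
request = build_register_tasks_request(task_defs)
print(request.get_method(), request.full_url)
# To actually register the task against a running server:
# urllib.request.urlopen(request)
```

Building the request separately from sending it keeps the example runnable without a Conductor server.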

- System tasks: tasks focused on workflow control. This includes the following types:

  - Fork: creates a parallel fork.

  - Fork_join_dynamic: similar to fork, but performs the parallelisation according to the entry expression.

  - Join: waits for the parallel branches opened by a fork to finish before the flow continues.

  - Decide: conditional, similar to a switch/case statement.

  - Sub_workflow: runs a workflow as a task. The parent workflow remains stopped until the sub-flow finishes.

  - Wait: introduces an asynchronous stop point in the workflow. The task stays in an in-progress status until it is updated with a completed or failed status.

  - HTTP: calls a microservice over HTTP. This invocation is governed by the retry policy declared when defining the task.

- Simple tasks: tasks implemented in a worker. These are the tasks focused on the business itself, and they are designed to run in a system separate from Conductor. Workers communicate with Conductor through polling: they retrieve the tasks scheduled for them, execute them and return them updated. At that point, Conductor continues the workflow with whatever follows the updated task.
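To make the fork and join system tasks more concrete, here is a minimal sketch of the fragment of a workflow definition that runs two simple tasks in parallel and then waits for both. The task names and reference names are hypothetical; the type identifiers (`FORK_JOIN`, `JOIN`, `SIMPLE`) and the `forkTasks`/`joinOn` fields are the ones we believe the Conductor JSON DSL uses, so treat them as assumptions to verify.

```python
import json


def simple_task(name, ref):
    """Return a worker-implemented (SIMPLE) task entry for a workflow definition."""
    return {"name": name, "taskReferenceName": ref, "type": "SIMPLE"}


# Each inner list of forkTasks is one parallel branch.
fork = {
    "name": "fork_balances",
    "taskReferenceName": "fork_balances_ref",
    "type": "FORK_JOIN",
    "forkTasks": [
        [simple_task("get_savings_balance", "savings_ref")],
        [simple_task("get_card_balance", "card_ref")],
    ],
}

# The join names the last task of every branch it has to wait for.
join = {
    "name": "join_balances",
    "taskReferenceName": "join_balances_ref",
    "type": "JOIN",
    "joinOn": [branch[-1]["taskReferenceName"] for branch in fork["forkTasks"]],
}

tasks_fragment = [fork, join]
print(json.dumps(tasks_fragment, indent=2))
```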

In addition to the tasks mentioned, new system tasks with custom behaviour can be implemented according to the needs of the project. To do so, you extend com.netflix.conductor.core.execution.tasks.WorkflowSystemTask and instantiate the new class on start-up using an eager singleton pattern.

Tasks can have various retry policies in case of failure:

- Flag a workflow as TIMED_OUT.

- Retry: retry the task up to a maximum number of attempts, set by a parameter on each task.

- Alert: records a counter of retries.
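The three policies above might be declared on a task definition roughly as follows. This is a sketch under assumptions: the policy identifiers (`TIME_OUT_WF`, `RETRY`, `ALERT_ONLY`) and the field names are the ones we understand the task-definition schema to use, and the helper `make_task_def`, the task name and the numeric values are hypothetical.

```python
import json

# Policy identifiers as we understand the task-definition schema (assumption).
TIMEOUT_POLICIES = {
    "TIME_OUT_WF": "flag the whole workflow as TIMED_OUT",
    "RETRY": "retry the task, up to retryCount attempts",
    "ALERT_ONLY": "only record a counter",
}


def make_task_def(name, timeout_policy, retry_count=3, timeout_seconds=60):
    """Build a task definition dict, rejecting unknown timeout policies."""
    if timeout_policy not in TIMEOUT_POLICIES:
        raise ValueError(f"unknown timeoutPolicy: {timeout_policy}")
    return {
        "name": name,
        "retryCount": retry_count,
        "retryLogic": "FIXED",
        "retryDelaySeconds": 5,
        "timeoutSeconds": timeout_seconds,
        "timeoutPolicy": timeout_policy,
    }


task_def = make_task_def("get_total_balance", "RETRY")
print(json.dumps(task_def, indent=2))
```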

Flows

Conductor workflows are defined in JSON and reference the previously registered tasks.
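A minimal sketch of such a definition, built as a Python dictionary and serialised to JSON, could look like the one below. The workflow and task names are hypothetical (they follow the total-balance example from the introduction), and the field names are the ones we believe the Conductor DSL expects. The `${...}` expressions are the data-binding syntax covered in the Data exchange section.

```python
import json

workflow_def = {
    "name": "total_balance",  # hypothetical workflow name
    "description": "Looks up the account and returns its total balance",
    "version": 1,
    "schemaVersion": 2,
    "tasks": [
        {
            "name": "get_account_id",  # must already be registered as a task
            "taskReferenceName": "account_ref",
            "type": "SIMPLE",
            "inputParameters": {"username": "${workflow.input.username}"},
        },
        {
            "name": "get_total_balance",
            "taskReferenceName": "balance_ref",
            "type": "SIMPLE",
            "inputParameters": {"accountId": "${account_ref.output.accountId}"},
        },
    ],
    "outputParameters": {"balance": "${balance_ref.output.total}"},
}

# Reference names identify each task inside the flow, so they must be unique.
reference_names = [task["taskReferenceName"] for task in workflow_def["tasks"]]
assert len(reference_names) == len(set(reference_names))

print(json.dumps(workflow_def, indent=2))
```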

Data exchange

Conductor makes it simple to exchange data between the different activities of a flow. There are two data contexts: workflow and task. The flow receives some input data, which it can propagate to its activities through the input parameters of each task. There is also output data, at both the task and the workflow level.

Data binding is performed with JSONPath, a DSL for JSON that implements most of the functionality XPath offers for XML. The syntax is the following:

${SOURCE.input/output.JSONPath}

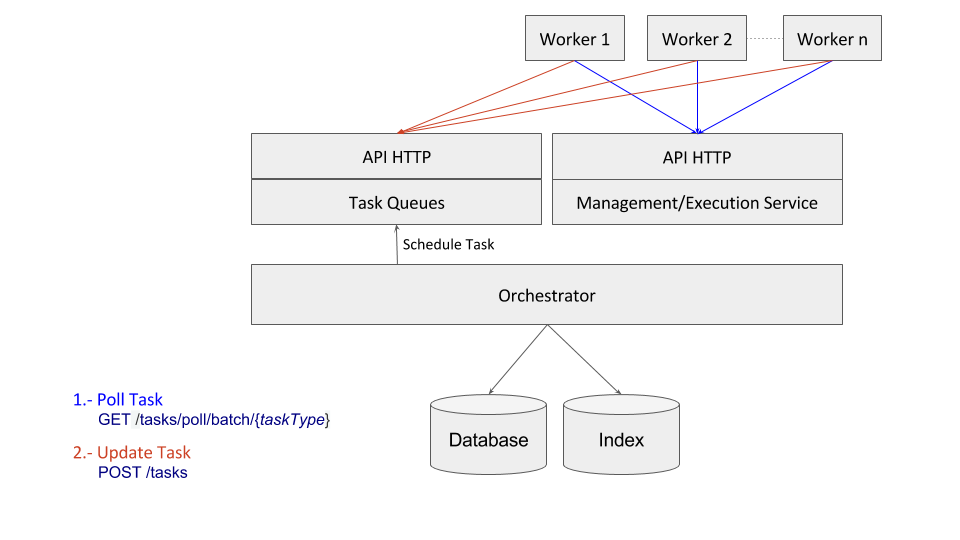
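To illustrate how an expression of this form is bound to data, here is a deliberately simplified resolver. It only handles plain dotted paths, not full JSONPath, and it is not Conductor's implementation; the function `resolve` and the sample context are hypothetical.

```python
import re

# Matches a whole expression of the form ${source.input.some.path}
_EXPRESSION = re.compile(r"\$\{([^}]+)\}")


def resolve(expression, context):
    """Resolve '${source.input|output.dotted.path}' against a nested dict."""
    match = _EXPRESSION.fullmatch(expression)
    if match is None:
        return expression  # plain literal: nothing to bind
    value = context
    for key in match.group(1).split("."):
        value = value[key]  # walk one level deeper per path segment
    return value


# Sample execution context: workflow input plus the output of an earlier task.
context = {
    "workflow": {"input": {"username": "alice"}},
    "account_ref": {"output": {"accountId": "ACC-42"}},
}

print(resolve("${workflow.input.username}", context))       # alice
print(resolve("${account_ref.output.accountId}", context))  # ACC-42
```

Here SOURCE is either `workflow` or the reference name of a task that ran earlier, which is how the output of one task becomes the input of the next.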

Execution Context

Conductor follows an RPC-based communication model in which the workers run on different machines from the Conductor server. The workers themselves poll the server over HTTP for tasks pending execution. After performing the action they implement, they inform the server that the task has completed so that the workflow can continue.

This model provides the following features:

- Highly scalable, given the persistence model and that task scheduling is done asynchronously through queues.

- Allows for a variety of implementations of each task, in a way that, without modifying the flow, the behaviour of a workflow can be modified by simply modifying the workers responsible for the task.
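The polling model can be sketched without any network at all. The class below is an in-memory stand-in for the server, not Conductor code, and every name in it is hypothetical; it only shows the poll, execute and update cycle, and how swapping the handler changes behaviour without touching the flow.

```python
import queue


class FakeConductorServer:
    """In-memory stand-in for the server: one queue of pending tasks per type."""

    def __init__(self):
        self.queues = {}
        self.results = {}

    def schedule(self, task_type, task_id, input_data):
        task = {"taskId": task_id, "inputData": input_data}
        self.queues.setdefault(task_type, queue.Queue()).put(task)

    def poll(self, task_type):
        try:
            return self.queues[task_type].get_nowait()
        except (KeyError, queue.Empty):
            return None  # nothing pending for this task type

    def update(self, task_id, status, output_data):
        self.results[task_id] = {"status": status, "outputData": output_data}


def run_worker_once(server, task_type, handler):
    """Poll once; if a task is pending, run the handler and report the result."""
    task = server.poll(task_type)
    if task is None:
        return False
    try:
        server.update(task["taskId"], "COMPLETED", handler(task["inputData"]))
    except Exception as error:
        server.update(task["taskId"], "FAILED", {"error": str(error)})
    return True


server = FakeConductorServer()
server.schedule("get_total_balance", "t1", {"balances": [10, 32]})
run_worker_once(server, "get_total_balance",
                lambda data: {"total": sum(data["balances"])})
print(server.results["t1"])
```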

What happens with the already existing services or those out of my control?

A natural question is what happens when the flow needs to call microservices that already exist, or that are outside my control, and which therefore cannot be modified to implement the behaviour required of workers.

In this case, there are two alternatives:

- Implementing a worker that takes charge of calling the existing microservice and returning the information.

- Invoking it through an HTTP-type task. The drawback of this option is that it follows a traditional model in which the Conductor server itself makes the HTTP call: the server becomes a point of failure and, when there are many HTTP tasks with long response times, a possible bottleneck.
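For the second alternative, an HTTP task entry in a workflow definition might look like the sketch below. The URL, task name and reference name are hypothetical, and the `http_request` input parameter with its `uri` and `method` keys reflects our understanding of the HTTP system task, so verify it against the documentation for your version.

```python
import json

http_task = {
    "name": "call_existing_service",  # hypothetical task name
    "taskReferenceName": "existing_service_ref",
    "type": "HTTP",
    "inputParameters": {
        "http_request": {
            # Hypothetical endpoint of a service we do not control.
            "uri": "http://localhost:8081/accounts/${workflow.input.accountId}",
            "method": "GET",
        }
    },
}

print(json.dumps(http_task, indent=2))
```

No worker is needed here because the server performs the call itself, which is exactly why it can become the bottleneck described above.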

Installation

Installation of Conductor requires:

- Database: Dynomite

- Indexing engine: Elasticsearch 2.X

- Web server: Tomcat, Jetty or a similar server running on JDK 1.8 or later.

The source code is available in the Netflix/conductor repository on GitHub.

To install Conductor the following steps must be followed:

- Clone the Conductor repository:

git clone git@github.com:Netflix/conductor.git

- Start the server (the APIs will be available at http://localhost:8080)

cd server

../gradlew server

- Start the UI (it will be available at http://localhost:3000)

cd ui

gulp watch

Docker

For a quick trial of Conductor in test mode, you can use the Docker build, which downloads the dependencies and builds the engine as well as the UI and Swagger to access the APIs. The components can be built independently or together.

Steps to start and execute a flow

- Start a flow

POST /workflow/{workflowName}

{

... // dataInput

}

- Create a worker for each task type used in the flow. This worker will need to:

a) Poll for tasks of its type.

GET /tasks/poll/batch/{taskType}

b) Perform the necessary actions.

c) Update the task status as “completed”.

POST /tasks

{

…

"outputData" : { ... },

"taskStatus": "COMPLETED"

}
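The steps above can be sketched as three request builders. The paths and the `outputData`/`taskStatus` fields are taken from the steps as written in this article; the `/api` prefix, the function names and the sample task are assumptions, and nothing is sent unless you pass the requests to `urllib.request.urlopen`.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "http://localhost:8080/api"  # assumed prefix, adjust to your server


def _json_post(path, payload):
    """Build a JSON POST request without sending it."""
    return urllib.request.Request(
        url=BASE_URL + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def start_workflow_request(workflow_name, input_data):
    """Step 1: start a flow with its input data."""
    return _json_post("/workflow/" + urllib.parse.quote(workflow_name, safe=""),
                      input_data)


def poll_request(task_type):
    """Step 2a: poll for pending tasks of one type."""
    path = "/tasks/poll/batch/" + urllib.parse.quote(task_type, safe="")
    return urllib.request.Request(BASE_URL + path, method="GET")


def complete_task_request(polled_task, output_data):
    """Step 2c: send the polled task back with its output and final status."""
    payload = dict(polled_task, outputData=output_data, taskStatus="COMPLETED")
    return _json_post("/tasks", payload)


# Hypothetical values, only to show the shape of each request.
start = start_workflow_request("total_balance", {"username": "alice"})
poll = poll_request("get_total_balance")
done = complete_task_request({"taskId": "t1"}, {"total": 42})
for req in (start, poll, done):
    print(req.get_method(), req.full_url)
```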

Conclusion

As you can see, Netflix continues making our lives easier by providing components that respond to the needs we encounter daily in the development of applications based on microservices. Now we just have to get to work and integrate it into our technology stack for future projects!
